This example requires a connected RealSense depth sensor.

The Intel RealSense depth sensor can stream live depth and color data. This example uses Rerun to visualize that data.

Logging and visualizing with Rerun

The RealSense sensor captures data in both RGB and depth formats, which are logged using the Image and DepthImage archetypes, respectively. Additionally, to provide a 3D view, the visualization includes a pinhole camera using the Pinhole and Transform3D archetypes.

The visualizations in this example were created with the following Rerun code.

RDF

To visualize the data using the RDF (X = Right, Y = Down, Z = Forward) coordinate convention:

rr.log("realsense", rr.ViewCoordinates.RDF, timeless=True)

Image

First, the pinhole camera is set up using the Pinhole and Transform3D archetypes. Then, the images captured by the RealSense sensor are logged as Image objects and associated with the frame number at which they were captured.

rgb_from_depth = depth_profile.get_extrinsics_to(rgb_profile)

rr.log(
    "realsense/rgb",
    rr.Transform3D(
        translation=rgb_from_depth.translation,
        mat3x3=np.reshape(rgb_from_depth.rotation, (3, 3)),
        from_parent=True,
    ),
    timeless=True,
)

rr.log(
    "realsense/rgb/image",
    rr.Pinhole(
        resolution=[rgb_intr.width, rgb_intr.height],
        focal_length=[rgb_intr.fx, rgb_intr.fy],
        principal_point=[rgb_intr.ppx, rgb_intr.ppy],
    ),
    timeless=True,
)

rr.set_time_sequence("frame_nr", frame_nr)
rr.log("realsense/rgb/image", rr.Image(color_image))
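
To make the role of the Pinhole parameters more concrete, here is a minimal sketch of the ideal pinhole model they describe, ignoring lens distortion. It is not part of the original example: the helper name `deproject_pixel` and the sample intrinsics are hypothetical, chosen only for illustration.

```python
def deproject_pixel(u, v, depth_m, fx, fy, ppx, ppy):
    """Back-project pixel (u, v) at depth_m meters into a camera-space 3D point.

    Uses the ideal pinhole model (no lens distortion). With the RDF
    convention, x points right, y points down, and z points forward.
    """
    x = (u - ppx) / fx * depth_m
    y = (v - ppy) / fy * depth_m
    return (x, y, depth_m)


# Hypothetical intrinsics for a 640x480 stream (not read from a real sensor).
fx, fy, ppx, ppy = 600.0, 600.0, 320.0, 240.0

# A pixel at the principal point lies on the optical axis.
center = deproject_pixel(320, 240, 2.0, fx, fy, ppx, ppy)

# A pixel 300 columns to the right of the principal point, at the same depth.
right = deproject_pixel(620, 240, 2.0, fx, fy, ppx, ppy)
```

The focal length scales pixel offsets into metric offsets, and the principal point marks where the optical axis meets the image, which is why both are needed to place image content in a 3D view.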

Depth image

The RealSense sensor also captures depth data. As with the RGB images, the depth images are associated with the frame number at which they were captured, but they are logged as DepthImage objects.

rr.log(
    "realsense/depth/image",
    rr.Pinhole(
        resolution=[depth_intr.width, depth_intr.height],
        focal_length=[depth_intr.fx, depth_intr.fy],
        principal_point=[depth_intr.ppx, depth_intr.ppy],
    ),
    timeless=True,
)

rr.set_time_sequence("frame_nr", frame_nr)
rr.log("realsense/depth/image", rr.DepthImage(depth_image, meter=1.0 / depth_units))
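
The `meter` argument tells Rerun how many raw depth units make up one meter. The sketch below shows the arithmetic, assuming `depth_units` is the sensor's depth scale in meters per raw unit. The value 0.001 and the tiny 2x2 array are made-up sample data, not output from a real device.

```python
import numpy as np

# Assumption: the sensor reports its depth scale in meters per raw unit.
# 0.001 means one raw unit equals one millimeter.
depth_units = 0.001

# Made-up 2x2 raw depth frame in 16-bit sensor units.
raw_depth = np.array([[0, 500], [1000, 2500]], dtype=np.uint16)

# Number of raw units per meter; this is the value passed as `meter`.
meter = 1.0 / depth_units

# Dividing the raw values by `meter` yields depth in meters.
depth_in_meters = raw_depth.astype(np.float32) / meter
```

Passing the scale to DepthImage rather than converting the array yourself lets you log the sensor's raw integer data unchanged.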

Join us on GitHub

rerun-io/rerun

Visualize streams of multimodal data. Fast, easy to use, and simple to integrate. Built in Rust using egui.

Build time aware visualizations of multimodal data

Use the Rerun SDK (available for C++, Python and Rust) to log data like images, tensors, point clouds, and text. Logs are streamed to the Rerun Viewer for live visualization or to file for later use.

A short taste

import rerun as rr  # pip install rerun-sdk

rr.init("rerun_example_app")

rr.connect()  # Connect to a remote viewer
# rr.spawn()  # Spawn a child process with a viewer and connect
# rr.save("recording.rrd")  # Stream all logs to disk

# Associate subsequent data with 42 on the "frame" timeline
rr.set_time_sequence("frame", 42)

# Log colored 3D points to the entity at `path/to/points`
rr.log("path/to/points", rr.Points3D(positions, colors=colors…

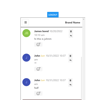
