Efficiency and control matter in AI applications. Cloudflare's AI Gateway sits between your app and the model provider and offers three main advantages: observability, caching and rate limiting.

Caching saves money: when a response is already cached, the request is served from Cloudflare's cache instead of going to the original model provider. The built-in rate limiting guards against abuse, so your application can scale without being overwhelmed by excessive requests that burn through your wallet.

I use it with the OpenAI API, but the Gateway also supports Cloudflare Workers AI, Azure OpenAI, HuggingFace and Replicate. There is also a Universal Endpoint that lets you set up a fallback provider for when a request fails.
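To make the fallback idea concrete, here is a rough sketch of the request body the Universal Endpoint accepts, as I understand it from the docs: a JSON array of provider steps that the gateway tries in order. The helper name `buildFallbackChain`, the model choices and the token parameters are my own assumptions for illustration, so check the current docs before relying on the exact field names.

```typescript
// One step in the fallback chain; the gateway is expected to try steps in order.
interface ProviderStep {
  provider: string; // provider slug, e.g. "openai" or "workers-ai"
  endpoint: string; // provider-specific path or model id
  headers: Record<string, string>;
  query: Record<string, unknown>; // body forwarded to the provider
}

type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

// Hypothetical helper: OpenAI first, Workers AI as the fallback.
function buildFallbackChain(
  messages: ChatMessage[],
  openaiKey: string,
  cloudflareToken: string,
): ProviderStep[] {
  return [
    {
      provider: "openai",
      endpoint: "chat/completions",
      headers: {
        Authorization: `Bearer ${openaiKey}`,
        "Content-Type": "application/json",
      },
      query: { model: "gpt-3.5-turbo", messages },
    },
    {
      provider: "workers-ai",
      endpoint: "@cf/meta/llama-2-7b-chat-int8", // illustrative model choice
      headers: {
        Authorization: `Bearer ${cloudflareToken}`,
        "Content-Type": "application/json",
      },
      query: { messages },
    },
  ];
}
```

The resulting array would be sent as the JSON body of a POST to the gateway URL without a provider suffix (https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY).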

The Power of Caching

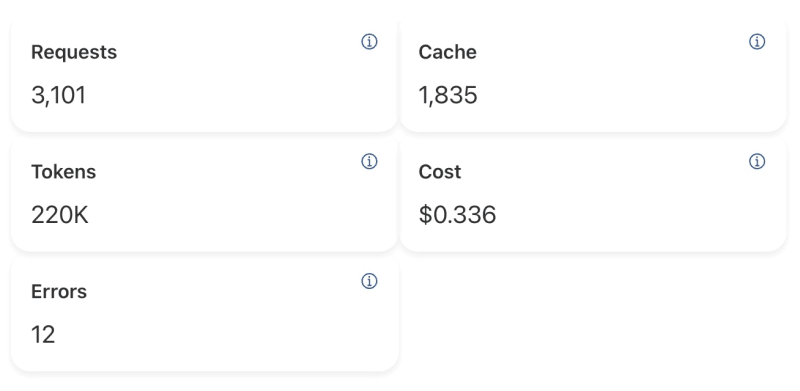

Cache duration can be set from one minute to one year. In my case, it caches everything from text embeddings to gpt-3.5 completions. In the screenshot above, taken from a non-production app, caching cut costs by 50% compared with calling OpenAI directly, and responses were faster too.
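The arithmetic behind that saving is simple enough to sketch. The function below is a back-of-the-envelope estimate, not anything the Gateway exposes; the request count and per-request price are made-up numbers, and it assumes cached responses cost nothing at the provider.

```typescript
interface CostEstimate {
  withoutCache: number;
  withCache: number;
  saved: number;
}

// Estimate provider spend when a fraction of requests is served from cache.
// Assumes every request costs the same and cache hits are free at the provider.
function estimateCost(
  totalRequests: number,
  cacheHitRate: number, // between 0 and 1
  costPerRequest: number, // any currency unit
): CostEstimate {
  if (cacheHitRate < 0 || cacheHitRate > 1) {
    throw new RangeError("cacheHitRate must be between 0 and 1");
  }
  const billedRequests = totalRequests * (1 - cacheHitRate);
  const withoutCache = totalRequests * costPerRequest;
  const withCache = billedRequests * costPerRequest;
  return { withoutCache, withCache, saved: withoutCache - withCache };
}

// Hypothetical numbers: 10,000 requests at 0.25 cents each, half served from cache.
const estimate = estimateCost(10_000, 0.5, 0.25);
console.log(estimate);
```

With a 50% hit rate, as in my screenshot, only half of the requests reach the provider, so the bill is halved.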

Rate-limit your Wallet

Every time you release an app into production, you wonder whether some bad actor will run you into the ground. The rate-limiting feature lets you control the frequency and volume of requests made to your app, avoiding unnecessary charges and misuse of resources. Limits can be set over a period from 1 minute to 1 hour, using either a fixed or a sliding window.
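If the difference between fixed and sliding windows is unclear, the toy limiters below may help. They are a minimal in-memory sketch of the two general techniques and say nothing about how Cloudflare implements them; the class names and the explicit `nowMs` argument are my own choices to keep the example deterministic.

```typescript
// Fixed window: the counter resets at every window boundary.
class FixedWindowLimiter {
  private limit: number;
  private windowMs: number;
  private windowStart = 0;
  private count = 0;

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  allow(nowMs: number): boolean {
    const currentWindow = Math.floor(nowMs / this.windowMs) * this.windowMs;
    if (currentWindow !== this.windowStart) {
      this.windowStart = currentWindow; // a new window begins, start counting again
      this.count = 0;
    }
    if (this.count >= this.limit) return false;
    this.count += 1;
    return true;
  }
}

// Sliding window: counts accepted requests in the trailing windowMs.
class SlidingWindowLimiter {
  private limit: number;
  private windowMs: number;
  private timestamps: number[] = [];

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  allow(nowMs: number): boolean {
    const cutoff = nowMs - this.windowMs;
    // Forget requests that have fallen out of the trailing window.
    this.timestamps = this.timestamps.filter((t) => t > cutoff);
    if (this.timestamps.length >= this.limit) return false;
    this.timestamps.push(nowMs);
    return true;
  }
}
```

With a limit of 2 per minute, the fixed window lets a client send 2 requests just before a boundary and 2 more right after it, while the sliding window keeps rejecting until the earlier requests are more than a minute old.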

Observability

With the OpenAI API alone, observability is very limited. The Gateway's real-time logs are bliss, and you can turn them on or off as you like.

Getting Started

Visit Cloudflare's AI Gateway Docs. If you use the OpenAI NPM package, it is as easy as setting baseURL.

import OpenAI from 'openai';

const openai = new OpenAI({
  apiKey: 'my api key', // defaults to process.env["OPENAI_API_KEY"]
  // Replace ACCOUNT_TAG and GATEWAY with your Cloudflare account tag and gateway name
  baseURL: "https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/openai"
});
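If you would rather not hard-code the URL, a small helper can assemble it from its parts. This is just a convenience sketch following the URL pattern above; `gatewayBaseUrl` is a hypothetical name, not part of any SDK.

```typescript
// Build the provider-specific gateway URL from its three path segments.
function gatewayBaseUrl(
  accountTag: string,
  gatewayName: string,
  provider: string, // e.g. "openai"
): string {
  const path = [accountTag, gatewayName, provider]
    .map((segment) => encodeURIComponent(segment))
    .join("/");
  return `https://gateway.ai.cloudflare.com/v1/${path}`;
}

// Placeholders as in the snippet above; pass the result as baseURL.
const baseURL = gatewayBaseUrl("ACCOUNT_TAG", "GATEWAY", "openai");
```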
