Chances are you are familiar with microservices architecture. Even if you do not work in such an environment, you have probably come across the idea of services running in a network and communicating with each other. This architectural approach comes with certain challenges. When our service makes network calls, it might run into timeouts of a downstream service. Not handling those timeouts or errors lets pending calls pile up and can lead to processing or memory overload. To mitigate this, we can apply a pattern borrowed from the world of electrical hardware: the circuit breaker.

Why you would want a circuit breaker in place

The circuit breaker pattern is an architectural pattern that makes a specific function call fault tolerant and robust. It is used in distributed systems where remote or network calls take place. Its main goal is to detect quickly that a downstream service is unhealthy and to stop calling it, thus avoiding memory or processing overload. You might know the term from electrical switches, where a circuit breaker protects an electrical circuit from damage caused by an overload or a short circuit.

If you are dealing with a high volume of traffic that depends on a certain downstream service, you should consider this pattern to improve your system’s overall stability and end user experience.

How does it work?

The concept of a circuit breaker is fairly simple: whenever a downstream service or function stops working, our circuit breaker "trips" and stops calling it over and over until it works again. Meanwhile all attempted calls result in a specified error.

To apply this pattern effectively in software, we need three building blocks:

- failure monitoring

- the circuit state machine

- error handling

To illustrate the power of the circuit breaker pattern, we will implement a simple example that focuses on the circuit state machine. We will outline a very simple failure monitoring strategy, trigger the circuit state machine to “trip” and point out some real-world use cases. The respective code for the state machine we are building can be found here.

I do not recommend using this code in a production-like context. There are already battle-tested libraries that implement this pattern. For Go, you can check out gobreaker or, if you are looking for an HTTP client with the respective functionality, have a look at Heimdall.

Overview

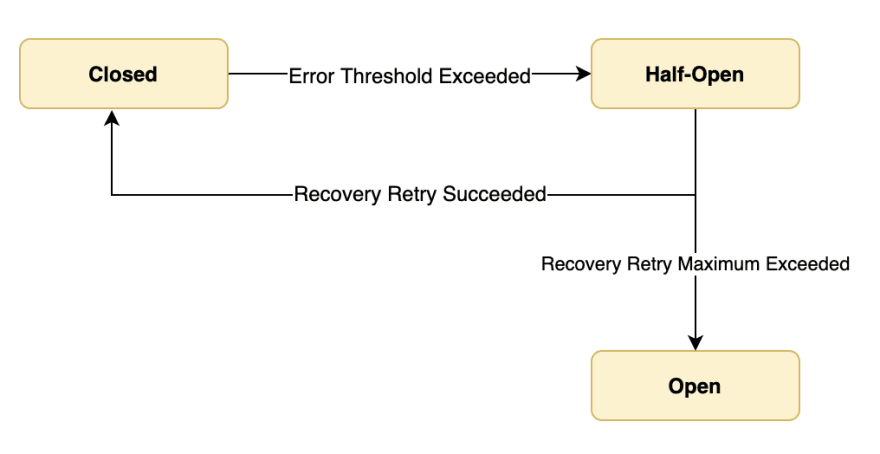

Before we dive into the hands-on action, let’s have a look at a visual representation of this pattern.

The circuit breaker can have three different states: closed, open and half-open. The default state is closed. While closed, the circuit breaker forwards all requests to the wrapped function or downstream service and monitors the errors they return. When the circuit breaker is open, all incoming requests return an error immediately without reaching the service. In our implementation, however, the circuit breaker does not switch from closed to open directly: it first switches to a half-open state, in which it tries to heal itself.
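The transitions described above can be sketched as a small pure function. This is only an illustration under assumptions: the `Event` type, its three values and the `next` function are hypothetical names that do not appear in the article's code, and the `State` constants are repeated from the implementation further down so that the snippet compiles on its own.

```go
package main

// State mirrors the states used in this article.
type State int

const (
	Closed   State = 1
	HalfOpen State = 2
	Open     State = 3
)

// Event is a hypothetical type naming the things that can move the breaker.
type Event int

const (
	ThresholdExceeded Event = iota // too many consecutive errors while closed
	ProbeSucceeded                 // a recovery probe returned no error
	RetriesExhausted               // recovery gave up after too many probes
)

// next returns the state that follows s when event e occurs.
// Any combination not listed leaves the state unchanged.
func next(s State, e Event) State {
	switch {
	case s == Closed && e == ThresholdExceeded:
		return HalfOpen
	case s == HalfOpen && e == ProbeSucceeded:
		return Closed
	case s == HalfOpen && e == RetriesExhausted:
		return Open
	default:
		return s
	}
}
```

Note that in this variant nothing leads out of the open state: once recovery has failed, the breaker stays open until something resets it from the outside.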

To keep things simple, we will use a basic failure monitoring strategy: we want our circuit to trip when a call returns a certain number of consecutive errors.

The circuit breaker pattern will look like this:

Implementation

Now, let’s see how this might be implemented in code. First, we define our different states.

    type State int

    const (
        Closed   State = 1
        HalfOpen State = 2
        Open     State = 3
    )
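Because `State` is a plain integer, printing it yields `1`, `2` or `3`. A possible addition, not part of the original code, is a `String` method so that alerts and logs show readable names. The sketch below repeats the type and constants so it compiles on its own; the label texts are my own choice.

```go
package main

import "fmt"

// State and its constants are repeated from the article.
type State int

const (
	Closed   State = 1
	HalfOpen State = 2
	Open     State = 3
)

// String implements fmt.Stringer, so fmt.Printf("%v", state) prints a
// readable label instead of the underlying number.
func (s State) String() string {
	switch s {
	case Closed:
		return "closed"
	case HalfOpen:
		return "half-open"
	case Open:
		return "open"
	default:
		// Covers the zero value 0, which is none of the three states.
		return fmt.Sprintf("unknown(%d)", int(s))
	}
}
```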

Our circuit breaker should take a function and return that function’s result, typed as an empty interface, together with an error. Therefore, we define a matching interface.

    type CircuitBreaker interface {
        Execute(func() (interface{}, error)) (interface{}, error)
    }

The next step is to define the variables our failure monitoring strategy needs. As stated above, the circuit breaker should trip once a certain error threshold is exceeded. So let’s add a strategy that holds the threshold, a counter for our consecutive errors, and the current state. The breaker also gets a name, which the alert message uses later, and the interface is extended with getters for the state and the name.

    type Strategy struct {
        threshold int // consecutive errors tolerated before the breaker trips
    }

    type circuitBreaker struct {
        name              string // used in alert messages
        strategy          *Strategy
        state             State
        consecutiveErrors int
    }

    type CircuitBreaker interface {
        Execute(func() (interface{}, error)) (interface{}, error)
        GetState() State
        GetName() string
    }
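One detail worth spelling out: the state constants start at 1, so a zero-value `circuitBreaker` has state 0, which is neither closed, half-open nor open. A small constructor avoids that. The following is a minimal sketch, assuming a `name` field on the struct; `newCircuitBreaker` and its validation rule are hypothetical and not taken from the original repository.

```go
package main

import "errors"

type State int

const (
	Closed   State = 1
	HalfOpen State = 2
	Open     State = 3
)

type Strategy struct {
	threshold int
}

type circuitBreaker struct {
	name              string // assumed field, used by GetName and alerts
	strategy          *Strategy
	state             State
	consecutiveErrors int
}

// newCircuitBreaker (hypothetical) returns a breaker that starts closed.
// Setting the state explicitly matters because the zero value of State
// is 0, which matches none of the defined states.
func newCircuitBreaker(name string, threshold int) (*circuitBreaker, error) {
	if threshold < 0 {
		return nil, errors.New("threshold must not be negative")
	}
	return &circuitBreaker{
		name:     name,
		strategy: &Strategy{threshold: threshold},
		state:    Closed,
	}, nil
}

// GetState and GetName are the two getters named in the interface.
func (c *circuitBreaker) GetState() State { return c.state }
func (c *circuitBreaker) GetName() string { return c.name }
```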

For now, this is enough to make the circuit breaker switch from closed to open whenever our threshold is exceeded. Therefore we need to count the consecutive errors. We will also send an alert whenever our state has switched to open to signal that our function is not healthy. To keep things simple, our alert will just be a print of the open state to the console.

Here is what our Execute function might look like.

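    // Needs the errors and fmt packages.
    func (c *circuitBreaker) Execute(f func() (interface{}, error)) (interface{}, error) {
        switch c.state {
        case Closed:
            res, err := f()
            if err != nil {
                c.handleError(f)
            }
            // Return here so that f is not called a second time below.
            return res, err
        case Open:
            // Fail fast without calling f; the printed line is our "alert".
            message := fmt.Sprintf("%v circuit breaker open", c.name)
            fmt.Printf("ALERT: %v\n", message)
            return nil, errors.New(message)
        }
        // Unknown state: pass the call through unchanged.
        return f()
    }

    // handleError counts the failure and trips the breaker once the
    // threshold is exceeded. f is unused for now; recovery will need it.
    func (c *circuitBreaker) handleError(f func() (interface{}, error)) {
        c.consecutiveErrors++
        if c.consecutiveErrors > c.strategy.threshold {
            c.state = Open
        }
    }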

This is basically all you need to reproduce the behaviour of an electrical circuit breaker in code.
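To see the effect, the following sketch sends a series of failing calls through a condensed version of the breaker and counts how many of them actually reach the downstream function. It is self-contained and rests on a few assumptions: `simulateOutage` is a hypothetical helper, the struct carries a `name` field, and the alert print is left out to keep the output quiet.

```go
package main

import (
	"errors"
	"fmt"
)

type State int

const (
	Closed   State = 1
	HalfOpen State = 2
	Open     State = 3
)

type Strategy struct{ threshold int }

type circuitBreaker struct {
	name              string
	strategy          *Strategy
	state             State
	consecutiveErrors int
}

// Execute is a condensed form of the closed/open logic from the article.
func (c *circuitBreaker) Execute(f func() (interface{}, error)) (interface{}, error) {
	if c.state == Open {
		// Fail fast: f is not called at all.
		return nil, fmt.Errorf("%v circuit breaker open", c.name)
	}
	res, err := f()
	if err != nil {
		c.consecutiveErrors++
		if c.consecutiveErrors > c.strategy.threshold {
			c.state = Open
		}
	}
	return res, err
}

// simulateOutage (hypothetical helper) sends `attempts` calls to a function
// that always fails and returns how many calls actually reached it.
func simulateOutage(threshold, attempts int) int {
	cb := &circuitBreaker{
		name:     "demo",
		strategy: &Strategy{threshold: threshold},
		state:    Closed,
	}
	reached := 0
	failing := func() (interface{}, error) {
		reached++
		return nil, errors.New("downstream timeout")
	}
	for i := 0; i < attempts; i++ {
		_, _ = cb.Execute(failing)
	}
	return reached
}
```

With a threshold of 2, `simulateOutage(2, 10)` returns 3: the third failure exceeds the threshold and trips the breaker, and the remaining seven calls are rejected without touching the failing function.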

Once the breaker has tripped, every subsequent call results in an immediate error without even calling the original function. In a distributed system, this keeps you from stacking up calls to a downstream service and risking processing or memory overload. However, the circuit breaker now stays open forever: once our downstream service is working again, we would have to switch the state back manually. To avoid that, we can implement a simple recovery mechanism. With Go’s fairly simple approach to concurrency, we can start a goroutine that checks whether the function we want to execute is still returning an error.

To enable this, we update our strategy to hold a retryMax counter and a retryInterval (in seconds), which specify how often and at what interval we check whether the service is up again.

    type Strategy struct {
        threshold     int
        retryInterval int // seconds to wait between recovery attempts
        retryMax      int // recovery attempts allowed before giving up
    }
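These two values bound how long the breaker can stay half-open. As a rough sketch, assuming the recovery loop shown further down (it sleeps once per probe and gives up once the retry counter exceeds `retryMax`, which allows `retryMax + 1` probes) and a non-negative `retryMax`, the worst case can be computed like this. The three helper methods are hypothetical additions, not part of the original code.

```go
package main

import "time"

type Strategy struct {
	threshold     int
	retryInterval int // seconds between recovery probes
	retryMax      int
}

// probeInterval converts the integer seconds into a time.Duration.
func (s Strategy) probeInterval() time.Duration {
	return time.Duration(s.retryInterval) * time.Second
}

// maxProbes is the number of probes sent before recovery gives up.
// The loop stops when retries > retryMax, so retries 0..retryMax all probe.
func (s Strategy) maxProbes() int {
	return s.retryMax + 1
}

// worstCaseHalfOpen is the time spent sleeping if every probe fails.
// It ignores the time the probes themselves take.
func (s Strategy) worstCaseHalfOpen() time.Duration {
	return time.Duration(s.maxProbes()) * s.probeInterval()
}
```

For example, `Strategy{retryInterval: 2, retryMax: 4}` allows five probes and keeps the breaker half-open for at most ten seconds of waiting before it opens for good.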

Now let’s add the new state to our Execute function and implement the error handling for the half-open state.

    // Needs the errors, fmt and time packages.
    func (c *circuitBreaker) Execute(f func() (interface{}, error)) (interface{}, error) {
        switch c.state {
        case Closed:
            res, err := f()
            if err != nil {
                c.handleError(f)
                return res, err
            }
            c.handleSuccess()
            // Return here so that f is not called a second time below.
            return res, nil
        case HalfOpen:
            // Fail fast while the recover goroutine probes the service.
            return nil, errors.New("circuit half open. trying to recover")
        case Open:
            message := fmt.Sprintf("%v circuit breaker open", c.name)
            fmt.Printf("ALERT: %v\n", message)
            return nil, errors.New(message)
        }
        // Unknown state: pass the call through unchanged.
        return f()
    }

    func (c *circuitBreaker) handleError(f func() (interface{}, error)) {
        c.consecutiveErrors++
        if c.consecutiveErrors > c.strategy.threshold {
            c.state = HalfOpen
            go c.recover(f)
        }
    }

    func (c *circuitBreaker) handleSuccess() {
        c.consecutiveErrors = 0
    }

    // recover probes f every retryInterval seconds while the breaker is half-open.
    // Note: it shares state and consecutiveErrors with Execute without locking;
    // guard both with a mutex before using this from several goroutines.
    func (c *circuitBreaker) recover(f func() (interface{}, error)) {
        retries := 0
        for c.state == HalfOpen {
            // Open circuit breaker when recovering fails
            if retries > c.strategy.retryMax {
                c.state = Open
                return
            }
            time.Sleep(time.Second * time.Duration(c.strategy.retryInterval))
            // Close the circuit and reset the counter if the probe succeeds.
            _, err := f()
            if err == nil {
                c.state = Closed
                c.consecutiveErrors = 0
            }
            retries++
        }
    }

That’s it! Our circuit breaker can now heal itself by checking whether the given function still returns errors. Instead of switching to the open state immediately, we switch to half-open and start a goroutine which executes the recover function. As long as the retryMax threshold is not exceeded, it keeps calling the service asynchronously to check whether it is still returning errors. Meanwhile, the Execute function keeps returning immediate errors to the consumer of the original function, thus protecting our service from overload. If the retryMax threshold is exceeded, we set the state to open and send the alert we configured before. If our function stops returning errors, we reset the state to closed and consecutiveErrors to 0. If you want to see this in action, you can have a look at the tests in the GitHub repository.
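One caveat for anything beyond a demo: the recovery goroutine and the callers of Execute read and write the state and the error counter at the same time without synchronization, which is a data race in Go. The sketch below shows one possible way to guard the transitions with a mutex. It is not the article's implementation; `safeBreaker`, `recordFailure`, `finishRecovery` and `countTrips` are hypothetical names, and the probing loop itself is left out.

```go
package main

import "sync"

type State int

const (
	Closed   State = 1
	HalfOpen State = 2
	Open     State = 3
)

// safeBreaker keeps state and counter behind a single mutex.
type safeBreaker struct {
	mu                sync.Mutex
	threshold         int
	state             State
	consecutiveErrors int
}

// recordFailure counts a failure and reports whether THIS call moved the
// breaker to half-open. Only that caller should start the recovery goroutine.
func (b *safeBreaker) recordFailure() bool {
	b.mu.Lock()
	defer b.mu.Unlock()
	if b.state != Closed {
		return false
	}
	b.consecutiveErrors++
	if b.consecutiveErrors > b.threshold {
		b.state = HalfOpen
		return true
	}
	return false
}

// finishRecovery applies the outcome of the recovery loop.
func (b *safeBreaker) finishRecovery(healthy bool) {
	b.mu.Lock()
	defer b.mu.Unlock()
	if b.state != HalfOpen {
		return
	}
	if healthy {
		b.state = Closed
		b.consecutiveErrors = 0
	} else {
		b.state = Open
	}
}

// countTrips (hypothetical helper) reports n failures concurrently and
// returns how many callers were told to start a recovery goroutine.
func countTrips(threshold, n int) int {
	b := &safeBreaker{threshold: threshold, state: Closed}
	var wg sync.WaitGroup
	var mu sync.Mutex
	trips := 0
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			if b.recordFailure() {
				mu.Lock()
				trips++
				mu.Unlock()
			}
		}()
	}
	wg.Wait()
	return trips
}
```

However many goroutines report failures at once, `countTrips(2, 50)` returns 1, so only a single recovery goroutine would be started per outage.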

By now, you should have a good understanding of how this pattern can help you in a distributed system, and you might consider it the next time you are dealing with high-throughput systems. If you have any questions or any kind of feedback, feel free to leave a comment, contact me or follow me on Twitter.

Top comments (6)

Great blog! I’ve dealt with the circuit breaker pattern in the context of JavaScript; it’s cool to see it from the perspective of Go. I would recommend checking out x-state if you’re interested; it’s a NodeJS framework that handles state. Event-driven development for managing state machines is very powerful and might simplify the circuit breaker implementation, in other words becoming more reactive to change rather than constantly polling and checking thresholds. If you look up microservices choreography vs orchestration, there are a couple of good blogs about implementing a reactive microservices architecture :)

Thanks! This is definitely an oversimplified example which I used for education, but re-building this pattern with an event-driven pattern sounds cool and interesting. I might write another article on that :)

Cool! Looking forward to this demo :) Let me know if you want to collab on it; I can pitch in with some ideas from the event-driven perspective!

Hey nice article, I also wrote about Circuit Breaker Pattern, dev.to/boxpiperapp/circuit-breaker.... Do check it out too.

Hey, this looks cool! I like all the relevant resources in it! Keep the good work up :)

Thanks for that. Will be rolling out more articles soon. 👋