I'm on a journey to become a better software developer by reducing the number of defects in my code. The Personal Software Process (PSP) is one of the few proven ways to achieve ultra-low defect rates. I did a deep dive on it over the last few months. And in this post I'm going to tell you everything you need to know about PSP.

The Promise of the Personal Software Process (PSP)

Watts Humphrey, the creator of PSP/TSP, makes extraordinary claims about the defect rates and productivity that individuals and teams can achieve by following his processes. He wrote a couple of books on the topic, but the one I'm writing about today is PSP: A Self-Improvement Process for Software Engineers (2005).

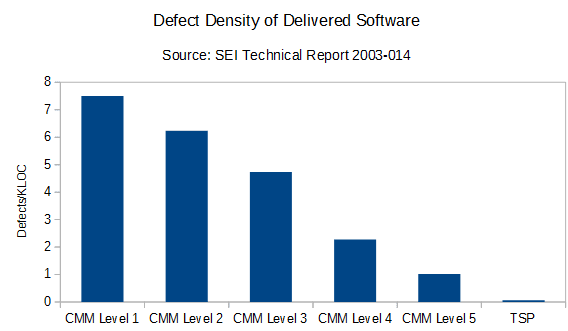

This chart shows a strong correlation between the formality with which software is developed and the number of defects in the delivered software. As you can see, PSP/TSP delivered a shocking 0.06 defects/KLOC, which is about 100 times fewer defects than your average organization hovering around CMM level 2 (6.24 defects/KLOC). Impressive, right?
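The "100 times" figure is just the ratio of the two defect densities on the chart. Here is a minimal sketch of the arithmetic, assuming defect density means defects per thousand lines of code (KLOC). The 50,000-line product is hypothetical.

```python
def defects_per_kloc(defects: int, lines_of_code: int) -> float:
    """Defect density: defects per thousand lines of code (KLOC)."""
    return defects / (lines_of_code / 1000)


cmm_level_2 = 6.24  # defects/KLOC, figure quoted from the chart
psp_tsp = 0.06      # defects/KLOC, figure quoted from the chart

print(f"PSP/TSP ships about {cmm_level_2 / psp_tsp:.0f}x fewer defects")

# What those densities mean for a hypothetical 50,000-line product:
kloc = 50
print(f"CMM level 2: ~{cmm_level_2 * kloc:.0f} defects, PSP/TSP: ~{psp_tsp * kloc:.0f} defects")
```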

Humphrey further claims:

Forty percent of the TSP teams have reported delivering defect-free products. And these teams had an average productivity improvement of 78% (Davis and Mullaney 2003). Quality isn't free--it actually pays.

Bottom line: defects make software development slower than it needs to be. If you adopt PSP and get your team to adopt TSP, you can dramatically reduce defects and deliver software faster.

It seems counterintuitive, but Steve McConnell explains why reducing defect rates actually allows you to deliver software more quickly in this article: Software Quality at Top Speed

Of course, you can only get those gains if you follow a process like PSP. I'll explain more on that later, but first I want to take a small detour to tell you a story.

Imagine your ideal job

Imagine you work as a software developer for a truly enlightened company--I'm talking about something far beyond Google or Facebook or Amazon or whoever you think is the best at software development right now.

You are assigned a productivity coach

Now, your employer cares so much about developer productivity that every software developer in your company is assigned a coach. Here's how it works. Your coach sits behind you and watches you work every day (without being disruptive). He keeps detailed records of:

- time spent on each task

  - programming tasks are broken down into categories like requirements, requirements review, design, design review, coding, personal code review, testing, peer code review, etc.

  - non-programming tasks are also broken down into meaningful categories like meetings, training, administrative, etc.

- defects you inject and correct, logged and categorized

- anything else you think might be useful
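These records are roughly what PSP's time and defect recording logs capture. Here is a minimal sketch of those two logs, assuming you keep them in code rather than on paper forms or in a tool. The phase names, defect types, and the task name "checkout-fix" are illustrative, not Humphrey's exact categories.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class TimeEntry:
    task: str
    phase: str  # illustrative: "design", "design review", "code", "code review", "test"
    start: datetime
    stop: datetime
    interruption: timedelta = timedelta(0)  # time lost to meetings, chat, etc.

    @property
    def minutes(self) -> float:
        """Working time for this entry, excluding interruptions."""
        return (self.stop - self.start - self.interruption).total_seconds() / 60


@dataclass
class DefectEntry:
    task: str
    defect_type: str     # illustrative: "requirements", "design", "logic", "syntax"
    phase_injected: str  # phase in which the defect was introduced
    phase_removed: str   # phase in which it was found and fixed
    fix_minutes: float


def minutes_by_phase(entries: list[TimeEntry]) -> dict[str, float]:
    """Total working minutes per phase."""
    totals = defaultdict(float)
    for entry in entries:
        totals[entry.phase] += entry.minutes
    return dict(totals)


log = [
    TimeEntry("checkout-fix", "design", datetime(2024, 1, 8, 9, 0), datetime(2024, 1, 8, 10, 0)),
    TimeEntry("checkout-fix", "design review", datetime(2024, 1, 8, 10, 0),
              datetime(2024, 1, 8, 10, 40), interruption=timedelta(minutes=10)),
]
print(minutes_by_phase(log))  # {'design': 60.0, 'design review': 30.0}
```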

Your coach runs experiments on your behalf

After you go home, your coach runs your work products (requirements, designs, code, documentation, etc.) through several tools to extract even more data, such as the number of lines of code you added and modified. He combines that with data from your bug tracker and other sources and, once the results become statistically significant, turns it all into useful reports for your review.
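Counting "lines added and modified" is the kind of chore the coach takes off your hands. Here is a minimal sketch using Python's standard `difflib`. It assumes a counting rule of my own: lines in an inserted block count as added, and lines in a replaced block count as modified, with any extra new lines counted as added. PSP expects you to define your own counting standard, so treat this rule as one possible choice.

```python
import difflib


def added_and_modified(old: str, new: str) -> tuple[int, int]:
    """Return (added, modified) line counts between two versions of a file."""
    old_lines = old.splitlines()
    new_lines = new.splitlines()
    added = modified = 0
    matcher = difflib.SequenceMatcher(a=old_lines, b=new_lines, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "insert":
            added += j2 - j1
        elif tag == "replace":
            # Pair up old and new lines as "modified"; surplus new lines are "added".
            changed = min(i2 - i1, j2 - j1)
            modified += changed
            added += (j2 - j1) - changed
        # "equal" blocks are unchanged; "delete" blocks are not counted here.
    return added, modified


old = "a = 1\nb = 2\nprint(a + b)\n"
new = "a = 1\nb = 3\nc = 4\nprint(a + b + c)\n"
print(added_and_modified(old, new))  # (1, 2)
```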

Your coach's job is to help you discover how to be the very best programmer you can be. So, you can propose experiments and your coach will set everything up, collect the data, and present you with the results. Maybe you want to know:

- does test-first development work better for you than test-last development?

- are design reviews worth the effort?

- does pair programming work for you?

- do you actually get more done by working more than 40 hours a week?

- are your personal code reviews effective?

Those would be the kinds of experiments your coach would be happy to run. Or your coach might suggest you try an experiment that has been run by several of your colleagues with favorable outcomes. The important point is that your coach is doing all the data collection and analysis for you while you focus on programming.

Doesn't that sound great? Imagine how productive you could become.

Unfortunately this is the real world so you have to be your own coach

This is the essence of the Personal Software Process (PSP). So, in addition to developing software, you also have to learn statistics, propose experiments, collect data, analyze it, draw meaningful conclusions, and adjust your behavior based on what your experiments reveal.

What the Personal Software Process gets right

Humphrey gets to the root of a number of the problems in software development:

- projects are often doomed by arbitrary and often impossible deadlines

- the longer a defect is in the project, the more it will cost to fix it

- defects accumulate in large projects and lead to expensive debugging and unplanned rework in system testing

- debugging and defect repair times increase exponentially as projects grow in size

- a heavy reliance on testing is inefficient, time-consuming, and unpredictable

Here's an overview of his solution:

The objective, therefore, must be to remove defects from the requirements, the designs, and the code as soon as possible. By reviewing and inspecting every work product as soon as you produce it, you can minimize the number of defects at every stage. This also minimizes the volume of rework and the rework costs. It also improves development productivity and predictability as well as accelerating development schedules.
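One way to check whether you're really removing defects early is to look at where each logged defect was removed. Here is a minimal sketch, assuming a defect log of (phase injected, phase removed) pairs and an illustrative phase order. It reports the percentage of defects removed before a given phase. This is a simplification in the spirit of PSP's "yield" measure, not its exact definition.

```python
PHASES = ["requirements", "design", "design review", "code", "code review", "test", "production"]


def percent_removed_before(defects: list[tuple[str, str]], phase: str) -> float:
    """Percentage of logged defects removed in a phase earlier than `phase`.

    Each defect is a (phase_injected, phase_removed) pair.
    """
    cutoff = PHASES.index(phase)
    early = sum(1 for _injected, removed in defects if PHASES.index(removed) < cutoff)
    return 100 * early / len(defects)


# Hypothetical defect log for one task.
defect_log = [
    ("design", "design review"),
    ("code", "code review"),
    ("code", "test"),
    ("design", "production"),
]
print(percent_removed_before(defect_log, "test"))  # 50.0
```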

Humphrey convinced me that he's right about all aspects of the problem. He has data and it's persuasive. However, the prescription--what you need to do to fix the problem--is unappealing, as I'll explain in the next section.

Why almost nobody practices the Personal Software Process

PSP is too hard to follow for 99.9% of software developers. The discipline required to be your own productivity coach is beyond what most people can muster, especially in the absence of organizational support and sponsorship. Software development is already demanding work and now you need to add this whole other layer of thinking, logging, data analysis, and behavior changes if you're going to practice the Personal Software Process.

Most software developers just aren't going to do that unless you hold a gun to their heads. And if you do hold a gun to someone's head, PSP won't work at all. They'll just sabotage it or quit.

Here's a quote from Humphrey's book just to give you a taste for how detailed and process-driven PSP is:

The PSP's recommended personal quality-management strategy is to use your plans and historical data to guide your work. That is, start by striving to meet the PQI guidelines. Focus on producing a thorough and complete design and then document that design with the four PSP design templates that are covered in Chapters 11 and 12. Then, as you review the design, spend enough time to find the likely defects. If the design work took four hours, plan to spend at least two, and preferably three, hours doing the review. To do this productively, plan the review steps based in the guidelines in the PSP Design Review Script described in Chapter 9....
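The "PQI guidelines" in that quote refer to Humphrey's Process Quality Index, which turns a few of these ratios into one number between 0 and 1. Here is a minimal sketch of the five-component version of PQI as I understand it from the book. The five targets are design time at least equal to coding time, each review taking at least half the time spent on the thing reviewed, and low compile and unit-test defect densities. Each component is capped at 1. The example numbers are hypothetical. Notice that one component depends on a compile phase, which is part of why the stats get awkward later on.

```python
def pqi(design_min: float, design_review_min: float, code_min: float,
        code_review_min: float, compile_defects_per_kloc: float,
        test_defects_per_kloc: float) -> float:
    """Process Quality Index: the product of five components, each capped at 1."""
    components = [
        design_min / code_min,                 # design time vs. coding time
        2 * design_review_min / design_min,    # design review >= half of design time
        2 * code_review_min / code_min,        # code review >= half of coding time
        20 / (10 + compile_defects_per_kloc),  # 1.0 at 10 compile defects/KLOC or fewer
        10 / (5 + test_defects_per_kloc),      # 1.0 at 5 unit-test defects/KLOC or fewer
    ]
    result = 1.0
    for value in components:
        result *= min(1.0, value)
    return result


# Hypothetical task: 4 h design, 2 h design review (the ratio from the quote),
# 200 min coding, 60 min code review, 10 compile and 15 unit-test defects/KLOC.
print(round(pqi(240, 120, 200, 60, 10, 15), 2))  # 0.3
```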

It goes on from there...for about 150 pages. I can see how you could create 'virtually defect-free' software using this kind of process but it's hard for me to imagine a circumstance where you could get a room full of developers to voluntarily adopt such an involved process and apply it successfully.

But that's not the only barrier to adoption.

Additional barriers to adoption

The Personal Software Process is meant to be taught as a classroom course

The course is prohibitively expensive. So, the book is the only realistic option available to most people.

You'll find that reading the book, applying the process, figuring out how to fill out all the forms properly, and then analyzing your data all without the help of classmates or an instructor is hard.

The book assumes your current process is some version of waterfall

TDD doesn't play nice with the version of PSP you'll learn in the book. Neither does the workflow where you write a few lines, compile, run, and repeat until you're done. Both break the stats and measures you need to compute to get the feedback you require to track your progress. It's not impossible to come up with your own measures to get around these problems, but it's definitely another hurdle in your way.

Languages without a compile phase (Python, PHP, etc.) also mess up the stats in a similar way.

The chapters on estimating are of little value to agile/scrum practitioners

There's a whole estimating process in PSP that involves collecting data, breaking down tasks, and using historical data to make very detailed estimates about the size of a task that just don't matter that much in the age of agile/scrum. In fact, I didn't see any evidence that the Personal Software Process estimating methods are any better than just getting an experienced group of developers together to play planning poker.
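For reference, the estimating method in question, PROBE, is built on a linear regression of your actual sizes against your earlier estimates from past tasks. Here is a minimal sketch of just that regression step, using four hypothetical (estimated size, actual size) pairs in lines of code. The real method adds checks on whether your historical data is good enough to use regression at all, and those checks are left out here.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of y = b0 + b1 * x; returns (b0, b1)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b1 = sxy / sxx
    b0 = mean_y - b1 * mean_x
    return b0, b1


# Hypothetical history: estimated vs. actual size (LOC) for four past tasks.
estimated = [100, 200, 300, 400]
actual = [130, 250, 350, 490]

b0, b1 = fit_line(estimated, actual)
new_estimate = 250
print(f"projected size: {b0 + b1 * new_estimate:.0f} LOC")  # projected size: 305 LOC
```

Whether this beats planning poker is exactly the open question. The regression only corrects your own systematic bias, so it can't be better than the history you feed it.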

It's very difficult to collect good data on a real-world project

If you're going to count defects and lines of code, you need good definitions for those things. And at first glance, PSP seems to have a reasonably coherent answer. But when I actually tried to collect that data I was flooded by edge cases for which I had no good answers.

For example, a missing requirement caught in maintenance could take one hour to fix or it could take months, but you are supposed to record both as requirements defects along with their fix times. Then you use the arithmetic mean of the fix times in your stats. So that one major missing requirement could completely distort your stats, even if it is unlikely to be repeated.
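Here is how one outlier skews the mean fix time. This minimal sketch uses hypothetical fix times in minutes. The last entry stands in for a months-long missing-requirement fix (9,600 minutes is 160 working hours). The median barely moves, but switching to it would be your own adaptation.

```python
import statistics

# Hypothetical fix times (minutes) for requirements defects.
fix_minutes = [20, 35, 40, 60]
with_outlier = fix_minutes + [9_600]  # one months-long missing-requirement fix

print("without outlier:", statistics.mean(fix_minutes), statistics.median(fix_minutes))
print("with outlier:   ", statistics.mean(with_outlier), statistics.median(with_outlier))
```

One ordinary-sized defect plus one huge one is enough to multiply the mean by about fifty, while the median goes from 37.5 to 40.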

Garbage in, garbage out. Very concerning.

By the way, there's some free software you can use to help with the data collection and analysis for PSP. It hasn't been very useful in my experience, but it's probably better than the alternative, which is paper forms.

Your organization needs to be completely on board

Learning the Personal Software Process is a huge investment. I think I read somewhere that it will take the average developer about 200 hours of devoted study to get proficient with the PSP processes and practices. Not many organizations are going to be up for that.

PSP/TSP works because the people using it follow a detailed process. But most software development occurs at CMM Level 1 because the whole business is at CMM Level 1. I think it's pretty unlikely that a chaotic business is going to see the value in sponsoring a super-process-driven software development methodology on the basis that it will make the software developers more productive and increase the quality of their software.

You could argue that the first 'P' in PSP stands for 'personal' and that you don't need any organizational buy-in to do PSP on your own. And that may technically be true but that means you'll have to learn it on your own, practice it on your own, and resist all the organizational pressure to abandon it whenever management decides your project is taking too long.

Is the Personal Software Process (PSP) a waste of time then?

No, I don't think so. Humphrey nails the problems in software development. And PSP is full of great ideas. The reason most people won't be able to adopt the Personal Software Process--or won't even try--is the same reason people drop their New Year's resolutions to lose weight or exercise more by February: our brains resist radical change. There's a bunch of research behind this human quirk and you can read The Spirit of Kaizen or The Power of Habit if you want to learn more.

Humphrey comes at this problem like an engineer trying to make a robot work more efficiently and that's PSP's fatal flaw. Software developers are people, not robots.

So, here are some ideas for how you can get the benefits from the Personal Software Process (PSP) that are more compatible with human psychology.

1. Follow the recommendations without doing any of the tracking

Your goal in following PSP is to remove as many defects as possible as soon as possible. You definitely don't want any errors in your work when you give it to another person for peer review and/or system testing. Humphrey recommends written requirements, personal requirements review, written designs, personal design reviews, careful coding in small batches, personal code reviews, the development and use of checklists, etc.

You can do all that stuff without doing the tracking. He even recommends ratios of effort for different tasks that you could adopt. Will that get you to 0.06 defects/KLOC? No. But you might get 80% of the way there for 20% of the effort.

2. Adopt PSP a little bit at a time

To get around the part of your brain that resists radical change, you could adopt PSP over many months. Maybe you just adopt the recommendations from one chapter every month or two. Instead of investing 200 hours up front, you could start with 5-10 hours and see how that goes.

Or you could track just enough data to prove to yourself that method A is better than method B. Once you're satisfied, you could drop the tracking altogether. For example, if you wanted to know whether personal design reviews help you, you don't need to do all the PSP tracking all the time. You could set up an experiment: choose five tasks as controls and five tasks that get design reviews, run it for a week or two, stop tracking, and then decide whether to adopt design reviews based on what you learn.
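With only five tasks per group, an exact permutation test is one reasonable way to check whether a difference is more than luck. Here is a minimal sketch, assuming you count the defects that escaped to peer code review for each task. The counts are hypothetical. The test asks: if design reviews made no difference, how often would a random split of these ten tasks favor the review group at least this much?

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(control: list[float], treatment: list[float]) -> float:
    """One-sided exact permutation test of mean(control) - mean(treatment).

    Enumerates every way to split the pooled data into groups of the original
    sizes and returns the fraction of splits whose difference is at least as
    large as the observed one.
    """
    pooled = control + treatment
    k = len(control)
    observed = mean(control) - mean(treatment)
    at_least_as_extreme = 0
    total = 0
    for idx in combinations(range(len(pooled)), k):
        chosen = set(idx)
        group_a = [pooled[i] for i in idx]
        group_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        if mean(group_a) - mean(group_b) >= observed - 1e-12:  # tolerance for float noise
            at_least_as_extreme += 1
        total += 1
    return at_least_as_extreme / total


# Hypothetical defects escaping to peer review, per task.
without_review = [4, 6, 5, 7, 3]
with_review = [2, 3, 1, 4, 2]
print(f"p = {permutation_p_value(without_review, with_review):.3f}")
```

With ten tasks there are only 252 possible splits, so the smallest possible p-value is 1/252. An experiment this small can only detect large effects, which is fine if large effects are all you care about.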

3. Read the PSP book and then follow a process with a better chance of succeeding in the long run

Rapid Development by Steve McConnell is all about getting your project under control and delivering working software faster. McConnell, unlike Humphrey, doesn't ignore human psychology. In fact, he embraces it. I believe most teams would follow McConnell's advice long before they'd consider adopting the Personal Software Process (PSP).

Most of the advice in Rapid Development is aimed at the team or organization instead of the individual, which I think is the correct focus. PSP is aimed at the individual but I can't see how you're going to get good results with it unless nearly everyone working on your project uses it. For example, if everyone on your team is producing crappy software as quickly as they can, your efforts to produce defect-free software won't have much effect on the quality or delivery date of the finished software.

What I'm going to do

I develop e-commerce software on a team of two. My colleague and I have adopted a number of processes to ensure only high quality software makes it into production. And we've been successful at that; we've only had 4 critical (but easily fixed) defects and a handful of minor defects make it into production in the last year. Our problem is that we have quite a bit of rework because too many defects are making it to the peer code review stage.

I showed my colleague PSP and he wasn't excited to adopt it, especially all the tracking. But he was willing to add design reviews to our process. So we'll start there and improve our processes in little steps at our retrospectives--just like we've been doing.

Rod Chapman recorded a nice talk on PSP and I like his idea of "moving left". If you want to go faster and save more money, you should move your QA to the left--or closer to the beginning--of your development process. That sounds about right to me.

Additional resources

Here are some resources to help you learn more about PSP:

- Nice overview of PSP by Rod Chapman (video)

- Watts Humphrey speaking about TSP/PSP (video)

- A PSP case study supporting PSP (pdf)

- Research paper that casts doubt on the benefits of PSP (pdf)

- PSP: A Self-Improvement Process for Software Engineers (book)

- Link to the programming exercises for the book (website)

- Process Dashboard - a PSP support tool (website)

Takeaways

It's tough to recommend the Personal Software Process (PSP) unless you are building safety-critical software or you've got excellent organizational support and sponsorship for it. Most developers are just going to find the Personal Software Process overwhelming, frustrating, and not very compatible with the demands of their jobs.

On the other hand, PSP is full of good ideas. I know most of you won't adopt it. But that doesn't preclude you from using some of the ideas from PSP to improve the quality of your software. I outlined three alternate paths you could take to get some of the benefits of PSP without doing the full Personal Software Process (PSP). I hope one of those options will appeal to you.

Have you ever tried PSP? Would you ever try PSP? I'd love to hear your thoughts in the comments.

Top comments (28)

Wow! I don't think I'm going to read the book but I'm incredibly thankful I read this post.

Really great practical summary and review.

You're welcome, Ben.

Does any of that stuff ring true for you?

Do you have any idea what percentage of your time you spend on rework caused by defects or missing requirements?

My team and I definitely spend a good deal of time on re-work, but we also don't want to be bogged down by perfection.

So I don't think I have a good answer for you, but the wrong types of defects definitely keep lingering on and on and on.

Going open source was an effort in mitigating many of the worst case scenarios this method is trying to avoid. All our crappiest, moldiest code gets some fresh air to wipe out defects.

I'm genuinely interested in this idea of "not wanting to get bogged down by perfection" (I'm not trying to pick a fight).

I hear that a lot in our field but it seems to go against everything Steve McConnell talks about in Quality at Top Speed. And he's drawing his conclusions from some pretty good research. Humphrey makes an even stronger case with the research he cites in the quality chapter of the PSP book.

At my work, we've accepted that higher quality goes hand-in-hand with shorter schedules but just like you we don't have good data to back that up.

So, it feels like we're going slow but once code gets into production we rarely need to change it. And you guys feel like you're going fast but you "spend a good deal of time on re-work." And neither of our teams knows where our optimum is.

Do you have reason to believe that McConnell and Humphrey misinterpreted the research or that the research is wrong? Or do you accept the research but believe that getting functionality in front of users as soon as possible is more important for your team and you're willing to be less efficient to do that (sort of an MVP approach)?

I feel like I'm thinking more in terms of mitigating perfectionism when it is not needed. Might be more of a reflection of my team, but everyone needs more encouragement to ship than fear that they will be sloppy.

We still have code review and have good standards, but I've found I need to encourage shipping above some other qualities. That being said, I've talked to folks at conferences who seem to work in teams that have such a disregard for quality of work and quality of software that I don't think we're anywhere close to that end of the spectrum.

And yeah, getting things in front of users is often a necessary element. We have been burned before by trying to get usability just right before just getting things out there. You really learn so much more once it's out there.

What you can't do is move away to a new project after you've shipped the MVP. Shipping the thing that is supposed to be MVP and then moving onto other things is a big mistake. It means you end up shipping a bunch of half-done features and everything is low quality. MVP is step one, never the final step.

We have several of these perma-MVPs live on dev.to at the moment that we need to find the time to improve. Just today I shipped a fix to a bug which has been nagging me since about day one on the project. It was just unimportant enough to completely ignore for a long time even though it forced workarounds which were more complicated than the fix itself ended up being.

Thanks, Ben. I really like your answers.

I see now that you meant actual perfectionism and not "perfectionism" (which is sometimes code for not wanting people to do anything beyond getting a clean compile).

It just goes to show that teams and projects are different. I'm always trying to raise the bar, keep the code clean, write more tests, refactor, etc.

I've been burned too many times by releasing MVP-quality code and then something comes up and I'm unable to go back. So I've had to change my strategy. Instead of building the whole feature as an MVP, we build the essential part of the feature as production-quality code but don't build the back-end GUI or reports at all. If the feature fails we rip out the code and if it succeeds we build the back-end.

The business people never want us to go back and harden an MVP but they always think reports are a high priority.

I cast doubt on anything that uses the specious term "defect" to measure progress. It's one of those metrics that are easy to manipulate once you start tracking them. Add in the nonsensical KLOC and you have numbers that can be made to dance to whatever tune you want.

Additionally, the number of defects one finds is strongly related to the quality of testers one has. I don't care how awesome of a programmer you are, give it to the right testers and the issue reports will flow in.

Phrases like "PSP is too hard to follow for 99.9% of software developers" are condescending. If 99.9% of programmers can't follow a process, then there's something seriously wrong with the process and there's no point looking into it at all.

I have concerns about tracking "defects" and "LOC" too. I expressed some in my post and I held others back because the post was getting too long.

Humphrey was concerned about them as well. He knew these metrics are very open to manipulation and cautioned against using them in any way for rewards, punishments, promotion, or to compare programmers. They are solely intended for the individual programmer to track his or her progress, which should significantly reduce the incentive to manipulate them.

He also talks about choosing alternative measures if LOC doesn't suit your situation. But for most projects, LOC is probably the best measure, even if it's a crappy one.

The number of defects you find is strongly related to both the number of defects in the product and the quality and effort of your testers. That's true. Your PSP stats are only applicable to a single language on a single project. So for most projects over a reasonable amount of time, the tester efficacy should be relatively constant.

I really have trouble with these proxy measures and you've pointed out a bunch of potential problems. So the OCD side of me says we shouldn't use them if they are flawed.

On the other hand, I have very little insight into where I make my errors, what kinds of errors I make over and over again, how productive I am in any given week, my ratio of writing code to writing tests, how that affects the defect rates I discover in my code, etc.

So where does that leave us? I don't think the measures need to be perfect to help us find ways to improve, which is the whole point of PSP.

I stand by that statement. If I just give the book to 1,000 developers and say "do this from now on", maybe a couple will be doing it a year from now. The barriers to an individual adopting PSP are formidable. But everything lives in a context. PSP is meant to be practiced in an organization that's doing TSP. That means programmers practicing PSP get a coach, training, support, the time to actually do it, incentives aligned with producing quality software, etc. In that context, most of them will probably be doing PSP at the end of the year.

I have to say that this type of very complicated process strikes me as inherently problematic. In my experience, reducing defects is actually a fairly simple process consisting of two main steps:

Formal Analysis: If an algorithm is mathematical in nature, then it is amenable to a formal mathematical analysis. Cryptography is a nice clear case in point. If I were to come up with a new encryption algorithm, I'd write a paper describing the algorithm and I'd provide proofs of its characteristics. Then I'd publish the paper and offer it up for peer review and public scrutiny. Once the paper passed that step, the algorithm would presumably be eligible to be implemented in actual code. Of course, someone clever might later find a fundamental loophole in the algorithm (as opposed to any specific code), but that's true of everything. For example, crypto algorithms that rely on the difficulty of factoring large numbers into primes are based on the assumption that no efficient factoring algorithm exists, which most experts believe to be true, but no one has been able to prove. If someone finds such an algorithm, those crypto algorithms will become instantly obsolete. One can think of other situations where this applies. For example, let's say we have a system where multiple threads communicate in real time. Formally determining that the system will perform as expected given various starting assumptions is probably a good idea. I don't think one wants to hack something like that together piecemeal. ;)

Testing: If an algorithm or design is based on somewhat arbitrary business rules, then I would say up-front analysis is probably not very effective. In this kind of case, I think its effectiveness tends to drop off exponentially with time. If you have spent several days as a team trying to think of issues with a particular design, spending an additional month is unlikely to be the most efficient use of time. Instead, in such cases I recommend very thorough testing. We can test individual modules in isolation; we can develop end-to-end tests; we can do property-based testing; we can run performance tests; and so on... I find that thorough and careful testing will uncover problems much more quickly than just staring at a document and trying to think really hard about what may be wrong with it. So for business logic, I recommend trying to get something up and running as quickly as possible and then using all the applicable testing techniques to find issues with it. Then feed any problems that are found back into the design process. This may mean doing some significant refactoring of the design to fix an issue, or it may simply mean adding edge cases ad-hoc to the existing logic. The team has to decide what is appropriate. Even if this takes time, it's still more efficient than spending that time on analyzing a design that exists on paper.
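For readers who haven't seen property-based testing: instead of hand-picking inputs, you state properties that must hold for every input and check them against many generated ones. Here is a minimal hand-rolled sketch using only the standard library's `random` module. `apply_discount` is a hypothetical business rule. In practice a library such as Hypothesis does this more thoroughly, for example by shrinking a failing input to a minimal example.

```python
import random


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical rule: take `percent` off, rounding the discount down to whole
    cents; percentages outside 0..100 are clamped."""
    percent = max(0, min(100, percent))
    return price_cents - (price_cents * percent) // 100


def check_discount_properties(trials: int = 10_000, seed: int = 0) -> None:
    """Check properties that must hold for any price and any percentage."""
    rng = random.Random(seed)  # fixed seed so failures are reproducible
    for _ in range(trials):
        price = rng.randint(0, 10_000_000)
        percent = rng.randint(-50, 150)  # deliberately includes out-of-range values
        result = apply_discount(price, percent)
        assert 0 <= result <= price, (price, percent, result)
        if percent <= 0:
            assert result == price, (price, percent, result)
        if percent >= 100:
            assert result == 0, (price, percent, result)


check_discount_properties()
```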

I really don't believe anything more can be done to reduce defects in a general sense. Sometimes one can simplify things. For example, one can use a domain-specific language that isn't Turing-complete to make some potential problems go away. Another option is to use an off-the-shelf framework and plug one’s application-specific logic into it.

However, if one is doing normal programming in a general-purpose language, I believe one must simply rely on the above two methods as appropriate (and hire the best people one can find, needless to say).

Formal methods, yes. I 100% agree. If you can use formal methods to prove the properties of some algorithm, go for it.

However, testing is a super-expensive way to find defects. That's sort of the whole point of PSP: if you can reduce the number of defects you inject and find and remove the defects you do inject as soon as possible, you can drastically reduce the effort it takes to deliver high quality software. Finding a defect through testing, debugging it, fixing it, and then retesting is expensive.

The actual techniques Humphrey recommends aren't radical: collect requirements, review requirements, make a design, review your design, implement in code, personally review your code, peer review your code (or do an inspection), and then if you follow all those steps, you should have a lot fewer defects that need to be found and fixed during testing.

The part that's hard for people to swallow is all the tracking and analysis Humphrey wants you to do to prove that you're getting better over time. But you don't have to do that. You could just take it on faith that code reviews are a good idea, do them, and hope for the best.

We do that with all kinds of stuff in programming. For example, have you proven that pair programming is effective in your organization? If you have, you would be one of the few people who has gone to the trouble. The research is equivocal.

Let me quote straight from Humphrey (page 142 of the PSP book):

Testing just isn't a very efficient way to ensure the quality of your software. There's lots of research to back that up. Unless your software is safety-critical, it is very unlikely that you have anything near 100% statement coverage, never mind the modified condition/decision coverage (MC/DC) you'd need to be able to make any claims about quality.
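To make the coverage point concrete: for a decision like `a and b`, a single test with both conditions true executes every statement. MC/DC instead requires showing that each condition independently flips the outcome. Here is a minimal sketch that checks a test set against the unique-cause form of MC/DC for a hypothetical two-condition decision, `ship_order`.

```python
from itertools import combinations


def ship_order(in_stock: bool, paid: bool) -> bool:
    """Hypothetical decision with two conditions."""
    return in_stock and paid


def meets_mcdc(tests: list[tuple[bool, bool]]) -> bool:
    """True if, for each condition, some pair of tests differs only in that
    condition and produces different decisions (unique-cause MC/DC)."""
    n_conditions = 2
    for i in range(n_conditions):
        independent = any(
            all((t1[k] != t2[k]) == (k == i) for k in range(n_conditions))
            and ship_order(*t1) != ship_order(*t2)
            for t1, t2 in combinations(tests, 2)
        )
        if not independent:
            return False
    return True


print(meets_mcdc([(True, True)]))                                # False: statement coverage only
print(meets_mcdc([(True, True), (False, False)]))                # False: conditions move together
print(meets_mcdc([(True, True), (False, True), (True, False)]))  # True
```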

So, I'm not sure where you're coming from on that, Nested Software. Or have I misinterpreted your remarks (as happens from time to time)?

@bosepchuk, the part I disagree with, at least if there was no misunderstanding, is that basically every line of code written by everyone on the team should be subjected to analysis and code review. That's the impression I got about PSP, but maybe I got the wrong idea. I think that would be significantly less efficient than a more conventional approach combined with testing (unit testing and other methods), as well as adding some amount of analysis and code review where it matters most, and possibly adding things like pair programming into the mix as well.

As far as research goes, while I totally support research into software process, I think we must be skeptical of its actual applicability, given the current state of the art. To me, the fundamental problem is that if you do research in a very "clean" academic setting, there is a natural objection of "that's nice, but does it apply to the real world?" Then if you try to do research with real projects and organizations, there's the equally natural objection of "there are thousands of uncontrolled parameters here that could be polluting the results!" At the end of the day, I wouldn't be inclined to deeply trust any research into software process - even if I 100% agreed with its conclusions based on my own experience and intuition.

That said, I think there are probably many things we can agree on, even though our viewpoints may be somewhat divergent here:

When we write code as developers, we should be thinking hard about ways our code could go wrong, and we should try to generate automated tests for these possibilities as much as possible/reasonable. The idea here of course is to make sure the code works as expected today, but will also continue to work in regression.

As we write code, we should be thinking both about the immediate functionality we're developing, but we should also consider the bigger picture and make sure things we're doing fit into the broader context of the application logic being developed.

Practices like code and design review, as well as "real time" versions thereof like pair programming, can help to improve the quality of code, clean up weaknesses or bad habits, and foster communication among team members.

One thing I wonder about is whether the PSP really takes into account the expectations that people have of software today. More than ever software is a living, breathing thing. Many applications are online, and users want both bug fixes and features to be added more or less continuously. It's a far cry from the world of 1980s programming that PSP seems to have come out of in that sense...

Good points, Nested Software.

I think I need to clarify one point. Humphrey is actually encouraging us to follow a process, track its effectiveness, and do experiments to improve it over time. He makes it clear that the process he describes in the book is what works for him and is just a convenient starting place to have a discussion. But it's up to each programmer to find and do what works for them.

If you wanted to follow Humphrey's process, then he would agree with your idea that "every line of code written by everyone on the team should be subjected to analysis and code review."

We do that on my team and we find it catches a crazy number of problems before we put code in production. We also work in a legacy environment where much of the existing code cannot be reasonably covered with automated tests. So your mileage may vary.

Yes, research is a huge problem in our field for all the reasons you mentioned and more. Humphrey would encourage you to run your own experiments and figure out what works for you.

I absolutely agree with your three points.

I don't see any reason PSP would have trouble coping with the expectations of today's software. Humphrey was very much in favor of small changes.

Just out of curiosity, what kind of programming do you do?

Cheers.

I find that testing gets more expensive the further away it is from the developer. If a separate QA group finds issues, that's going to take more time and money to solve. If an end user finds issues, that's even worse. That's why I believe strongly in developers taking ownership of thoroughly testing their work before it ever moves on to these other stages. That includes automated tests of whatever kind is relevant, as well as just working with the software manually. As developers, we should be going through workflows on our own and trying to break our own software. That's actually a very fast way to find many of the issues that are likely to occur (when I find a problem like that, I make every effort to automate a test for it first so that it stays fixed in regression).

I personally find that monkeying around with an application like this is very efficient because it decouples one from thinking in terms of the code, and just focuses attention on the actual behaviour of the software. So I think this kind of developer testing is an efficient way to improve quality. It doesn't mean a QA team isn't needed, but hopefully it means the bugs they find will really be of a different order - things that are hard to reproduce; complex interactions or integrations of the technologies involved; or running the software in a bunch of different environments. In my experience, it's really not hard to miss problems when just looking at code, or looking at a design on paper.

I've mainly worked in enterprise software as well as full stack web development, along with some dabbling in embedded systems.

I 100% agree with your comments.

Lots of people have trouble "switching hats" from developer to tester to find problems with their own code. Going full black box is quite effective in that regard.

I will try to read the book, but as pointed out in the article, I don't think it's easy to implement.

I'm developing tools on my own and while it is tempting to implement PSP, the time required for analysis will be hard to find.

I hope this picks up steam and that tools get built to provide automatic analysis (a Node.js framework, etc.?).

Thanks for taking the time to write the article!

You're welcome.

I'm curious. What kind of tools are you building? What do they do?

Hi,

Nothing too exciting, basically kind of CMS tools for the office, hopefully in Node.JS :)

I am happy to stumble over this post JUST a day after I started thinking of ways to improve my whole "Programming Process".

My whole idea was revolving around the ability to dissect an application (that you need to write) into smaller parts (components) and give each part your 100%. Then at the end, you can glue all the parts together.

For each component, you need to run experiments to learn what you need to do or know to make it bulletproof! What I mean is, you need to make each component as good as it can get by using the BEST practices. Each component should be based on a whole lot of knowledge that you need to acquire.

For example, you can create a database in a very simple way, with no or few constraints. You can also build the same database with a lot of constraints, assuring integrity of data.
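To make that contrast concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `customer`/`invoice` schema is a hypothetical example, not something from the comment above: the loose version accepts an invoice with a negative amount for a customer that doesn't exist, while the constrained version rejects it.

```python
import sqlite3


def make_db(strict: bool) -> sqlite3.Connection:
    """Build a hypothetical customer/invoice schema, with or without constraints."""
    conn = sqlite3.connect(":memory:")
    if strict:
        # SQLite only enforces foreign keys when this pragma is enabled.
        conn.execute("PRAGMA foreign_keys = ON")
        conn.executescript("""
            CREATE TABLE customer (
                id    INTEGER PRIMARY KEY,
                email TEXT NOT NULL UNIQUE
            );
            CREATE TABLE invoice (
                id          INTEGER PRIMARY KEY,
                customer_id INTEGER NOT NULL REFERENCES customer(id),
                amount      REAL NOT NULL CHECK (amount >= 0)
            );
        """)
    else:
        # Same tables, no constraints: anything goes.
        conn.executescript("""
            CREATE TABLE customer (id INTEGER, email TEXT);
            CREATE TABLE invoice (id INTEGER, customer_id INTEGER, amount REAL);
        """)
    return conn


def try_insert_bad_invoice(conn: sqlite3.Connection) -> bool:
    """Try to insert a negative invoice for a nonexistent customer; True if accepted."""
    try:
        conn.execute(
            "INSERT INTO invoice (customer_id, amount) VALUES (?, ?)", (999, -5.0)
        )
        return True
    except sqlite3.IntegrityError:
        return False


if __name__ == "__main__":
    print("loose schema accepts bad row:", try_insert_bad_invoice(make_db(strict=False)))
    print("strict schema accepts bad row:", try_insert_bad_invoice(make_db(strict=True)))
```

The constraints cost a little more effort up front, but they move a whole class of data-integrity bugs from "discovered later in production" to "rejected at the moment of insertion."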

This is a tough one. We all need to work on it by ourselves, because, as you said, no one can afford to have a coach. But the result of doing this would be awesome!

You're welcome, robencom.

It sounds a little like you're talking about the concept of 'bounded contexts' in domain-driven design (DDD). Have you looked into that at all?

I will now. Thanks!

I actually had a grad-level class that used PSP. It was quite tedious, but the big takeaway for me was taking the time to do up-front design and refer to that design throughout. Like you, I can't really recommend full use of PSP, but it helped ingrain design discipline into every project I've worked on since then.

Serendipity. My coworker and I were discussing how stressful it was to be a software developer.

I had pointed out that being a doctor, or police officer, or politician, or pretty much any other profession was far more stressful than being a software developer. (In general. I believe stress reports by profession substantiate that software development is among the lowest-stress professions. Ripley's Believe It Or Not.)

The thing that we had noted was that software developers take on stress. We are invested. That's not a bad thing... unless it becomes harmful.

So please be aware of when our own predilections become harmful. Ease up on the throttle.

PSP, in moderation.

I really don't know how stressful our profession is compared to others. The CDC puts computer programmers at #8 for suicides. So we are between police officers (#6) and doctors (#12).

Source: ctvnews.ca/health/suicide-rates-hi...

The statistics aren't broken out by gender, so that could be skewing things: we know men kill themselves three times more often than women, and our profession is overwhelmingly skewed toward males.

My guess is that lots of the stress in our profession is caused by unrealistic expectations (both internal and external).

So, yes, I agree, don't stress yourself out over PSP or anything else if you can help it.

I read about PSP a couple of years ago. I would like to have an updated version for agile processes.

The idea of "build quality in" is nothing new (not even in 2005); for instance, it is part of lean and eXtreme Programming. Pair programming, TDD, and code reviews are examples of such practices.

About half of the time, I measure how long I need for a task (including interruptions) so I can compare it with my estimate. I then log this in an .xls spreadsheet. Now, for a new task that I estimate will take X hours, I can figure out that it might really take Y hours (or better: with a confidence of, let's say, 75%, it will take Y hours or less).
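One way to turn such a log into a "Y hours at 75% confidence" number is to look at the ratio of actual to estimated time across past tasks. The sketch below is an assumption, not the commenter's actual spreadsheet method: it uses a hypothetical log of (estimated, actual) hours, takes the nearest-rank 75th percentile of the actual/estimate ratios, and scales a new estimate by that factor.

```python
import math


def ratio_percentile(log, confidence=0.75):
    """Return the actual/estimate ratio that covers `confidence` of past tasks.

    `log` is a non-empty list of (estimated_hours, actual_hours) pairs.
    Uses the nearest-rank method: the smallest observed ratio such that at
    least `confidence` of the tasks had a ratio at or below it.
    """
    if not log:
        raise ValueError("need at least one logged task")
    ratios = sorted(actual / estimate for estimate, actual in log)
    rank = math.ceil(confidence * len(ratios))
    return ratios[max(rank, 1) - 1]


def adjusted_estimate(log, estimate_hours, confidence=0.75):
    """Scale a raw estimate X into Y, based on how past estimates turned out."""
    return estimate_hours * ratio_percentile(log, confidence)


if __name__ == "__main__":
    # Hypothetical log: (estimated hours, actual hours including interruptions).
    history = [(2, 3), (4, 4), (1, 2), (3, 4.5), (5, 6), (2, 2.5), (8, 12), (3, 3)]
    y = adjusted_estimate(history, 4)
    print(f"Estimate of 4 h -> plan for about {y:.1f} h at 75% confidence")
```

The nearest-rank percentile keeps the multiplier tied to a ratio that was actually observed. With only a handful of logged tasks the result will be noisy, so it is best treated as a rough guide that improves as the log grows.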

You are correct, 'building quality in' is not a new idea.

I referenced Humphrey's most recent book on PSP in this post but his first book on PSP is titled "A Discipline for Software Engineering" and it came out in 1995. And as you mentioned, quality thinking goes back a long way. I even studied Deming's work in university.

Have you learned anything interesting from your time tracking?

Maybe, but there are teams producing software with 100 x fewer defects than the average. There's plenty of room for improvement.