🚀 Journey into the AI Frontier: DeepSeek-R1 vs Llama 2 on Windows with Ollama

As an AI enthusiast, I recently embarked on an exciting exploration of two powerful language models: DeepSeek-R1 and Llama 2. Running these models locally on Windows using Ollama revealed fascinating insights into their capabilities, particularly in mathematical reasoning. Let me share my hands-on experience! 🤖

🛠️ Setup and Installation

🔹 Download Ollama

Ollama is available for macOS, Linux, and Windows. Follow these steps to install it:

1️⃣ Go to the official Ollama download page

🔗 Download Ollama: https://ollama.com/download

2️⃣ Select your operating system (macOS, Linux, Windows)

3️⃣ Click on the Download button

4️⃣ Install it following the system-specific instructions

Getting started with Ollama on Windows was surprisingly straightforward. I installed WSL2 (Windows Subsystem for Linux) first, though Ollama's native Windows build runs without it, and then pulled both models with two commands:

ollama pull deepseek-r1

ollama pull llama2

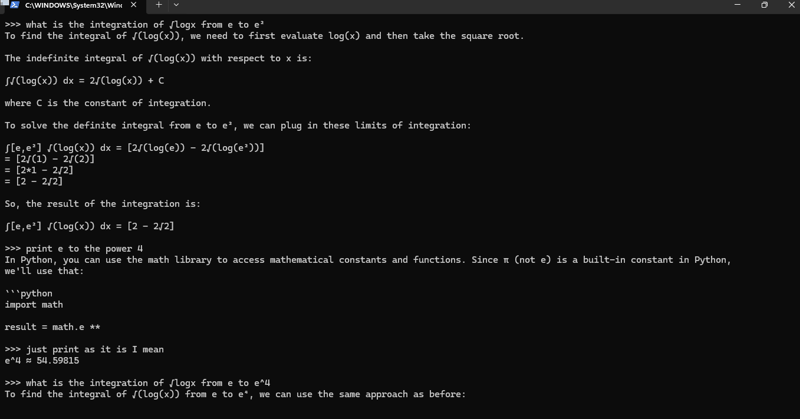
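Once both models are pulled, the same prompt can be sent to each one from a script instead of the interactive prompt. The sketch below is one way to do that, assuming Ollama's local server is listening on its default address (http://localhost:11434) and that the models are tagged deepseek-r1 and llama2. The helper names `build_payload`, `ask`, and `compare` are my own, not part of Ollama.

```python
import json
import urllib.request

# Assumed default address of the local Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(model: str, prompt: str) -> dict:
    """Build a non-streaming request body for the /api/generate endpoint."""
    return {"model": model, "prompt": prompt, "stream": False}


def ask(model: str, prompt: str, url: str = OLLAMA_URL) -> str:
    """Send one prompt to a locally running model and return its text reply."""
    body = json.dumps(build_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as reply:
        return json.loads(reply.read())["response"]


def compare(question: str, models=("deepseek-r1", "llama2"), ask_fn=ask) -> dict:
    """Ask every model the same question and collect the replies by model tag."""
    return {model: ask_fn(model, question) for model in models}
```

With Ollama running, calling `compare("Solve for x: 2x² + 5x - 12 = 0")` should return a dictionary that maps each model tag to its reply. The `ask_fn` parameter exists only so the comparison loop can be exercised without a live server.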

🧮 Mathematical Reasoning Face-off

To evaluate their mathematical prowess, I presented both models with identical problems. The results were eye-opening!

Problem 1: Quadratic Equation

Question: Solve for x: 2x² + 5x - 12 = 0

DeepSeek-R1's Response:

Let me solve this step by step:

1) Using the quadratic formula: x = [-b ± √(b² - 4ac)] / 2a

where a = 2, b = 5, and c = -12

2) Plugging in the values:

x = [-5 ± √(25 - 4(2)(-12))] / 2(2)

x = [-5 ± √(25 + 96)] / 4

x = [-5 ± √121] / 4

x = [-5 ± 11] / 4

3) Therefore:

x = (-5 + 11) / 4 = 6/4 = 1.5

or

x = (-5 - 11) / 4 = -16/4 = -4

Answer: x = 1.5 or x = -4

Llama 2's Response:

Using quadratic formula:

x = [-5 ± √(25 - 4(2)(-12))] / 4

x = [-5 ± √121] / 4

x = -4 or 1.5
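Both replies can be checked independently of either model. Here is a minimal sketch that applies the same quadratic formula in Python; the function name `solve_quadratic` is hypothetical, and it only handles the real-root case that this problem falls into.

```python
import math


def solve_quadratic(a: float, b: float, c: float) -> tuple[float, float]:
    """Return the real roots of ax² + bx + c = 0, the '+' root first."""
    discriminant = b * b - 4 * a * c
    if a == 0 or discriminant < 0:
        raise ValueError("expects a quadratic with real roots")
    root = math.sqrt(discriminant)
    return ((-b + root) / (2 * a), (-b - root) / (2 * a))


roots = solve_quadratic(2, 5, -12)
print(roots)  # (1.5, -4.0)
```

The discriminant is 25 + 96 = 121, so the square root is exactly 11 and the roots match what both models reported.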

🔍 Key Observations

Thinking Process 🧠

- DeepSeek-R1 consistently showed its work with clear, step-by-step explanations

- Llama 2 often jumped to conclusions, though usually correct ones

Presentation 📊

- DeepSeek-R1 formatted solutions with numbered steps and proper mathematical notation

- Llama 2 tended to be more concise but sometimes at the expense of clarity

Accuracy ✅

- Both models provided correct answers

- DeepSeek-R1's detailed approach made it easier to verify results

- Llama 2's brevity, while efficient, could leave some users wanting more explanation
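The accuracy check can also be automated rather than done by eye. The sketch below is one rough way to do it, assuming you pass in only the final answer line of a reply (for example "Answer: x = 1.5 or x = -4"). The helper names are hypothetical and the number parsing is deliberately simple: it treats any hyphen directly before a digit as a minus sign and would misread labels such as "x1".

```python
import re


def extract_numbers(answer_line: str) -> list[float]:
    """Pull every signed integer or decimal out of a final-answer line."""
    return [float(n) for n in re.findall(r"-?\d+(?:\.\d+)?", answer_line)]


def is_root(x: float, a: float = 2, b: float = 5, c: float = -12,
            tol: float = 1e-9) -> bool:
    """Check whether x satisfies ax² + bx + c = 0 within a small tolerance."""
    return abs(a * x * x + b * x + c) <= tol


def check_answer(answer_line: str) -> bool:
    """True when the line names exactly two distinct values and both are roots."""
    values = set(extract_numbers(answer_line))
    return len(values) == 2 and all(is_root(v) for v in values)


print(check_answer("Answer: x = 1.5 or x = -4"))  # True
print(check_answer("x = -4 or 1.5"))              # True
print(check_answer("x = 4 or 1.5"))               # False
```

Substituting the values back into the equation, instead of comparing strings, means the check does not depend on how each model orders or formats its answer.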

💡 Real-world Applications

This comparison revealed that DeepSeek-R1 might be particularly valuable for:

- Educational contexts where step-by-step explanations are crucial

- Complex problem-solving scenarios requiring detailed reasoning

- Learning environments where process transparency is as important as the final answer

Meanwhile, Llama 2's concise approach could be preferable for:

- Quick calculations and verifications

- Situations where computational speed is prioritized

- Users who are already familiar with the underlying concepts
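If speed matters for your use case, it can be measured rather than guessed. A non-streaming reply from Ollama's /api/generate endpoint includes `eval_count` (tokens generated) and `eval_duration` (in nanoseconds), and the sketch below turns those into tokens per second. The function name is hypothetical, and the sample dictionary holds made-up numbers for illustration, not measurements from either model.

```python
def tokens_per_second(reply: dict) -> float:
    """Compute generation speed from the timing fields of an Ollama reply."""
    seconds = reply["eval_duration"] / 1e9  # eval_duration is in nanoseconds
    return reply["eval_count"] / seconds


# Made-up values, only to show the arithmetic.
sample_reply = {"eval_count": 120, "eval_duration": 3_000_000_000}
print(tokens_per_second(sample_reply))  # 40.0
```

Running the same prompt through both models and comparing this figure gives a fairer picture of speed than the length of the answer alone.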

🎯 Conclusion

While both models demonstrated strong mathematical capabilities, DeepSeek-R1 stood out for its methodical approach and clear explanation of reasoning. Its step-by-step problem-solving strategy makes it particularly suitable for educational purposes and complex problem-solving scenarios.

The experience of running these models locally through Ollama on Windows was smooth and efficient, opening up exciting possibilities for AI-assisted learning and problem-solving right on our personal computers.

Whether you're an educator, student, or AI enthusiast, these tools represent a significant step forward in making advanced AI capabilities accessible for local use. The future of AI-assisted learning looks bright indeed! ✨

