Biometric identification of a person by facial features is increasingly used to solve business and technical problems. Developing such automated systems, or integrating face recognition tools into advanced applications, has become much easier. This is primarily due to significant progress in AI face recognition.

In this article, we will explain what the components of face recognition software are and how to overcome the limitations and challenges of these technologies.

You will find out how AI, namely Deep Learning, can improve the accuracy and performance of face recognition software, and how this makes it possible to train an automated system to correctly identify faces even when they are poorly lit or have changed in appearance. It will also become clear what techniques are used to train models for face detection and recognition.

Do you remember trying to unlock something or validate that it’s you, with the help of a selfie you have taken, but lighting conditions didn’t allow you to do that? Do you wonder how to avoid the same problem when building your app with a face recognition feature?

How Deep Learning Upgrades Face Recognition Software

Traditional face recognition methods grew out of eigenfaces: a set of basis images from which each face is given a low-dimensional representation using linear algebra. From there, algorithm designers went in different directions. Some focused on the distinctive features of faces and their spatial location relative to each other. Others researched how to split images into parts and compare them with templates.

As a rule, an automated face recognition algorithm tries to reproduce the way a person recognizes a face. However, human capabilities allow us to store all the necessary visual data in the brain and use it when needed. In the case of a computer, everything is much harder. To identify a human face, an automated system must have access to a fairly comprehensive database and query it for data to match what it sees.

The traditional approach has made it possible to develop face recognition software that has performed satisfactorily in many cases. The strengths of the technology outweighed its lower accuracy compared to other methods of biometric identification, such as iris and fingerprint recognition. Automated face recognition gained popularity because the identification process is contactless and non-invasive. Confirming a person's identity in this way is quick and inconspicuous, and it causes relatively few complaints, objections, and conflicts.

Among the strengths that should be noted are the speed of data processing, compatibility, and the possibility of importing data from most video systems. At the same time, the disadvantages and limitations of the traditional approach to facial recognition are also obvious.

LIMITATIONS OF THE TRADITIONAL APPROACH TO FACIAL RECOGNITION

First of all, accuracy is low under fast movement and poor lighting. Failures to tell twins apart, as well as cases that revealed certain racial biases, are perceived negatively by users. Preserving data confidentiality was another weak point. Sometimes the lack of guaranteed privacy and observance of civil rights even became the reason for banning the use of such systems. Vulnerability to presentation attacks (PA) is also a major concern. The need arose both to increase the accuracy of biometric systems and to add the ability to detect digital or physical PAs.

The traditional approach to face recognition, however, has largely exhausted its potential. It cannot make use of very large sets of face data, and it does not allow identification systems to be trained and tuned at an acceptable speed.

AI-ENHANCED FACE RECOGNITION

Modern researchers are focusing on artificial intelligence (AI) to overcome the weaknesses and limitations of traditional methods of face recognition. Therefore, in this article we consider certain aspects of AI face recognition. The development of these technologies takes place through the application of advances in such subfields of AI as computer vision, neural networks, and machine learning (ML).

A notable technological breakthrough is occurring in Deep Learning (DL). Deep Learning is part of ML and is based on the use of artificial neural networks. The main difference between DL and other machine learning methods is representation learning: the network learns useful features directly from the data, so it does not require specialized, hand-designed algorithms for each specific task.

Deep Learning owes much of its progress to convolutional neural networks (CNNs). Previously, artificial neural networks needed enormous computing resources to train and apply fully connected models with a large number of layers of artificial neurons. CNNs, which reuse a small set of filter weights across the whole image, largely overcame this drawback. In addition, the neural networks used in deep learning have many more hidden layers of neurons, and modern DL methods allow all of these layers to be trained and used.

Among the ways of improving neural networks for face recognition systems, it is appropriate to mention the following:

- Knowledge distillation. A combination of two similar networks of different sizes, where the larger one (the teacher) trains the smaller one (the student). After training, the smaller network gives results close to those of the large one, but it does so faster.

- Transfer learning. An already trained network is trained further, either as a whole or only in specific layers, on a specific set of training data. This makes it possible to eliminate bottlenecks. For example, we can improve accuracy by using a set of images of exactly the type on which errors occur most often.

- Quantization. This approach aims to speed up processing by reducing the number of calculations and the amount of memory used. Approximations of floating-point numbers by low-bit numbers help in this.

- Depthwise separable convolutions. From such layers, developers create CNNs that have fewer parameters and require fewer calculations but still perform well in image recognition, and in face recognition in particular.
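Two of the techniques in this list can be illustrated with simple arithmetic. The sketch below is only an illustration, not code from a production system: it counts the weights of a standard convolution versus a depthwise separable one, and shows a basic symmetric 8-bit quantization of floating-point values. The layer sizes and the weights are made-up values, and all function names are hypothetical.

```python
def standard_conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weight count of a standard kernel x kernel convolution (bias ignored)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weight count of a depthwise convolution followed by a 1 x 1 pointwise one."""
    depthwise = kernel * kernel * c_in  # one kernel x kernel filter per input channel
    pointwise = c_in * c_out            # 1 x 1 convolution that mixes the channels
    return depthwise + pointwise


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric linear quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale for all-zero input
    scale = max_abs / 127.0
    return [round(v / scale) for v in values], scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    # Hypothetical layer: 3 x 3 kernel, 64 input channels, 128 output channels.
    print(standard_conv_params(3, 64, 128))        # 73728
    print(depthwise_separable_params(3, 64, 128))  # 8768

    weights = [0.82, -0.31, 0.05, -1.27]           # made-up weights
    quantized, scale = quantize_int8(weights)
    print(quantized, dequantize(quantized, scale))
```

For this hypothetical layer the separable version needs roughly eight times fewer weights, and each quantized value differs from the original by at most half a quantization step.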

For the topic we are considering, it is important to train a deep convolutional neural network (DCNN) to extract unique facial embeddings from images of faces. It is also crucial to make the DCNN robust to displacements, different angles, and other distortions in the image, so that they have little effect on the recognition result. Data augmentation serves this purpose: the training images are modified in many ways before training. This helps mitigate the risks associated with different angles, distortions, etc. The greater the variety of images used during training, the better the model will generalize.
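As a minimal sketch of data augmentation, the example below applies a random horizontal flip, brightness change, and horizontal shift to a tiny grayscale image stored as nested lists. The transformations, their ranges, and the function names are illustrative assumptions. Real pipelines usually rely on an image library and a richer set of transformations.

```python
import random

Image = list[list[int]]  # grayscale image, pixel values in 0..255


def horizontal_flip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def adjust_brightness(img: Image, factor: float) -> Image:
    """Multiply every pixel by `factor`, clamping the result to 0..255."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in img]


def shift_horizontal(img: Image, pixels: int, fill: int = 0) -> Image:
    """Shift right by `pixels` columns (left if negative), padding with `fill`."""
    width = len(img[0])
    pixels = max(-width, min(width, pixels))
    if pixels >= 0:
        return [[fill] * pixels + row[: width - pixels] for row in img]
    return [row[-pixels:] + [fill] * (-pixels) for row in img]


def augment(img: Image, rng: random.Random) -> Image:
    """Apply a random combination of the simple transformations above."""
    if rng.random() < 0.5:
        img = horizontal_flip(img)
    img = adjust_brightness(img, rng.uniform(0.6, 1.4))  # assumed brightness range
    return shift_horizontal(img, rng.randint(-1, 1))     # assumed shift range


if __name__ == "__main__":
    face = [[10, 20, 30], [40, 50, 60]]  # stand-in for a real face crop
    rng = random.Random(0)
    for _ in range(3):
        print(augment(face, rng))
```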

Let us remember the main challenge of face recognition software development. This is the provision of fast and error-free recognition by an automated system. In many cases, this requires training the system at optimal speed on very large data sets. It is deep learning that helps to provide an appropriate answer to this challenge.

Highlights of AI face recognition system software

As noted above, when deciding how to build a face recognition system today, it is worth focusing on convolutional neural networks (CNNs). There are already well-proven approaches to designing their architecture. One example is the residual neural network (ResNet), which is a variant of a very deep feedforward neural network. Another is EfficientNet, which is not only a CNN architecture but also a scaling method: it uniformly scales the depth and width of the CNN as well as the resolution of the input images used for training and evaluation.

Researchers periodically create new neural network architectures. As a general rule, newer architectures use more and more layers, which tends to reduce the probability of errors. Keep in mind, however, that models with more parameters may be more accurate but also run more slowly.
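The idea of scaling depth, width, and resolution together can be sketched in a few lines. The coefficients below are the ones we recall being reported for the EfficientNet baseline and should be treated as an assumption. The base network dimensions are hypothetical, and the function name is ours.

```python
# Scaling coefficients assumed from the EfficientNet baseline: depth, width, resolution.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scale(phi: int, depth: int, width: int, resolution: int) -> tuple[int, int, int]:
    """Scale depth, width and input resolution together with one coefficient phi."""
    return (
        round(depth * ALPHA ** phi),       # number of layers
        round(width * BETA ** phi),        # number of channels
        round(resolution * GAMMA ** phi),  # input image side in pixels
    )


if __name__ == "__main__":
    # Hypothetical base network: 18 layers, 64 channels, 224 x 224 input.
    for phi in range(4):
        print(phi, compound_scale(phi, depth=18, width=64, resolution=224))
```

A single coefficient `phi` grows all three dimensions at once, instead of tuning each one separately.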

When considering face recognition deep learning models, the topics of the algorithms that are embedded in them and the data sets on which they are trained come to the fore. In this regard, it is appropriate to recall how face recognition works.

HOW FACE RECOGNITION WORKS

The face recognition system is based on the sequence of the following processes:

- Face detection and capture, i.e. identification of objects in images or video frames that can be classified as human faces, capturing faces in a given format and sending them for processing by the system.

- Normalization or alignment of images, i.e. processing to prepare for comparison with data stored in a database.

- Extraction of predefined unique facial embeddings.

- Comparison and matching, when the system calculates the distance between the embeddings of the compared images and then infers whether the faces match.

Artificial neural networks and algorithms are created to train automated systems on data so that they can detect and recognize faces across all of the above stages.
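The last step of the list above can be sketched with two common ways of comparing embeddings: cosine similarity and Euclidean distance. The three-dimensional vectors are toy values chosen for illustration. Real embeddings typically have hundreds of dimensions and come from a trained network.

```python
import math


def l2_normalize(vector: list[float]) -> list[float]:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embeddings (1.0 means same direction)."""
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))


def euclidean_distance(a: list[float], b: list[float]) -> float:
    """Straight-line distance between two embeddings (0.0 means identical)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


if __name__ == "__main__":
    # Toy embeddings: two photos of one person and one photo of another person.
    person_a_1 = [0.9, 0.1, 0.3]
    person_a_2 = [0.8, 0.2, 0.35]
    person_b = [0.1, 0.9, -0.4]
    print(cosine_similarity(person_a_1, person_a_2))  # close to 1
    print(cosine_similarity(person_a_1, person_b))    # much lower
```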

Building AI face recognition systems is possible in two ways:

- Use of ready-made pre-trained face recognition deep learning models. Models such as DeepFace, FaceNet, and others are specially designed for face recognition tasks.

- Custom model development.

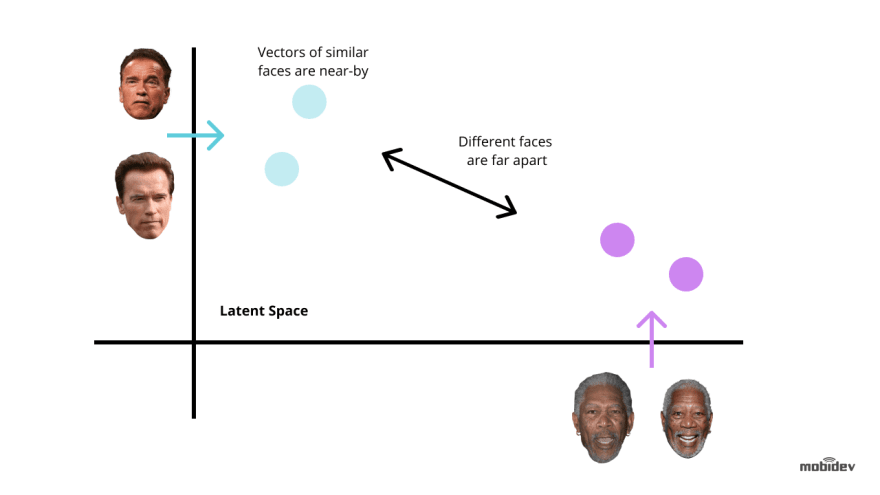

When starting the development of a new model, several more parameters have to be defined. First of all, this concerns the inference time, for which an acceptable range is set. You will also have to choose a loss function. By calculating the difference between predicted and actual data, it measures how well the algorithm models the data set. Triplet loss and AM-Softmax are most often used for this purpose. The triplet loss function requires two images of one person (the anchor and the positive) and one image of another person (the negative). The parameters of the network are learned so as to bring the same faces closer together in the feature space and, conversely, to push the faces of different people apart. AM-Softmax is one of the advanced modifications of the standard softmax loss: it adds regularization based on an additive margin, which increases the accuracy of the face recognition system thanks to better class separation.
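Both loss functions can be written down compactly. The sketch below is a plain-Python illustration for a single training example, not a training-ready implementation. The margin of 0.2 for triplet loss and the scale of 30 with a margin of 0.35 for AM-Softmax are commonly cited defaults that we assume here, and the input values are made up.

```python
import math


def squared_distance(a: list[float], b: list[float]) -> float:
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor, positive, negative, margin: float = 0.2) -> float:
    """Zero once the negative is farther from the anchor than the positive by `margin`."""
    return max(0.0, squared_distance(anchor, positive)
               - squared_distance(anchor, negative) + margin)


def am_softmax_loss(cosines: list[float], target: int,
                    scale: float = 30.0, margin: float = 0.35) -> float:
    """Softmax cross-entropy with an additive margin subtracted from the target cosine.

    `cosines[j]` is the cosine between the embedding and the weight vector of class j.
    """
    logits = [scale * (c - margin) if j == target else scale * c
              for j, c in enumerate(cosines)]
    top = max(logits)  # subtract the maximum for numerical stability
    log_sum = math.log(sum(math.exp(v - top) for v in logits))
    return log_sum - (logits[target] - top)


if __name__ == "__main__":
    anchor, positive, negative = [0.0, 1.0], [0.1, 0.9], [1.0, 0.0]
    print(triplet_loss(anchor, positive, negative))  # 0.0: triplet already satisfied
    print(triplet_loss(anchor, negative, positive))  # large: roles swapped

    cosines = [0.9, 0.1, -0.2]  # made-up cosines for three identities
    print(am_softmax_loss(cosines, target=0))
    print(am_softmax_loss(cosines, target=0, margin=0.0))  # plain scaled softmax
```

With the margin, the same prediction produces a larger loss, so the network is pushed to separate the classes more widely than plain softmax would require.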

For most projects, the use of pre-trained models is fully justified and does not require a large budget or a long timeline. Provided you have a project team of developers with the necessary level of technical expertise, you can create your own face recognition deep learning model. This approach will provide the desired parameters and functionality of the system, on the basis of which it will be possible to create a whole line of face recognition-driven software products. At the same time, the significant cost and duration of such a project should be taken into account. It should also be remembered that facial recognition AI has to be trained and that assembling a training data set is often a stumbling block.

Next, we will touch on one of the main qualities that face recognition machine learning is expected to deliver. We will consider how accurate facial recognition is and how to improve it.

Face recognition accuracy and how to improve it

What factors affect the accuracy of facial recognition? These factors are, first of all, poor lighting, fast and sharp movements, poses and angles, and facial expressions, including those that reflect a person’s emotional state.

It is quite easy to accurately recognize a frontal image that is evenly lit and also taken on a neutral background. But not everything is so simple in real-life situations. The success of recognition can be complicated by any changes in appearance, for example, hairstyle and hair color, the use of cosmetics and makeup, and the consequences of plastic surgery. The presence in images of such items as hats, headbands, etc., also plays a role.

The key to correct recognition is an AI face recognition model that has an efficient architecture and is trained on as large a dataset as possible. This reduces the influence of extraneous factors on the results of image analysis. Advanced automated systems can already correctly assess a person's appearance regardless of, for instance, mood, closed eyes, or a change of hair color.

Face recognition accuracy can be considered from two angles. The first is the embedding match threshold set for specific software, above which a match is considered sufficient for identification. The second is the probability that an AI face recognition system produces a correct result.

Let’s consider both aspects in turn. We noted above that the comparison of images is based on checking how closely the facial embeddings coincide. A complete match is possible only when comparing exactly the same images. In all other cases, calculating the distance between the embeddings of the images yields a similarity score. The fact is that most automated face recognition systems are probabilistic and make predictions. The essence of these predictions is to estimate the probability that the two compared images belong to the same person.

The choice of the similarity threshold is usually left to the customer who commissions the software. A high threshold may inconvenience users, because genuine users are rejected more often. Lowering the threshold will reduce the number of such misunderstandings and delays, but will increase the likelihood of a false match. The customer chooses according to priorities, the specifics of the industry, and the scenarios in which the automated system is used.
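The trade-off can be made concrete by counting errors at several thresholds. In the sketch below, the similarity scores are invented for illustration: "genuine" scores come from pairs of images of the same person and "impostor" scores from pairs of different people. The function name is hypothetical.

```python
def error_rates(genuine: list[float], impostor: list[float],
                threshold: float) -> tuple[float, float]:
    """Return (false accept rate, false reject rate) at the given threshold.

    A pair is accepted as a match when its similarity score is >= threshold.
    """
    false_accepts = sum(1 for s in impostor if s >= threshold)
    false_rejects = sum(1 for s in genuine if s < threshold)
    return false_accepts / len(impostor), false_rejects / len(genuine)


if __name__ == "__main__":
    genuine_scores = [0.9, 0.8, 0.6, 0.55]   # same person, made-up values
    impostor_scores = [0.1, 0.3, 0.5, 0.65]  # different people, made-up values
    for threshold in (0.5, 0.6, 0.7):
        far, frr = error_rates(genuine_scores, impostor_scores, threshold)
        print(f"threshold={threshold}: FAR={far:.2f}, FRR={frr:.2f}")
```

Raising the threshold lowers the false accept rate and raises the false reject rate. Which balance is acceptable depends on the use case.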

Let’s move on to the accuracy of AI face recognition in terms of the proportion of correct and incorrect identifications. First of all, we should note that the results of many studies show that AI facial recognition technology copes with its tasks at least no worse, and often better than a human does. As for the level of recognition accuracy, the National Institute of Standards and Technology provides convincing up-to-date data in the Face Recognition Vendor Test (FRVT). According to reports from this source, face recognition accuracy can be over 99%, thus significantly exceeding the capabilities of an average person.

By the way, current FRVT results also contain data to answer common questions about which algorithms are used and which algorithm is best for face recognition.

When clients become familiar with examples of the practical use of these technologies, they are often curious about whether face recognition can be fooled or hacked. Of course, every information system can have vulnerabilities that have to be eliminated.

At the moment, in the areas of security and law enforcement, where the life and health of people may depend on the accuracy of the conclusion about the identification of a person, automated systems do not yet work completely autonomously, without the participation of people. The results of the automated image search and matching are used for the final analysis by specialists.

For example, the International Criminal Police Organization (INTERPOL) uses the IFRS face recognition system. Thanks to this software, almost 1,500 criminals and missing persons have already been identified. At the same time, INTERPOL notes that its officers always carry out a manual check of the conclusions of computer systems.

Either way, the AI face recognition software helps a lot by quickly sampling images that potentially match what is being tested. This facilitates the task of people who will assess the degree of identity of faces. To minimize possible errors, multifactor identification of persons is used in many fields, where other parameters are evaluated in addition to the face.
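A shortlist of candidates for human review can be sketched as a top-k search over a gallery of stored embeddings. The gallery below is a toy dictionary with invented identities and vectors, which we assume are already normalized to unit length so that the dot product equals cosine similarity. Large systems would typically use vectorized or approximate nearest-neighbor search instead of this brute-force loop.

```python
import heapq


def dot(a: list[float], b: list[float]) -> float:
    """Dot product; equals cosine similarity for unit-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def top_candidates(probe: list[float], gallery: dict[str, list[float]],
                   k: int = 3) -> list[tuple[float, str]]:
    """Return the k gallery entries most similar to the probe, best first."""
    scored = ((dot(probe, embedding), identity)
              for identity, embedding in gallery.items())
    return heapq.nlargest(k, scored)


if __name__ == "__main__":
    gallery = {  # hypothetical records with unit-length embeddings
        "record-001": [1.0, 0.0],
        "record-002": [0.0, 1.0],
        "record-003": [0.6, 0.8],
    }
    for score, identity in top_candidates([1.0, 0.0], gallery, k=2):
        print(identity, round(score, 3))  # shortlist passed on to a human reviewer
```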

In general, in the world of technology, there is always a kind of race between those who seek to exploit technological innovations illegally and those who oppose them by protecting people’s data and assets. For example, the surge of spoofing attacks leads to the improvement of anti-spoofing techniques and tools, the development of which has already become a separate specialization.

Various tricks and devices have recently been invented to dazzle computer vision. Sometimes such masking is done to protect privacy and ensure people's psychological comfort, and sometimes for malicious purposes. However, automated biometric identification by face can often overcome such obstacles. Developers build into the algorithms methods that neutralize common techniques for defeating face recognition.

In this context, it is useful to recall the relatively high accuracy of neural network facial recognition for people wearing medical masks, demonstrated during the recent COVID-19 pandemic. Such examples give confidence that high face recognition accuracy is achievable even under unfavorable circumstances.

The accuracy of facial recognition technology can be increased by enhancing neural network architectures and by improving deep learning models through continuous training on new datasets, which are often larger and of higher quality.

Another significant challenge in the development of automated systems is the need to reduce recognition time and the amount of system resources used, without losing accuracy.

At the moment, the technical level of advanced applications already allows an image to be analyzed and compared with millions of records within a few seconds. An important role is played by the use of improved graphical interfaces. Performing face recognition directly on peripheral devices is also promising, because it makes it possible to do without servers and to keep user data secure by not sending it over the Internet.

Conclusion

So, we have considered how facial recognition uses AI and, in particular, machine learning. We have listed the main areas of development of these technologies. Touching on the technical aspects of creating automated systems for neural network facial recognition, we identified common problems that arise in this process and promising ways to solve them.

From this article, you learned how AI face recognition works and what components it consists of. Also, we did not overlook the topic of the accuracy of this process. In particular, we revealed how to improve face recognition accuracy. You will be able to use the knowledge obtained from this article to implement your ideas in the research field.
