What is Ensembling?

Ensembling is a way of aggregating predictions from different machine learning models, with the aim of producing a combined model that generalizes better to new data than any single model would.

Ensembling gives us more confidence in our predictions by drawing on the collective strength of multiple models. As living beings, we understand the idea of "strength in unity", and the same principle applies to machine learning models. By combining individual models, ensembling can improve the accuracy and robustness of predictions.

Ensemble algorithms such as random forest, XGBoost, CatBoost, and AdaBoost combine many simple learners to achieve impressive results. These learners are typically decision trees that are kept simple by limiting their depth or the features they can use.

Types of Ensembles

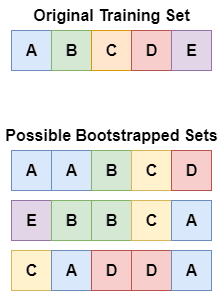

Bootstrap aggregation (e.g. random forest)

This is also known as bagging. With this method, models are trained on several "bootstrapped" datasets. A bootstrapped dataset is a variant of the original training set created by sampling from it with replacement, so some samples appear more than once and others are left out.
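As a rough illustration of what a bootstrapped dataset looks like, here is a minimal sketch using only Python's standard library. The helper name `bootstrap_sample` and the toy dataset are made up for this example.

```python
import random

def bootstrap_sample(rows, rng):
    """Draw len(rows) items from rows, with replacement."""
    return [rng.choice(rows) for _ in rows]

rng = random.Random(0)          # fixed seed so the run is repeatable
original = list(range(10))      # stand-in for 10 training samples
sample = bootstrap_sample(original, rng)

# Samples that were never drawn are "missing" from this bootstrapped dataset.
left_out = sorted(set(original) - set(sample))

print("bootstrapped dataset:", sample)
print("left out this time:  ", left_out)
```

Running it with different seeds gives a different mix of repeated and missing samples each time, which is what lets each learner see a slightly different view of the data.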

A separate weak learner is trained on each bootstrapped dataset, and their predictions are combined by voting (for classification) or averaging (for regression).
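The train-then-vote loop can be sketched in a few lines. This is a toy version, not how random forest is implemented: the weak learner is assumed to be a one-dimensional threshold rule ("stump"), and the names `fit_stump`, `bagging_fit`, and `bagging_predict` are invented for this example.

```python
import random
from collections import Counter

def fit_stump(points):
    """Weak learner: choose the threshold on x that misclassifies the fewest
    points. The stump predicts 1 when x > threshold, else 0."""
    best_threshold, best_errors = None, None
    for threshold, _ in points:
        errors = sum((x > threshold) != bool(y) for x, y in points)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold

def bagging_fit(points, n_models, rng):
    """Train one stump per bootstrapped dataset."""
    thresholds = []
    for _ in range(n_models):
        sample = [rng.choice(points) for _ in points]  # sample with replacement
        thresholds.append(fit_stump(sample))
    return thresholds

def bagging_predict(thresholds, x):
    """Majority vote over the predictions of all stumps."""
    votes = Counter(int(x > t) for t in thresholds)
    return votes.most_common(1)[0][0]

# Toy data as (x, label): label is 1 for large x, with one noisy point at 4.5.
data = [(1, 0), (2, 0), (3, 0), (4, 0), (5, 0),
        (6, 1), (7, 1), (8, 1), (9, 1), (4.5, 1)]

rng = random.Random(42)
ensemble = bagging_fit(data, n_models=25, rng=rng)  # odd count avoids tied votes
print("learned thresholds:", sorted(ensemble))
print("prediction for x=0.5:", bagging_predict(ensemble, 0.5))
print("prediction for x=10: ", bagging_predict(ensemble, 10))
```

Each stump may settle on a slightly different threshold because it saw a different bootstrapped dataset, and the vote smooths those differences out.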

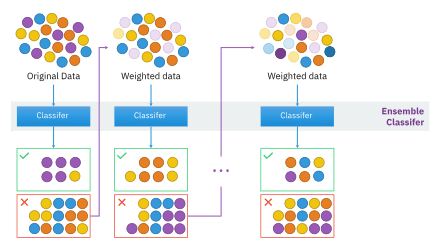

Boosting (e.g. CatBoost, XGBoost)

This also uses weak learners, but here they are trained one after another, with each learner correcting the errors of the ones before it.

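To visualize this, picture the AdaBoost approach: every data point starts with an equal weight, and a model is trained on the weighted data. The samples it misclassifies are then given larger weights and a new model is trained, and this is repeated multiple times. Gradient boosting libraries such as XGBoost and CatBoost follow the same sequential idea, but each new tree is fit to the gradient of the loss (roughly, the errors that remain) rather than to reweighted samples.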
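The reweighting loop described above can be sketched as a small AdaBoost-style routine. This is a simplified illustration under a few assumptions: labels are +1 and -1, the weak learner is a one-dimensional threshold stump, and the helper names (`stump_predict`, `fit_weighted_stump`, `adaboost_fit`, `adaboost_predict`) are invented for this example. It is not what XGBoost or CatBoost do internally.

```python
import math

def stump_predict(x, threshold, sign):
    """Predict `sign` when x > threshold, otherwise `-sign`."""
    return sign if x > threshold else -sign

def fit_weighted_stump(xs, ys, weights):
    """Return (weighted_error, threshold, sign) for the stump with the
    lowest weighted error on the training data."""
    best = None
    for threshold in xs:
        for sign in (1, -1):
            error = sum(w for x, y, w in zip(xs, ys, weights)
                        if stump_predict(x, threshold, sign) != y)
            if best is None or error < best[0]:
                best = (error, threshold, sign)
    return best

def adaboost_fit(xs, ys, n_rounds):
    n = len(xs)
    weights = [1.0 / n] * n                      # every point starts equal
    learners = []
    for _ in range(n_rounds):
        error, threshold, sign = fit_weighted_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)    # guard against log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # this learner's say
        learners.append((alpha, threshold, sign))
        # Misclassified points get larger weights, correct ones get smaller.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, sign))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return learners

def adaboost_predict(learners, x):
    """Weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, t, sign) for alpha, t, sign in learners)
    return 1 if score >= 0 else -1

# Toy data: the negative class sits in the middle, so no single stump fits it.
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]

learners = adaboost_fit(xs, ys, n_rounds=3)
print([adaboost_predict(learners, x) for x in xs])
```

On this toy data a single stump always gets at least two points wrong, while three rounds of reweighting are enough for the combined vote to separate the classes.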

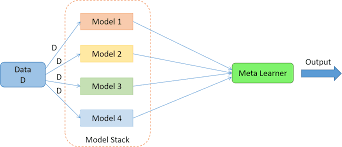

Stacking

In stacking, the predictions of several models are used as input to another model, called the meta-model. The meta-model is trained on the predictions of the base models to make the final prediction.
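Here is a minimal sketch of that idea in plain Python. Several things are assumptions made for illustration: the base models are single-feature threshold stumps, the meta-model is a deliberately tiny lookup table (in practice something like logistic regression is a common choice), and the meta-model is trained on a separate hold-out set so it does not learn from predictions on data the base models have already seen. All function names are hypothetical.

```python
from collections import Counter, defaultdict

def fit_stump(rows, feature):
    """Base model: threshold on one feature, predicting 1 when value > threshold."""
    best_threshold, best_errors = None, None
    for x, _ in rows:
        threshold = x[feature]
        errors = sum((xi[feature] > threshold) != bool(yi) for xi, yi in rows)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return lambda x, t=best_threshold: int(x[feature] > t)

def fit_meta(base_models, rows):
    """Meta-model: for each combination of base predictions, remember the
    majority label seen in `rows`. Unseen combinations fall back to the
    overall majority label."""
    table = defaultdict(Counter)
    for x, y in rows:
        table[tuple(m(x) for m in base_models)][y] += 1
    fallback = Counter(y for _, y in rows).most_common(1)[0][0]
    lookup = {key: counts.most_common(1)[0][0] for key, counts in table.items()}
    return lambda preds: lookup.get(tuple(preds), fallback)

def stacked_predict(base_models, meta_model, x):
    """Feed the base models' predictions into the meta-model."""
    return meta_model([m(x) for m in base_models])

# Toy data as ((f0, f1), label): label is 1 only when BOTH features are large.
base_rows = [((1, 2), 0), ((2, 8), 0), ((3, 3), 0), ((8, 1), 0),
             ((7, 7), 1), ((9, 9), 1), ((6, 8), 1), ((4, 9), 0)]
meta_rows = [((2, 1), 0), ((1, 9), 0), ((9, 2), 0), ((8, 8), 1),
             ((3, 7), 0), ((7, 3), 0), ((9, 6), 1), ((6, 9), 1)]

base_models = [fit_stump(base_rows, feature=0), fit_stump(base_rows, feature=1)]
meta_model = fit_meta(base_models, meta_rows)

for point in [(9, 9), (9, 1), (1, 9), (1, 1)]:
    print(point, "->", stacked_predict(base_models, meta_model, point))
```

Each stump only looks at one feature, so on its own it cannot tell `(9, 1)` from `(9, 9)`. The meta-model learns how to combine the two opinions.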

There are a myriad of creative ways, even outside these popular methods, to ensemble two or more different models. By combining models, we tend to reduce their individual weaknesses and amplify their strengths.

Thanks for reading, Adios👋.
