Since my last post on Ruby: Self, our cohort has progressed in Ruby OOP, including Object Relationships, Object Architecture and Meta-programming. We are now heading towards building our first CLI (Command-Line Interface) project. I am both nervous and excited! I have decided to step back and re-evaluate my understanding of fetching data through remote sources, which eventually would be beneficial for scraping for the CLI project.

What is scraping?

Scraping is a way to leverage remote data, and there are various types of data from .csv, html, to api. This information data essentially boost our built applications, and enhance the capabilities of the user interface. Building data from scratch is possible, though time consuming. Retrieving these data points from available remote sources is ideal. Ruby has gems that allow us to leverage these remote data sources.

Nokogiri::XML::Nodeset

One way you can parse from html sites is to utilize Ruby gem Open-URI and Nokogiri. Open-URI allows us to make HTTP requests. Nokogiri will parse, and collect our data in XML (Extensible Mark-Up Language) objects. With the CSS selector tool, you should be able to pin-point specific elements. It enables us to collect information from the nested nodes, and produce desired data outcomes from text to attributes. Let's start building one for fun! 🙂

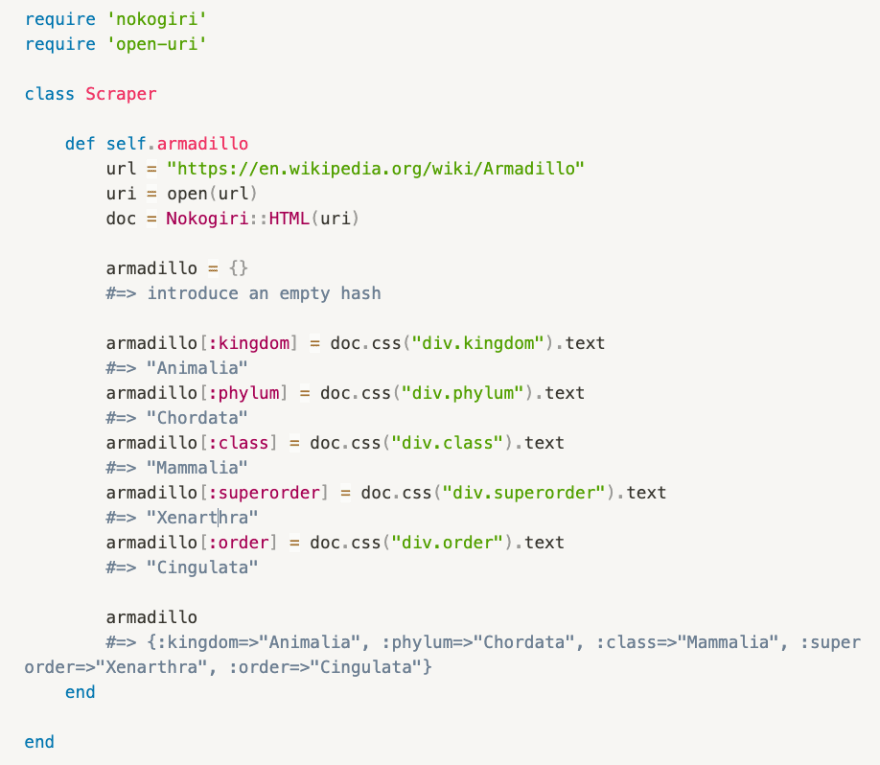

Scraping methods are typically built as class methods. The main purpose of the scraping class is to retrieve remote data, and pass it along to our domain classes. For the purpose of this exploration, I am placing a specific url as my default argument. Armadillo from Wikipedia page will be my case study, we should admit (or at least I think) they are cute.

open(url) is an Open-URI module in Ruby, and you will retrieve HTML content from the url link.

Nokogiri::HTML will convert that string of HTML into NodeSet (in simpler terms, nested nodes). They are referred to as XML objects.

CSS selector is a great tool to inspect elements while perusing the webpage, and learn the data structure. The goal is to extract any desired information with .css methods, and manipulate the data for our use.

I would like to create basic key and value pairs of armadillo scientific classification.

With CSS selector, we are able to find each element's id (#) or class (.), and return its value (texts, images, href and others).

We just created our first key and value pairs with Open-URI and Nokogiri. Yay!

Ruby has several ways to parse HTML element attributes. This is just one of the many examples.

Net::HTTP::JSON

JSON (JavaScript Object Notation) is another Ruby module for parsing. JSON formatted data has better data structure arrangements compared to Nokogiri::XML objects, and provides a more straightforward approach when extracting information.

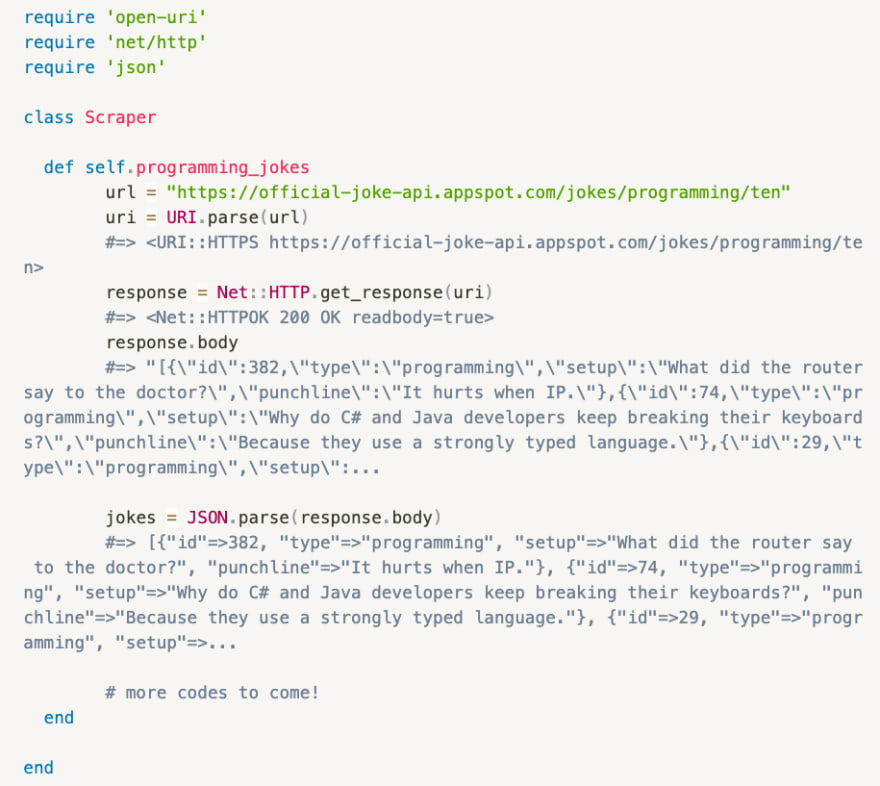

First, we need to acquire the API endpoint. API stands for Application Programming Interface, and it allows various software platforms to communicate to each other. Imagine uploading your Instagram feed, and simultaneously sharing it on your Facebook page. I found a simple RESTful API endpoint formatted in JSON data, which we can utilize and implement for this exercise.

We still need the open-uri module to make the HTTP GET request.

Net::HTTP allows the data structure outcomes to resemble the actual HTTP response.

response.body returns strings of data, which are called JSON objects.

When we are parsing with json module, it returns Ruby hashes with key and value pairs. The resulting information data structure differs from the Nokogiri parsing method, and increases efficiency of debugging time.

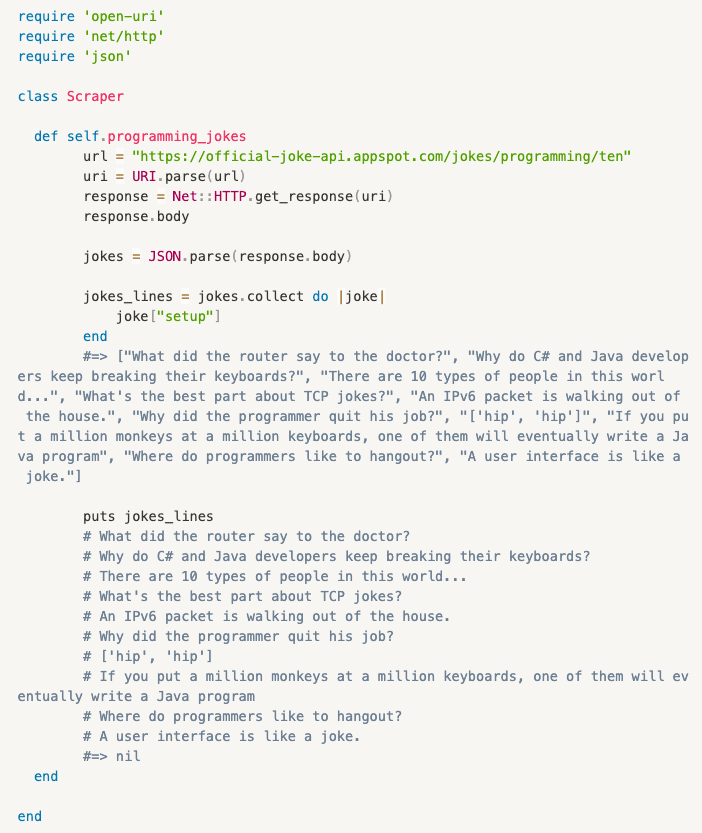

I would like to only print (or puts in Ruby parlance) these lines of jokes. It is easier to manipulate the data when integrating into our CLI compared to using the CSS selector method.

On a side note, I crossed paths with ScrapingBee blog. It gives you extensive knowledge on how to parse many platforms aside from just Ruby. I learned a lot from reading this article.

post scriptum:

I am currently enrolled in Flatiron's part-time Software Engineering program, and have decided to blog my coding journey (on subjects that I have faced difficulty with). I believe one of the catalyst to becoming a good programmer is to welcome constructive criticism. Feel free to drop a message. 🙂

Keep Calm, and Code On.

External Sources:

Wikipedia

Official Joke API

ScrapingBee Blog

Armadillo picture

fentybit | GitHub | Twitter | LinkedIn

Top comments (1)

Great post! Your journey through Ruby OOP and exploring data scraping is inspiring. The way you've explained Nokogiri and JSON parsing is clear and insightful. Also, thanks for the shoutout to the ScrapingBee blog – Keep up the amazing work.

By the way, have you checked out Crawlbase for further insights into web scraping? It's fantastic.