In February this year, we announced Fleet (formerly Hole), a FaaS platform built on Node.js to be faster than other platforms and to integrate more faithfully with the ecosystem. In this post, I will explain how it works and what we bring to the serverless ecosystem that is different. In the next article, I will discuss the platform itself.

What are Fleet Functions?

Fleet Functions is a technology that executes Node.js functions invoked by HTTP requests, auto-scales from zero to N, and runs those functions with a cold start close to zero.

    export default (req, res) => {
      res.send({ message: 'ƒ Fleet Simple HTTP Endpoint!' });
    };

Live Example: https://examples.runfleet.io/simple-http-endpoint/

Common issues

Briefly, a cold start happens when your service receives a request and the platform has to provision your function before it can handle that request, usually following this flow:

1. Event invoke

2. Start new VM

3. Download code (from S3, normally)

4. Setup runtime

5. Init function

Steps 2 to 4 are what we call a cold start. On later invocations, if the instance is still available and cached, the provider can skip these steps and execute the function with a warm start. There is a common misunderstanding here: when a function is already running and receives a new invocation, the provider starts a new instance with a cold start. The same happens when your application receives many invocations simultaneously: all of them will have a cold start.
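
To make the cold and warm paths concrete, here is a minimal sketch of a provider that handles one invocation per instance. The class and its fields are hypothetical and only model the bookkeeping, not any real provider's scheduler or timings.

```javascript
// Hypothetical model: one invocation per instance, idle instances stay cached.
class OneRequestPerInstanceProvider {
  constructor() {
    this.idle = [];       // warm instances waiting for work
    this.coldStarts = 0;
    this.warmStarts = 0;
  }

  // Returns an instance and records whether it needed a cold start.
  acquire() {
    const warm = this.idle.pop();
    if (warm) {
      this.warmStarts += 1;
      return warm;
    }
    // No idle instance: start a VM, download the code, set up the runtime.
    this.coldStarts += 1;
    return { id: this.coldStarts };
  }

  // A finished instance is cached and can serve a later invocation warm.
  release(instance) {
    this.idle.push(instance);
  }
}

const provider = new OneRequestPerInstanceProvider();

// Three invocations arrive at the same time: none can share an instance.
const burst = [provider.acquire(), provider.acquire(), provider.acquire()];
burst.forEach((instance) => provider.release(instance));

// A later invocation finds a cached instance and starts warm.
provider.release(provider.acquire());

console.log(provider.coldStarts, provider.warmStarts); // 3 1
```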

One workaround some people adopt is to ping the function from time to time to keep the instance alive. Another is to use provisioned concurrency, which increases your costs, requires you to know exactly when your application's traffic spikes occur, and requires monitoring to prevent unnecessary expenses. For many people this is a poor trade-off, because it defeats the idea of not having to worry about infrastructure.
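
The periodic ping workaround can be sketched as follows. `startKeepAlive` is a hypothetical helper, the URL in the usage comment is a placeholder, and the default `fetch` assumes Node.js 18 or later.

```javascript
// Pings `url` every `intervalMs` milliseconds so the provider keeps an instance warm.
// `fetchImpl` is injectable for testing; it defaults to the global fetch (Node.js 18+).
function startKeepAlive(url, intervalMs, fetchImpl = fetch) {
  const timer = setInterval(() => {
    // Failures are ignored: a missed ping only means the next request may be cold.
    Promise.resolve()
      .then(() => fetchImpl(url))
      .catch(() => {});
  }, intervalMs);
  timer.unref(); // do not keep the process alive just for pings
  return () => clearInterval(timer); // call the returned function to stop pinging
}

// Usage (placeholder URL, ping every five minutes):
// const stop = startKeepAlive('https://example.com/my-function', 5 * 60 * 1000);
```

Note that this keeps only one instance warm, so a burst of simultaneous requests would still hit cold starts.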

Fleet Solution

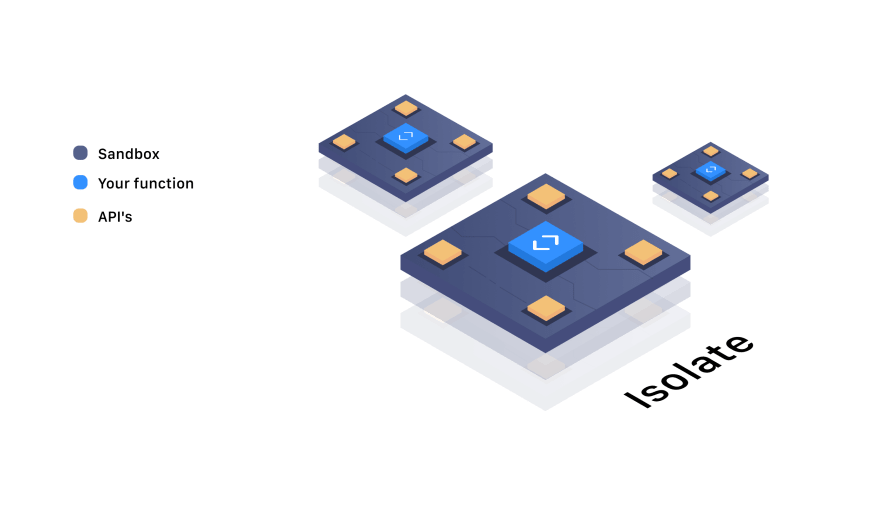

Fleet's answer to this is to execute functions safely and quickly. We focus on running many functions in a single Node.js process that can handle thousands of functions at the same time, each executed in an isolated, safe, and fast environment.

- Isolated: each function runs with isolated memory and can use CPU according to its provisioned limits.

- Safe: within the same instance, one function cannot observe another or obtain its resources (such as information from process.env, context, requests...). This also includes access to the file system.

- Fast: we eliminated the "Start new VM" and "Setup runtime" steps. The source code is available in each region where the function is available, close to where it is executed, and functions run faster within the same process.

This means we can run Node.js functions much faster than other platforms, and the functions consume an order of magnitude less memory, while keeping the environment secure and isolated.

To enforce a safe environment, Fleet had to restrict some Node.js APIs so that suspicious functions cannot reach resources they should not. Each running function only has access to the resources that have been granted to it.
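
As an illustration of the general idea of several functions sharing one Node.js process while seeing only what they were granted, here is a sketch using Node's built-in `node:vm` module. This is an assumption made for illustration, not a description of Fleet's implementation. The Node.js documentation states that `node:vm` is not a security mechanism, so a real platform needs much stronger isolation than this. `loadFunction` and the `env` values are hypothetical.

```javascript
const vm = require('node:vm');

// Loads a function's source into its own context. The only globals it can
// see are the ones placed in the sandbox: its own `env` and a `module` object.
// NOTE: node:vm alone is NOT a security boundary; this is only an illustration.
function loadFunction(source, env) {
  const sandbox = { module: { exports: null }, env: Object.freeze({ ...env }) };
  vm.createContext(sandbox);
  vm.runInContext(source, sandbox, { timeout: 100 }); // bound the load time
  return sandbox.module.exports;
}

const source =
  'module.exports = () => ({ key: env.API_KEY, hasProcess: typeof process !== "undefined" });';

// Two functions loaded into the same Node.js process, each with its own env.
const billing = loadFunction(source, { API_KEY: 'billing-secret' });
const search = loadFunction(source, { API_KEY: 'search-secret' });

console.log(billing().key, billing().hasProcess); // billing-secret false
console.log(search().key, search().hasProcess);   // search-secret false
```

Each function sees only the `env` it was loaded with, and the host's `process` global is not visible as a global inside either context.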

Scaling

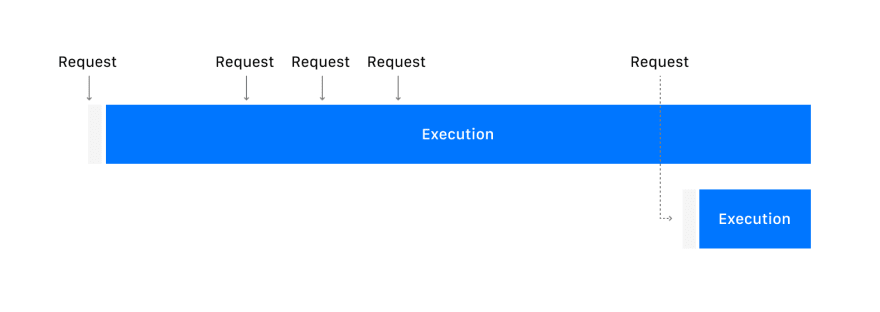

Another of Fleet's main differentiators is how we scale Node.js functions. Other platforms scale functions only via concurrency: each VM instance can handle only one invocation at a time, and if it is busy the platform provisions a new instance. There is a limit to that, normally 1000 concurrent instances.

In Fleet, by contrast, a function can handle many asynchronous requests at a time, up to a configured limit. If this limit stays reached for some time, a new instance of the function is provisioned in just a few milliseconds. This means that while your function is running it can handle many requests and reuse the connection established with your database across several of them.

In Fleet there is no fixed concurrency limit; it is dynamic per region. We do everything we can to handle the maximum number of requests, and you control the asynchronous limit, so you can multiply the number of requests your application can handle.
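
The database connection reuse mentioned above can be sketched like this. `connectToDatabase` is a stand-in for a real driver's connect call, and the handler uses the same `(req, res)` shape as the example earlier in this post.

```javascript
// Stand-in for a real database driver (hypothetical); counts connections opened.
let connectCount = 0;
async function connectToDatabase() {
  connectCount += 1;
  return { query: async (sql) => `result of ${sql}` };
}

// Cached at module scope, so the connection outlives a single request.
let connectionPromise = null;
function getConnection() {
  if (!connectionPromise) connectionPromise = connectToDatabase();
  return connectionPromise;
}

// Every request handled by this instance shares the same connection.
async function handler(req, res) {
  const db = await getConnection();
  res.send({ rows: await db.query('SELECT 1') });
}

// Simulate three overlapping requests hitting the same instance.
const fakeRes = { send: () => {} };
Promise.all([handler({}, fakeRes), handler({}, fakeRes), handler({}, fakeRes)])
  .then(() => console.log(connectCount)); // 1
```

Caching the promise rather than the resolved connection means overlapping requests that arrive before the connection is ready still share a single connect call.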
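
To see how the asynchronous limit multiplies capacity, here is a small sketch that counts the instances needed for a burst of simultaneous requests. `instancesForBurst` is a hypothetical helper, and it simplifies the behavior described above by provisioning a new instance as soon as every existing one is full.

```javascript
// Counts how many instances a burst of simultaneous requests needs when each
// instance accepts up to `asyncLimit` in-flight requests.
function instancesForBurst(requests, asyncLimit) {
  const inFlight = []; // inFlight[i] = requests currently on instance i
  for (let r = 0; r < requests; r += 1) {
    const i = inFlight.findIndex((count) => count < asyncLimit);
    if (i === -1) inFlight.push(1); // every instance is full: provision one
    else inFlight[i] += 1;
  }
  return inFlight.length;
}

console.log(instancesForBurst(250, 1));   // 250 (one invocation per instance)
console.log(instancesForBurst(250, 100)); // 3
```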

HTTP REST

Fleet Functions are invoked via HTTP REST, so there is no need for an extra API Gateway service. For each new deployment, Fleet generates a new URL for a preview deployment (at <uid>-<project-name>.runfleet.io). With an option, you can promote a deployment to production with a dedicated subdomain at <project-name>.runfleet.io.

All deployments are made to a project created on console.fleetfn.com, where you can also invite members to teams with privileges, but that's a subject for another article.
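
The two URL patterns can be expressed as a small helper. `deploymentUrl` is hypothetical, the project name and uid below are made up, and the `https` scheme is an assumption based on the live example URL above.

```javascript
// Builds a deployment URL following the patterns described above.
// With a `uid` it is a preview deployment; without one it is production.
function deploymentUrl(projectName, uid) {
  const host = uid
    ? `${uid}-${projectName}.runfleet.io`
    : `${projectName}.runfleet.io`;
  return `https://${host}`;
}

console.log(deploymentUrl('my-api', 'a1b2c3')); // https://a1b2c3-my-api.runfleet.io
console.log(deploymentUrl('my-api'));           // https://my-api.runfleet.io
```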

You may want to read more about it here.

Use cases

Fleet is built to run Node.js functions much faster and will soon run functions in other languages using WebAssembly. With that in mind, Fleet does not deal with container provisioning like Cloud Run, nor does it let you create your own custom runtime environment.

Fleet can handle your application's APIs very well, meeting high demand and saving money when demand is low.

Fleet can handle microservices, calls between functions, and traffic changes with great confidence. We are working on what we call the Virtual Private Function, or VPF, which is a network of private functions. It isolates the functions inside the VPF from the outside world and allows only some of them to be invoked from outside. It also allows better monitoring and sharing between VPFs. In the future, we also want to let you securely connect your current network to the VPF network.

In addition, we are working on Traffic Shifting, our service for canary deployments driven by data-based rules. You define an autonomous set of rules that makes the traffic split more reliable. For example, a certain number of successful or failed requests can increase the traffic percentage for a specific deployment. This is meant for services that are sensitive to code problems, or for testing new features.

Although Fleet's focus is not website hosting, you can also do server-side rendering with React: deploy the static files to S3 and use functions for routing.
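
Since Traffic Shifting is still in progress, the following is only a sketch of what a rule-based canary decision could look like. The rule fields and the `nextCanaryPercent` function are hypothetical and are not Fleet's configuration format.

```javascript
// Hypothetical rule set; field names are assumptions, not Fleet's configuration.
const rules = {
  minRequests: 100,   // wait for enough data before deciding
  maxErrorRate: 0.01, // above this, send all traffic back to the stable deployment
  stepPercent: 20,    // how much to increase on each healthy evaluation
};

// Returns the canary deployment's next traffic percentage given its recent stats.
function nextCanaryPercent(currentPercent, stats, r = rules) {
  const total = stats.succeeded + stats.failed;
  if (total < r.minRequests) return currentPercent;    // not enough data yet
  if (stats.failed / total > r.maxErrorRate) return 0; // roll back
  return Math.min(100, currentPercent + r.stepPercent);
}

console.log(nextCanaryPercent(10, { succeeded: 50, failed: 0 }));    // 10
console.log(nextCanaryPercent(10, { succeeded: 995, failed: 5 }));   // 30
console.log(nextCanaryPercent(10, { succeeded: 900, failed: 100 })); // 0
console.log(nextCanaryPercent(90, { succeeded: 1000, failed: 0 }));  // 100
```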

I invite you to visit our website, our documentation, and the examples repository. Feel free to explore. If this interests you and you are curious to try it, we are in a private beta, with some people already testing, and we send invitations every week. Registering is very easy:

- Go to console.fleetfn.com

- Continue with GitHub, and you should receive an email soon

If you want to prioritize your email in the early access list, you can fill out our quick questionnaire.

We publish a changelog every week. You can follow it on our Twitter, @fleetfn, which includes short videos of the main features, and on our changelog page, fleetfn.com/changelog, which has a more detailed description.

Top comments (4)

How much CPU is allocated to each Fleet function? Also, I can't find a billing page anywhere. Can you please share?

Hey Ayyappa, the CPU capacity allocated is proportional to the memory. We will release more memory configuration sets soon. The billing page will also be released in the coming weeks.

Great! That's good to hear!

Any sneak peek at how it compares to existing solutions? Also, are there any options to limit function calls through rate limiting or a maximum billing cap?

Waiting!

We will add more detailed comparisons to the documentation, but the most important points are that Fleet has practically no cold start, that you do not need to add an API Gateway to invoke functions via HTTP, the development experience, and other features of the platform.

We don't have anything planned for this, but we will have on-demand and fixed plans that can help with billing control.