Disclaimer: this is a report generated with my tool: https://github.com/DTeam-Top/tsw-cli. Treat it as an experiment, not formal research. 😄

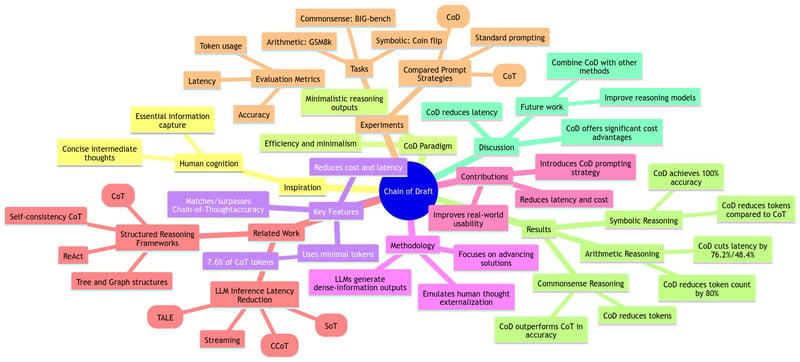

Mindmap

Summary

This paper introduces Chain of Draft (CoD), a prompting strategy for Large Language Models (LLMs) that asks the model to write concise intermediate reasoning drafts, cutting verbosity and keeping only essential information. Modeled on the efficiency of human reasoning, CoD matches or surpasses the accuracy of Chain-of-Thought (CoT) prompting while using significantly fewer tokens, which lowers cost and latency across a range of reasoning tasks.

Main Points

- Introduction of CoD: A new prompting strategy that aligns with human reasoning by prioritizing efficiency and minimalism in intermediate reasoning steps.

- Efficiency and Accuracy: CoD achieves similar or better accuracy compared to CoT while significantly reducing token usage and latency.

- Inspired by Human Cognition: CoD is rooted in how humans externalize thought, focusing on critical information without unnecessary elaboration.

- Experimental Validation: Experiments across arithmetic, commonsense, and symbolic reasoning benchmarks demonstrate CoD's effectiveness.
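To make the difference between CoT and CoD concrete, here is a minimal Python sketch that builds chat-style messages for each prompting style and parses the final answer. The instruction strings are modeled on the prompts described in the paper, but their exact wording, the `####` separator handling, and the helper names `build_messages` and `extract_answer` are assumptions for illustration; no specific LLM API is called.

```python
# Hypothetical prompt templates modeled on the CoT and CoD instructions
# described in the paper; the exact wording here is an assumption.
COT_SYSTEM = (
    "Think step by step to answer the following question. "
    "Return the answer at the end of the response after a separator ####."
)
COD_SYSTEM = (
    "Think step by step, but only keep a minimum draft for each thinking step, "
    "with 5 words at most. "
    "Return the answer at the end of the response after a separator ####."
)

SEPARATOR = "####"


def build_messages(system_prompt: str, question: str) -> list[dict[str, str]]:
    """Build a generic role/content message list usable with most chat LLM APIs."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


def extract_answer(response: str) -> str:
    """Return the text after the last '####' separator, or the whole response if absent."""
    if SEPARATOR not in response:
        return response.strip()
    return response.rsplit(SEPARATOR, 1)[1].strip()


if __name__ == "__main__":
    question = (
        "Jason had 20 lollipops. He gave Denny some lollipops. "
        "Now Jason has 12 lollipops. How many lollipops did Jason give to Denny?"
    )
    messages = build_messages(COD_SYSTEM, question)
    # Hand-written draft-style response for illustration (not real model output).
    draft_response = "20 - x = 12; x = 20 - 12 = 8. #### 8"
    print(extract_answer(draft_response))  # prints "8"
```

The only thing that changes between the two styles is the system instruction; the per-step word cap is what keeps the intermediate drafts short.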

Key Findings

- CoD reduces token usage by as much as 92.4% compared to CoT in certain tasks.

- CoD maintains or improves accuracy compared to standard CoT prompting.

- Significant reduction in latency and computational costs.

- On GSM8K, CoD achieves 91% accuracy for both GPT-4o and Claude 3.5 Sonnet while requiring only about 40 tokens per response, reducing the average output token count by 80% and cutting average latency by 76.2% and 48.4%, respectively.

- In symbolic reasoning, CoD achieves a perfect 100% accuracy while cutting token usage relative to CoT by 68% for GPT-4o and 86% for Claude 3.5 Sonnet.
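The percentages above are relative reductions against the CoT baseline. Below is a small sketch of that arithmetic, assuming reduction is defined as (CoT - CoD) / CoT. The 200-token CoT figure is only an illustrative value implied by "about 40 tokens" plus an 80% cut, not a number taken from the paper, and the function names are hypothetical.

```python
def token_reduction_pct(cot_tokens: float, cod_tokens: float) -> float:
    """Percent of CoT output tokens saved by CoD: 100 * (CoT - CoD) / CoT."""
    if cot_tokens <= 0:
        raise ValueError("cot_tokens must be positive")
    return 100 * (cot_tokens - cod_tokens) / cot_tokens


def share_of_cot_tokens_used(reduction_pct: float) -> float:
    """Share (in percent) of the CoT token budget that CoD still spends."""
    return 100 - reduction_pct


# A 92.4% reduction means CoD spends only 7.6% of the CoT tokens.
print(round(share_of_cot_tokens_used(92.4), 1))  # 7.6
# Illustrative counts: ~40 CoD tokens against a ~200-token CoT answer is an 80% cut.
print(token_reduction_pct(200, 40))  # 80.0
```

The same formula reads the latency figures: a 76.2% latency reduction means a CoD response takes roughly 23.8% of the CoT response time.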

Improvements And Creativity

- The paper addresses the verbosity and computational cost issues associated with Chain-of-Thought (CoT) prompting by introducing Chain of Draft (CoD).

- CoD aligns more closely with human problem-solving strategies by emphasizing concise, essential information in intermediate reasoning steps.

- The study empirically validates CoD across multiple reasoning tasks and models, demonstrating its effectiveness in reducing latency and cost without sacrificing accuracy.

Insights

- CoD's success indicates that effective reasoning in LLMs does not require lengthy outputs.

- The minimalist approach of CoD can be combined with other latency-reducing methods for further optimization.

- Training models with compact reasoning data inspired by CoD could improve reasoning models' interpretability and efficiency.

- CoD offers significant cost advantages, making it appealing in cost-sensitive scenarios.
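A back-of-the-envelope sketch of the cost side, assuming output tokens are billed at a flat per-million-token rate. The request volume, the $10 per million price, and the 200/40 token counts are all hypothetical placeholders, and input (prompt) tokens are ignored, so treat this as an illustration of the scaling rather than a real pricing estimate.

```python
def output_cost(requests: int, tokens_per_response: float, usd_per_million_tokens: float) -> float:
    """Output-token cost in USD, assuming a flat per-million-token price."""
    return requests * tokens_per_response * usd_per_million_tokens / 1_000_000


# Hypothetical workload and price; substitute your own provider's numbers.
REQUESTS = 1_000_000
PRICE_PER_M = 10.0  # hypothetical USD per million output tokens
COT_TOKENS = 200    # illustrative CoT response length
COD_TOKENS = 40     # roughly the "about 40 tokens per response" quoted for CoD

cot_cost = output_cost(REQUESTS, COT_TOKENS, PRICE_PER_M)
cod_cost = output_cost(REQUESTS, COD_TOKENS, PRICE_PER_M)
print(f"CoT: ${cot_cost:,.2f}  CoD: ${cod_cost:,.2f}  saved: ${cot_cost - cod_cost:,.2f}")
# CoT: $2,000.00  CoD: $400.00  saved: $1,600.00
```

Because output cost scales linearly with tokens per response, the relative saving equals the token reduction regardless of the price you plug in.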

References

Chain of Draft: Thinking Faster by Writing Less

Report generated by TSW-X

Advanced Research Systems Division

Date: 2025-03-01 13:44:00
