Overview of My Submission

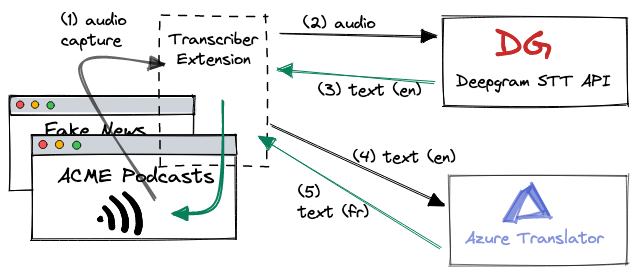

A simple browser extension that can transcribe and translate audio on any web page. The extension works by capturing audio on the web page and streaming it to Deepgram's Speech-To-Text API for transcription; the resulting transcript is then sent to Azure's translation service for translation into a target language.

Here's a very high-level overview of the architecture.

Here is a demo showing the extension transcribing and translating audio content from various sites. I was impressed by how fast both Deepgram and Azure were able to transcribe and translate the audio. I think real-time streaming to two separate services is not an ideal solution, but as a prototype, it performed pretty well.

I believe some target use cases for an extension like this would be:

- older content that no one is going to update with new transcriptions or translations

- content from smaller organizations/individuals that lack the resources/ability to provide multiple translations

- when you need translation into a less common language

My goal for this extension is to make content more accessible.

Submission Category:

I'm submitting this under the "Accessibility Advocates" category, based on its simple function of making content more accessible.

Link to Code on GitHub

ikumen/transcribe-and-translate

Simple browser extension that can transcribe and translate any web page with audio content.

Transcribe and Translate

A simple browser extension that can transcribe and translate audio from any web page. The extension works by capturing audio on the web page, streaming it to Deepgram's Speech-To-Text API for transcription, and then sending the transcript to Azure's translation service for a final translation into a target language. It is my entry to the Deepgram + DEV hackathon.

Development Setup

The project contains two parts: the main extension source and an API service proxy responsible for requesting short-lived access tokens for the extension to use.

|____extension

| |____background.js

| |____icons

| | |____speaker-48.png

| |____manifest.json

| |____content.js

|

|____service-proxy

| |____mvnw.cmd

| |____pom.xml

| |____src

| |____....

|

|____LICENSE

|____README.md

Locally Test Extension

The extension uses some Chrome-specific APIs, so it will only work on Chromium-based browsers (e.g., Chrome, Edge). To install it locally, simply:

-> Manage extensions -> Load unpacked -> select the directory…

How I Built the Extension

This is a very brief overview of how I built the extension.

Deepgram Speech-to-Text

I used Deepgram's Speech-to-Text service to handle the transcribing. It was my first time using Deepgram, but I was able to get up and running fairly quickly. They have great documentation and lots of tutorials to help familiarize you with their API. Basically you need to:

- create an account, generate an API key

- grab one of the SDKs or hit up their REST endpoint directly

Here is an example of how to stream audio data to Deepgram using their streaming (WebSocket) endpoint.

const socket = new WebSocket('wss://api.deepgram.com/v1/listen', ['token', token.key]);

mediaRecorder.ondataavailable = function(evt) {

if (socket && socket.readyState === socket.OPEN) {

socket.send(evt.data); // forward the recorded audio chunk to Deepgram

}

}

socket.onmessage = function(results) {

// parse the results from Deepgram

}
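Deepgram's streaming endpoint also accepts options as query parameters. As a rough sketch, assuming we want a fixed source language and punctuation (the helper name `buildDeepgramUrl` and the option values are my own illustration, not what the extension actually ships with), the URL could be assembled like this:

```javascript
// Sketch: build the Deepgram streaming URL with optional query parameters.
// `buildDeepgramUrl` is a hypothetical helper; the options are example values.
function buildDeepgramUrl(options = {}) {
  const url = new URL('wss://api.deepgram.com/v1/listen');
  for (const [name, value] of Object.entries(options)) {
    // Skip unset options so they don't end up as "name=undefined"
    if (value !== undefined && value !== null) {
      url.searchParams.set(name, String(value));
    }
  }
  return url.toString();
}

const deepgramUrl = buildDeepgramUrl({ language: 'en-US', punctuate: true });
// In the extension: new WebSocket(deepgramUrl, ['token', token.key]);
```

Keeping the options in one object makes it easy to experiment with different settings without touching the WebSocket code.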

Azure Translator

I used Azure's Translator service for translating the resulting transcripts from Deepgram STT to a target language. Again, similar to Deepgram, you'll need to:

- create an Azure account, and configure a translator service (an API subscription key will be generated for you)

- grab one of the SDKs or hit up their REST endpoint directly

To use the translation service, it's just a request to the translator REST endpoint.

fetch(`https://api.cognitive.microsofttranslator.com/translate?api-version=3.0&to=${toLang}`, {

method: 'POST',

headers: {

'Authorization': 'Bearer ' + token.key,

'Ocp-Apim-Subscription-Region': token.region,

'Content-Type': 'application/json; charset=UTF-8' // Content-Length is set by the browser, so we don't send it ourselves

},

body: data

}).then(resp => resp.json())

.then(handleTranslationResults);
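To make the request easier to reason about (and to test without hitting the network), the pieces passed to `fetch` can be built separately. This is only a sketch: `buildTranslateRequest` is a hypothetical helper, and the `token` object is assumed to have the same `key` and `region` fields used above.

```javascript
// Sketch: assemble the URL and fetch options for a Translator request.
// `buildTranslateRequest` is a hypothetical helper, not part of any SDK.
function buildTranslateRequest(transcript, toLang, token) {
  // URLSearchParams takes care of encoding the target language
  const params = new URLSearchParams({ 'api-version': '3.0', to: toLang });
  return {
    url: `https://api.cognitive.microsofttranslator.com/translate?${params}`,
    init: {
      method: 'POST',
      headers: {
        'Authorization': 'Bearer ' + token.key,
        'Ocp-Apim-Subscription-Region': token.region,
        'Content-Type': 'application/json; charset=UTF-8'
      },
      // The Translator API expects an array of {Text: ...} objects
      body: JSON.stringify([{ Text: transcript }])
    }
  };
}

// Usage in the extension (browser only):
// const { url, init } = buildTranslateRequest(transcript, toLang, token);
// fetch(url, init).then(resp => resp.json()).then(handleTranslationResults);
```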

Browser Extensions

This was my first time developing a browser extension, so I'll do my best to explain how it works. I used the following components:

- background scripts are where you put long-running or complex logic, or code that may need access to the underlying browser

- content scripts are sandboxed scripts that run in the context of a web page (e.g., a tab); they are mostly used for display and for collecting data from the web page.

- browser action is an icon representing your extension, and a common way for users to interact with the extension.

The background scripts and content scripts work in their own respective contexts/scopes. Communication between a web page, content scripts and background scripts is all done with an event-driven messaging system. For example:

// Sending data from web page to an extension's content script

window.postMessage({type: 'some-action', data: ...});

// Listening to a web page from a content script

window.addEventListener('message', (evt) => {

...

});
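Since every message carries a `type`, one way to keep listeners tidy is a small router that maps each type to a handler. This is just a sketch; `createMessageRouter` and the handler names are hypothetical and not part of the extension APIs.

```javascript
// Sketch: dispatch messages to handlers based on their `type` field.
// `createMessageRouter` is a hypothetical helper.
function createMessageRouter(handlers) {
  return function routeMessage(message) {
    // Ignore anything that isn't a message we explicitly registered for
    if (!message || !Object.prototype.hasOwnProperty.call(handlers, message.type)) {
      return false;
    }
    const handler = handlers[message.type];
    if (typeof handler !== 'function') {
      return false;
    }
    handler(message);
    return true;
  };
}

const routeMessage = createMessageRouter({
  'some-action': (message) => console.log('received', message.data)
});

// In a content script (browser only), only accept messages from this page:
// window.addEventListener('message', (evt) => {
//   if (evt.source === window) routeMessage(evt.data);
// });
```

The router returns `false` for unknown messages, which matters because any script on the page can call `window.postMessage`.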

In summary, this was just a brief overview of some of the browser extension features/components used in our extension. Later we'll see how they are used in our implementation.

Functional Components

So far we've seen how to set up and use both Deepgram's Speech-to-Text and Azure's Translator services. We also touched briefly on some common browser extension components and their functions. Next we'll define functionally how our extension should work.

- user clicks on the browser action, which activates/opens our extension if it's not running or closes it if it's already running

- detect when we've opened/switched to a tab, and stop our extension if it was open for a previous tab

- if we need to close the extension

  - try to shut down the resources we're using (e.g., MediaRecorder, WebSocket)

  - remove the translation results on the web page

- if we need to open the extension

  - create the translation results container and display it on the web page

  - start capturing audio for the current web page

  - create the MediaRecorder to listen to the audio

  - create the WebSocket to send the audio to Deepgram

- send streaming audio to Deepgram whenever the MediaRecorder captures any audio

- send transcript to Azure Translator whenever we get a transcript

- send the translation results to content script for display whenever we get a translation

This list is not exhaustive, but it describes the key functionality of our extension. Let's take a look at each item to see how it could be implemented.

Activating the Extension

To activate the extension from the browser action, we add a listener—when triggered, it will either open or close our extension.

chrome.browserAction.onClicked.addListener(function() {

if (someStateAboutCurrentTab.isOpen) {

// ... handle closing the extension

} else {

// ... handle opening the extension

}

});
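The `someStateAboutCurrentTab` placeholder above could be as simple as a set of tab ids. Here is a minimal sketch, assuming the open/close logic is passed in as callbacks (`toggleExtension`, `openTabs`, and `openExtension` are hypothetical names):

```javascript
// Sketch: remember which tabs currently have the extension open (in memory only).
const openTabs = new Set();

// Flip the open/closed state for a tab and report which action was taken.
function toggleExtension(tabId, handlers) {
  if (openTabs.has(tabId)) {
    openTabs.delete(tabId);
    handlers.close(tabId);
    return 'closed';
  }
  openTabs.add(tabId);
  handlers.open(tabId);
  return 'opened';
}

// In the background script (browser only):
// chrome.browserAction.onClicked.addListener((tab) => {
//   toggleExtension(tab.id, { open: openExtension, close: closeExtension });
// });
```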

Handle Opening or Switching to a Tab

Browsers can have multiple tabs, but our extension will only work on one tab at a time (i.e., the currently active tab). If there was a previous tab, we always force the extension closed for it, regardless of whether it was actually open there, just to make sure.

chrome.tabs.onActivated.addListener(function (tabInfo) {

prevTabId = activeTabId;

activeTabId = tabInfo.tabId;

if (prevTabId) {

closeExtension(prevTabId);

}

});

Closing our Extension

To close the extension, we should clean up resources (e.g., WebSocket, MediaRecorder) and notify the content script to remove the translation results container.

function cleanUpResources() {

if (socket && socket.readyState !== WebSocket.CLOSED) { // readyState is numeric, unlike MediaRecorder.state

socket.close();

}

if (mediaRecorder && mediaRecorder.state !== 'inactive') {

mediaRecorder.stop();

}

}

function notifyContentScriptToClose() {

chrome.tabs.sendMessage(prevTabId, {type: 'close'});

}
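One thing the cleanup above doesn't cover is the captured stream itself. As a hedged sketch, assuming the background script keeps a reference to the `MediaStream` it got from tabCapture (`releaseResources` and its argument shape are hypothetical), the tracks could be stopped as well so the tab is no longer being captured:

```javascript
// Numeric value of WebSocket.CLOSED, spelled out so the sketch has no browser dependency
const WEBSOCKET_CLOSED = 3;

// Sketch: release everything tied to an active transcription session.
function releaseResources({ socket, mediaRecorder, stream }) {
  if (mediaRecorder && mediaRecorder.state !== 'inactive') {
    mediaRecorder.stop();
  }
  if (stream) {
    // Stopping every track ends the capture of the tab's audio
    stream.getTracks().forEach((track) => track.stop());
  }
  if (socket && socket.readyState !== WEBSOCKET_CLOSED) {
    socket.close();
  }
}
```

Each argument is optional, so the function is safe to call even if opening the extension failed halfway through.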

Content Scripts Listening to Events

The purpose of the content scripts is to display our translations to the user, and to notify the background script when the user prefers a new target translation language.

Here's an example of how we could display/hide translation results.

// Create the div that will display the translations, and add to the body

function createTranslationDisplay() {

const template = document.createElement('template');

template.innerHTML = '<div id="translations"></div>';

document.body.append(template.content.firstChild);

}

function hideTranslationDisplay() {

document.getElementById('translations').style.display = 'none';

}

function showTranslationDisplay(translation) {

const div = document.getElementById('translations');

div.style.display = 'block';

div.innerText = translation;

}

Here's how a content script can handle receiving new translations, or events (e.g., close, open) from the background script.

function onBackgroundMessage(evt, sender, callback) {

if (evt.type === 'close') {

hideTranslationDisplay();

} else if (evt.type === 'translation') {

showTranslationDisplay(evt.text); // the background script sends {type: 'translation', text}

} // ... handle any other event types here

}

chrome.runtime.onMessage.addListener(onBackgroundMessage);

Capturing Audio

To capture audio, we use the tabCapture API.

chrome.tabCapture.capture(

{audio: true, video: false},

function (stream) {

// Once a stream has been established, we need to make

// sure it continues on to its original destination (e.g., your speaker device), but we can now also record it

const audioContext = new AudioContext();

audioContext.createMediaStreamSource(stream)

.connect(audioContext.destination);

}

);
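The capture callback can also be handed no stream at all, in which case `chrome.runtime.lastError` describes what went wrong. Here is a small sketch of handling that; `captureTabAudio` is a hypothetical wrapper, and the `chrome` object is passed in as a parameter only so the function can be exercised outside the browser.

```javascript
// Sketch: wrap tabCapture so a failed capture is reported instead of ignored.
// `chromeApi` is the global `chrome` object in a real background script.
function captureTabAudio(chromeApi, onStream, onError) {
  chromeApi.tabCapture.capture({ audio: true, video: false }, (stream) => {
    if (!stream) {
      const reason = chromeApi.runtime.lastError;
      onError(new Error(reason ? reason.message : 'Tab capture returned no stream'));
      return;
    }
    onStream(stream);
  });
}

// In the background script (browser only):
// captureTabAudio(chrome, startAudioTranscription, (err) => console.warn(err.message));
```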

Note: our transcriber and translator extension was developed against Chrome-specific extension APIs, so it will only work in Chromium-based browsers (e.g., Chrome, Edge).

Recording Audio Stream for Transcribing

We've just seen how to capture the audio from any web page. Now we need to have our extension use it to start the transcription process.

// Receives an audio stream from the tabCapture

function startAudioTranscription(stream) {

mediaRecorder = new MediaRecorder(stream, {mimeType: 'audio/webm'});

// Start a connection to Deepgram for streaming audio

socket = new WebSocket(deepgramEndpoint, ['token', key]);

socket.onmessage = handleTranscriptionResults;

mediaRecorder.ondataavailable = function (evt) {

if (socket && socket.readyState === socket.OPEN) {

socket.send(evt.data); // audio blob

}

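};

// Start recording; without this no audio chunks are emitted (the 250 ms chunk interval is an assumed value)

mediaRecorder.start(250);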

}
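The recorder above hardcodes `audio/webm`. If you'd rather not assume the format is available, `MediaRecorder.isTypeSupported` can be used to pick one. A minimal sketch, where `pickMimeType` and the candidate list are my own illustration:

```javascript
// Sketch: return the first candidate the recorder supports, or null if none is.
// `isSupported` is MediaRecorder.isTypeSupported in the browser.
function pickMimeType(candidates, isSupported) {
  return candidates.find((type) => isSupported(type)) || null;
}

// In the background script (browser only):
// const mimeType = pickMimeType(
//   ['audio/webm;codecs=opus', 'audio/webm'],
//   (type) => MediaRecorder.isTypeSupported(type)
// );
// mediaRecorder = new MediaRecorder(stream, mimeType ? { mimeType } : undefined);
```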

Transcriptions to Translation

Next, on each successful transcription from Deepgram's Speech-to-Text service, we take the transcript and send it off to Azure's Translator service.

function translate(transcript) {

const bodyData = JSON.stringify([{Text: transcript}]);

fetch(azTranslatorEndpoint, {

method: 'POST',

headers: {

//... auth stuff

'Content-Type': 'application/json; charset=UTF-8' // Content-Length is set by the browser, so we don't send it ourselves

},

body: bodyData

})

.then(resp => resp.json())

.then(handleTranslationResults);

}

function handleTranscriptionResults(results) {

const data = JSON.parse(results.data);

if (data.channel && data.channel.alternatives) { // some messages may not carry a channel

const transcript = data.channel.alternatives.map(t => t.transcript).join(' ');

translate(transcript);

}

}
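Not every message from Deepgram is worth translating: some have an empty transcript, some have no `channel` at all, and with interim results enabled some are flagged `is_final: false`. Here is a sketch of a stricter parser under those assumptions. Note that `extractFinalTranscript` is a hypothetical helper and, unlike the version above, it only uses the first alternative.

```javascript
// Sketch: return a transcript worth translating, or null if the message should be skipped.
function extractFinalTranscript(rawMessage) {
  let data;
  try {
    data = JSON.parse(rawMessage);
  } catch (err) {
    return null; // not JSON, nothing to translate
  }
  const alternatives = data && data.channel && data.channel.alternatives;
  if (!Array.isArray(alternatives) || alternatives.length === 0) {
    return null; // e.g. a message without transcription results
  }
  if (data.is_final === false) {
    return null; // interim result, a final one will follow
  }
  const transcript = (alternatives[0].transcript || '').trim();
  return transcript.length > 0 ? transcript : null;
}

// Usage in the background script:
// socket.onmessage = (results) => {
//   const transcript = extractFinalTranscript(results.data);
//   if (transcript) translate(transcript);
// };
```

Skipping empty and interim messages also cuts down on the number of calls made to the translation service.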

Finally, to display a successful translation, we need to send the translation from the background script to the content script.

function handleTranslationResults(results) {

if (results && results[0].translations) {

const text = results[0].translations[0].text;

chrome.tabs.sendMessage(tabId, {type: 'translation', text});

}

}
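When a request fails, the Translator service responds with an error object rather than an array, so indexing into `results[0]` directly can throw. A more defensive sketch (with `extractTranslation` being a hypothetical helper) could look like this:

```javascript
// Sketch: pull the first translated text out of a Translator response, or return null.
function extractTranslation(results) {
  if (!Array.isArray(results) || results.length === 0) {
    return null; // e.g. an {error: ...} object instead of a result array
  }
  const translations = results[0] && results[0].translations;
  if (!Array.isArray(translations) || translations.length === 0) {
    return null;
  }
  const text = translations[0].text;
  return typeof text === 'string' && text.length > 0 ? text : null;
}

// Usage in the background script:
// const text = extractTranslation(results);
// if (text) chrome.tabs.sendMessage(tabId, {type: 'translation', text});
```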

That wraps up my brief overview of how I built the transcribe and translate extension. You can check out the full source code at the GitHub repo.

https://github.com/ikumen/transcribe-and-translate/tree/main/extension

Conclusion

Phew, we made it :-). Thanks for checking out my submission. I hope you liked it and, more importantly, learned a little about Deepgram and how browser extensions work.
