Erlang is often referred to as the “concurrency oriented programming language”.

How did it get this name? How can a language created in the 80s for the telecom industry help us now?

Erlang or Erlang/OTP (Open Telecom Platform) was built in 1986 at Ericsson to handle the growing telephone user base. It was designed with the aim of improving the development of telephony applications. It is great for building distributed, fault tolerant and highly available systems.

So, what relevance does a technology built in the 80s have for us? It turns out that the very reasons Erlang was built are extremely useful for web servers today. We need applications to be fast and reliable - and we want them now! Fortunately that’s possible with Phoenix, the web framework built on top of Elixir (a modern language that runs on the Erlang VM). It has great features like hot code swapping and real time views - without writing JS!

How is all that possible? It’s largely due to how the language was built to be concurrent from the bottom up - thanks to BEAM, the Erlang VM.

Before we go any further, let’s recap a few concepts from CS 101 - the Operating System!

Concurrency

In order to understand why concurrency in the Erlang VM is so special, we need to understand how the operating system processes instructions/programs.

The CPU is the part of the machine that does the work. Its sole responsibility is to execute instructions on behalf of processes. A process is an isolated unit of execution with its own memory, context and file descriptors. A process is in turn made up of one or more threads, each of which is often described with the overused definition “a lightweight process”.

The CPU, directed by the operating system’s scheduler, executes these processes as efficiently as it can. It can execute processes one after the other. This is known as “sequential execution”. This is the most basic and oldest method of execution. It’s also pretty useless for us today - even the refrigerators we use today can do more!

You might think that since your modern day computer allows you to do more than one thing at a time, the CPU is executing processes in parallel. Something like this,

However, on a single core this is far from the truth. True parallelism needs more than one core, and there’s a whole plethora of bugs that crop up when we go down this path and let parallel code share data.

The next best thing is concurrency. Concurrent execution means breaking up processes into multiple tiny bits and switching between them while executing. This happens so fast that it gives the user the illusion of multiple processes being executed at the same time - while cleverly circumventing the problems of parallelism.

It looks something like this,

You can see that the CPU switches between process 1 and 2. This is known as context switching. It is a pretty heavy task as the CPU needs to store all the information and state of a process to memory before switching - and then load it back when it needs to execute again. However we’ve worked on this problem for so many years that we’ve got insanely good at it.

One last thing …

The speed of program execution depends on the CPU clock speed. The faster the processor, the faster your computer is. Moore’s law states that the number of transistors on an affordable CPU doubles roughly every two years. However, if you’ve noticed, clock speeds haven’t been getting much faster in the past few years. That’s because we’ve hit a physical bump in the road. This happened when we realized that going much higher than 4GHz is very difficult, largely because of heat and power consumption. Even the speed of light became a constraint on how far a signal can travel in one clock cycle! This is when we decided to do multi-core processing instead. We scaled horizontally, instead of vertically. We added more cores, instead of making a single core more powerful.

Okay, I know we’ve covered a lot of material here. But what’s relevant for you to remember is that context switching is heavy and that we’ve moved on to multi-core processing now. Let’s see what tricks Erlang has up its sleeve that make it the concurrency oriented language.

Concurrency Models

In the previous section we deduced that concurrency is the best way forward to run many tasks at once. Over the years various languages have introduced different models to achieve concurrency. Let’s have a look at three of the most significant ones relevant to our topic of discussion.

1. Shared Memory Model

This is a commonly used model in popular programming languages like Java and C#. It involves different threads (or processes) accessing the same block of memory to store data and communicate with each other. It allows context switching to be less heavy.

Though this sounds great in theory, it causes some unfriendly situations when two or more threads try to access the same shared memory block at the same time. This leads to race conditions. In order to overcome this, we have mutexes, locks and synchronization. However, these bring problems of their own, such as deadlocks, and make the system more complex and difficult to scale.

2. Actor Model

This is the model used by Erlang to achieve concurrency. Other languages offer it through libraries, such as Akka for Scala and Java or Actix for Rust. It depends on isolating processes as much as possible and reducing communication between them to message passing. Each process is known as an actor.

Actors communicate with each other by sending messages. Messages can be sent at any time and sending is non-blocking. These messages are then stored in the receiving actor’s mailbox. In order to read messages, actors have to perform a blocking read action.

3. Communicating Sequential Processes

This is a highly efficient concurrency model used by Go. It’s similar to the actor model in that it uses message passing. However, unlike in the actor model where only receiving messages is blocking, classic CSP (and Go’s unbuffered channels) requires both sending and receiving to be blocking.

In CSP, a separate store called the channel is used to transfer incoming and outgoing messages. Processes don’t communicate with each other directly, but with the channel layer in between.

Actor Model

Let’s take a deeper look into the actor model.

An actor is a primitive unit of concurrency. Each actor has an identity that other actors use to send it messages. When messages are received, they are stored in the actor’s mailbox. An actor must make an explicit attempt to read the contents of its mailbox. Each actor is completely isolated from the others and shares no memory with them. This completely eradicates the need for complex synchronization mechanisms or locks.
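To make the mailbox idea concrete, here is a minimal sketch of one round trip between two actors. It assumes only the built-in `spawn`, `send` and `receive`; the message shapes `{:ping, from}` and `{:pong, pid}` are made up for illustration.

```elixir
# The script itself runs in a process, which plays the role of the first actor.
parent = self()

# The second actor: it blocks in `receive` until a matching message
# shows up in its mailbox, then replies to the sender.
echo =
  spawn(fn ->
    receive do
      {:ping, from} -> send(from, {:pong, self()})
    end
  end)

# `send` does not wait for the receiver; the message simply lands in its mailbox.
send(echo, {:ping, parent})

# Reading our own mailbox is the explicit, blocking step.
# The `after` clause is only there so the sketch cannot hang forever.
reply =
  receive do
    {:pong, ^echo} -> :pong
  after
    1_000 -> :timeout
  end

IO.inspect(reply)
```

The two processes never touch each other's memory; the only thing that crosses the boundary is the message.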

An actor can do three things when it receives a message,

- Create more actors

- Send messages to other actors

- Designate what to do with the next message

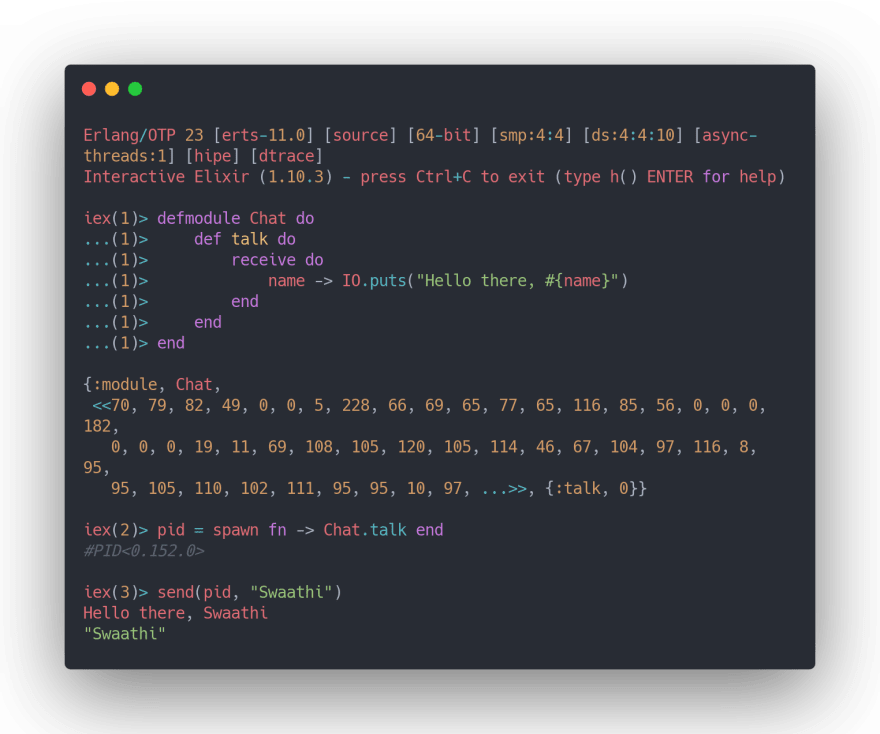

The first two are pretty straightforward. Let’s look at their representation at the code level.

Spawning a new process in Elixir is exactly that: you spawn it. Unlike other languages, which use OS threads to deal with concurrency, Elixir uses processes.

The key thing to remember is that these are Erlang processes, which are much more lightweight than their OS counterparts. An Erlang process is often quoted as being around 2000 times more lightweight than an OS process. There could be hundreds of thousands of Erlang processes in a system and all would be well.

Erlang processes also have a PID attached to them. Using this PID, you can extract information about the memory a process occupies, the function it’s running and much more. Using the PID, you can also send messages to processes and communicate.
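As a rough sketch of what spawning looks like (not the article's original snippet, which is not reproduced here), the example below starts one process with `spawn/1` and has it greet its parent. The `{:hello, pid}` message shape is an assumption for illustration.

```elixir
parent = self()

# spawn/1 starts a new Erlang process running the given function
# and returns the new process's PID straight away.
pid = spawn(fn -> send(parent, {:hello, self()}) end)

greeting =
  receive do
    {:hello, ^pid} -> "hello from #{inspect(pid)}"
  after
    # Safety net so the sketch cannot block forever.
    1_000 -> "no reply"
  end

IO.puts(greeting)
IO.puts("different processes? #{parent != pid}")
```

The parent carries on as soon as `spawn/1` returns; it only waits when it chooses to read its mailbox.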
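Here is a small sketch of inspecting a process through its PID, assuming the standard `Process.info/2`, `Process.monitor/1` and `Process.alive?/1` functions. The `:stop` message is a made-up convention for this example.

```elixir
# A process that simply waits until it is told to stop.
pid =
  spawn(fn ->
    receive do
      :stop -> :ok
    end
  end)

# Process.info/2 returns a {key, value} tuple for a live process.
{:memory, bytes} = Process.info(pid, :memory)
{:message_queue_len, queued} = Process.info(pid, :message_queue_len)
IO.puts("memory: #{bytes} bytes, messages waiting: #{queued}")

# A monitor tells us when the process has exited.
ref = Process.monitor(pid)
send(pid, :stop)

receive do
  {:DOWN, ^ref, :process, ^pid, _reason} -> :ok
after
  1_000 -> :timeout
end

still_alive = Process.alive?(pid)
IO.inspect(still_alive)
```

The exact memory figure varies by VM version and platform, so treat it as an order-of-magnitude indicator rather than a fixed number.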

Let’s take a look at the final responsibility of an actor - designation.

Each process also has its own internal state. Every time we send a message to the process, it does not restart the function with default values; rather, it retains the state of the running process. Inherently, each actor is able to access its own set of memory and keep track of it.

These three properties allow Erlang processes to be very lightweight. They remove the need for complex synchronization techniques and keep the memory cost of interprocess communication low. All this contributes to high availability.

At this point, it’s a great idea to watch this quick 4 minute video on the actor model. After all, a picture is worth a thousand words, and a video even more! If you’ve got the time and want something in much more detail, have a look at this video where the creator of the Actor Model explains all that I’ve said in such an eloquent way.
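One common way to express "designate what to do with the next message" is a function that calls itself with the new state. The sketch below assumes a hypothetical `Counter` module and made-up `:increment` and `{:get, caller}` messages; it is an illustration, not the article's original snippet.

```elixir
defmodule Counter do
  # Hypothetical module for illustration: the state is just an integer.
  def start(initial \\ 0), do: spawn(fn -> loop(initial) end)

  # Ask the process for its current count and wait for the answer.
  def get(pid) do
    send(pid, {:get, self()})

    receive do
      {:count, count} -> count
    after
      1_000 -> :timeout
    end
  end

  # Each call to loop/1 handles exactly one message. Calling loop/1 again
  # with a (possibly new) count decides how the next message is handled.
  defp loop(count) do
    receive do
      :increment ->
        loop(count + 1)

      {:get, caller} ->
        send(caller, {:count, count})
        loop(count)
    end
  end
end

pid = Counter.start()
send(pid, :increment)
send(pid, :increment)
IO.inspect(Counter.get(pid))
```

The count lives only in the argument of `loop/1`, inside that one process, so no other process can read or change it except by sending a message.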

BEAM

Well after so many topics, we’ve finally come to the actual Erlang VM - BEAM!

Erlang/Elixir source files are compiled into bytecode (.beam) files, and the BEAM VM was built both to execute that bytecode and to schedule Erlang processes on the CPU. This level of control gives it a huge advantage in running processes efficiently and concurrently.

When the VM starts, its first step is to start the scheduler. It is responsible for running Erlang processes concurrently on the CPU. Since the processes are all maintained and scheduled by BEAM, we have more control over what happens when a process fails (fault tolerance), and we are able to use memory more efficiently and context switch more cheaply.

BEAM also takes full advantage of the hardware. It starts a Scheduler for each available core allowing processes to run freely and efficiently, while still being able to communicate with each other.

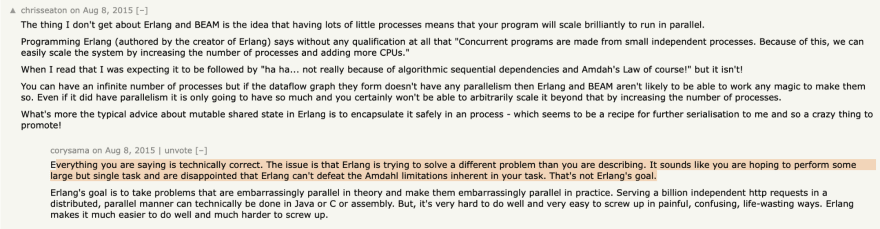
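To get a feel for this, the sketch below prints how many schedulers the VM is running and then spawns ten thousand short-lived processes. The count of 10,000 and the `{:done, i}` message are arbitrary choices for the example.

```elixir
# Typically one scheduler per available core.
IO.puts("schedulers online: #{System.schedulers_online()}")

parent = self()
n = 10_000

# Each process does a trivial amount of work and reports back.
for i <- 1..n do
  spawn(fn -> send(parent, {:done, i}) end)
end

# Collect one message per process and add up the payloads.
total =
  Enum.reduce(1..n, 0, fn _, acc ->
    receive do
      {:done, i} -> acc + i
    after
      # Safety net: stop waiting if a message never arrives.
      5_000 -> acc
    end
  end)

IO.inspect(total)
```

Messages can arrive in any order because the processes run concurrently across schedulers, which is why the example sums them instead of relying on ordering.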

BEAM allows Erlang to spawn thousands of processes and achieve high levels of concurrency. There is an awesome conversation on HackerNews about the idea that thousands of processes lead to efficient concurrency.

Have a read!

Contributing Characteristics

Stay calm, we’re almost at the end!

Erlang concurrency is largely due to the Actor model and BEAM. However, there are many other constructs that ensure stability as well. I’m going to give you small pointers that you can pick up for further reading.

1. Supervisors

There is a special kind of Erlang process called the supervisor. Its goal is to decide what to do when a process it watches fails. Typically it restarts the failed process from its initial state so that it can carry on processing.
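As a minimal sketch of that reset behaviour, the example below supervises a single `Agent` holding a number, kills it, and checks that it comes back with its initial value. The `:counter` name and the `RestartDemo` polling helper are hypothetical and exist only for this example; a real application would usually define the supervisor in its own module.

```elixir
defmodule RestartDemo do
  # Hypothetical helper: poll until `name` is registered to a new pid.
  def await_restart(name, old_pid, attempts \\ 100)
  def await_restart(_name, _old_pid, 0), do: :timeout

  def await_restart(name, old_pid, attempts) do
    case Process.whereis(name) do
      pid when is_pid(pid) and pid != old_pid ->
        pid

      _ ->
        Process.sleep(10)
        await_restart(name, old_pid, attempts - 1)
    end
  end
end

# One supervised child: an Agent whose initial state is 0.
children = [
  %{id: :counter, start: {Agent, :start_link, [fn -> 0 end, [name: :counter]]}}
]

{:ok, _sup} = Supervisor.start_link(children, strategy: :one_for_one)

Agent.update(:counter, &(&1 + 5))
before_crash = Agent.get(:counter, & &1)

# Simulate a failure by killing the child process.
old_pid = Process.whereis(:counter)
Process.exit(old_pid, :kill)
new_pid = RestartDemo.await_restart(:counter, old_pid)

# The supervisor has started a fresh child from its initial state.
after_restart = Agent.get(:counter, & &1)
IO.inspect({before_crash, after_restart, new_pid != old_pid})
```

The `:one_for_one` strategy restarts only the child that failed; other strategies restart siblings as well.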

2. Fault Tolerance

The late Joe Armstrong, co-creator of Erlang, said the famous line, “Let it crash”. He designed the fault tolerant language not with the goal of preventing errors from happening, but to build structures that handle error scenarios.

The entire thread is heartwarming, give it a read!

3. Distribution

Finally, Erlang makes it extremely easy to build distributed systems. Each Erlang instance can act as a node on a different machine, and nodes communicate with each other just as easily as local processes do.

4. No GIL!

Unlike some other popular languages, most famously Ruby and Python in their reference implementations, Erlang does not have to worry about the Global Interpreter Lock. The GIL ensures that only one Ruby/Python thread runs at a time per process. This is a huge blow to concurrency.

App servers like Passenger try to overcome this by creating multiple OS processes and running them on multiple cores. However, as we saw before, OS processes are expensive to manage.

Conclusion

Erlang is a beautifully constructed and well thought-out language. It has truly stood the test of time and is more relevant than ever today.

Top comments (7)

Amazing article. I had to read many articles in order to learn these stuff but you combined all necessary information about this subject into one, simple to read and easy to follow one. Good job Swaath Kakarla @imswaathik !

Wow thanks so much! Means a lot!

You are welcomeee have a nice day!!

Good article.

I'm a beginner in Elixir. To help more beginner, I have made a working example with source code in github.

🕷 epsi.bitbucket.io/lambda/2020/11/1...

First the process module

And then run both

I hope this could help other who seeks for other case example.

🙏🏽

Thank you for posting with thorough explanation.

I would say it's not true that concurrency means that things are executed sequentially.

Concurrency means that, and Wikipedia words it really well: "... is the ability of different parts or units of a program, algorithm, or problem to be executed out-of-order or in partial order, without affecting the final outcome."

Importantly, it implies that these sliced up parts of a program can also be reordered, partly executed, continued and parallelized(!) at the system's will.

Go for example makes this very clear: Concurrency is concurrency whether or not the amount of OS threads is 1 or > 1.

Basically, one could say that code being concurrent is a prerequisite for it being able to be parallelized.

Hey great post thank you, I think you added the wrong actor model's picture. Nice work tho ;)

Ah damn. Will fix that. Thank you! :)