This is a Plain English Papers summary of a research paper called Depth Anything V2. If you like these kinds of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

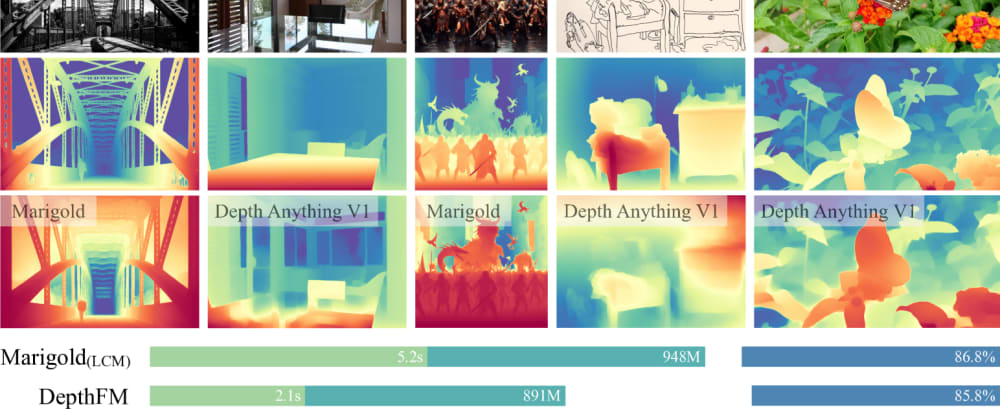

- This paper introduces Depth Anything V2, an improved version of the Depth Anything model for monocular depth estimation.

- The key innovations are training on precisely labeled synthetic images instead of noisily labeled real images, and using large-scale unlabeled real images to close the gap between synthetic training data and real-world scenes.

- The paper builds on its predecessor, Depth Anything V1, and compares against recent approaches that repurpose diffusion-based image generators for monocular depth estimation.

Plain English Explanation

The paper describes an improved version of a model called Depth Anything, which can estimate the depth or distance of objects in a single image. This is a challenging computer vision task, as depth information is not directly available in a 2D image.

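The key innovation in Depth Anything V2 is how it handles training labels. Synthetic images, rendered with computer graphics, come with precise depth labels that capture fine details such as thin structures and object edges, whereas depth labels for real photos are collected with sensors or stereo matching and are often noisy or incomplete. However, synthetic scenes look different from real-world photos and cover a narrower range of content, which limits how well a model trained only on them performs on real data.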

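To overcome this, the researchers first train a large "teacher" model on synthetic images alone. The teacher then automatically labels a large collection of unlabeled real-world photos, and "student" models of various sizes are trained on these automatically labeled (pseudo-labeled) real images. The real photos act as a bridge, letting the final models inherit the precise detail of synthetic labels while learning from the variety of real-world scenes.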

The paper builds on the original Depth Anything model and compares against newer approaches that repurpose diffusion models (a type of generative AI) for depth estimation. Rather than adopting a diffusion model, Depth Anything V2 keeps a conventional depth-prediction network, which the authors report is both more accurate and more than ten times faster than these diffusion-based alternatives.

Technical Explanation

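The paper first revisits the labeled-data design of Depth Anything V1, which trained on labeled real images together with a large pool of unlabeled images. The researchers argue that depth labels for real images, captured by sensors or estimated through stereo matching, are noisy and coarse, which prevents the model from predicting fine details. Synthetic images provide precise labels, but relying on them raises two challenges: they differ in appearance from real photos (a distribution shift), and they cover a limited range of scenes.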

To address this, the paper introduces several key innovations in Depth Anything V2:

Leveraging Large-Scale Unlabeled Data: A teacher model trained only on synthetic images is used to pseudo-label a large collection of unlabeled real-world images, and the final student models are trained on these pseudo-labeled real images. This bridges the gap between synthetic and real data and exposes the models to far more diverse scenes than the synthetic datasets cover.
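The data flow of this teacher-student pseudo-labeling can be sketched in a few lines. This is a toy illustration only: the "models" are hypothetical one-dimensional linear regressors, and `fit_linear` and `pseudo_label_pipeline` are illustrative names, not code from the paper, which trains large vision networks on images.

```python
from typing import Callable, List, Tuple

Model = Callable[[float], float]


def fit_linear(pairs: List[Tuple[float, float]]) -> Model:
    """Least-squares fit of y = a*x + b; a stand-in for 'training' a depth model."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in pairs)
    if var_x == 0:
        raise ValueError("inputs must not all be identical")
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    a = cov_xy / var_x
    b = mean_y - a * mean_x
    return lambda x: a * x + b


def pseudo_label_pipeline(
    synthetic: List[Tuple[float, float]], unlabeled_real: List[float]
) -> Tuple[Model, Model]:
    # 1) Train the teacher only on precisely labeled synthetic samples.
    teacher = fit_linear(synthetic)
    # 2) The teacher labels the unlabeled real samples (pseudo-labels).
    pseudo_labeled = [(x, teacher(x)) for x in unlabeled_real]
    # 3) Train the student only on the pseudo-labeled real samples.
    student = fit_linear(pseudo_labeled)
    return teacher, student
```

For example, `pseudo_label_pipeline([(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)], [0.5, 1.5, 3.0, 4.0])` yields a student that reproduces the teacher's mapping, even though the student never sees a synthetic label directly.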

Synthetic Labeled Data: All labeled real images used in V1 are replaced with images from existing synthetic datasets, such as Hypersim and Virtual KITTI 2, whose precise labels let the model learn sharp edges and thin structures.
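Because a single image only determines depth up to an unknown scale and shift, models of this kind are typically trained with a scale- and shift-invariant loss: the prediction $\hat d$ is first aligned to the label $d$ with the scale $s$ and shift $t$ that minimize $\sum_i (s\,\hat d_i + t - d_i)^2$, and only the remaining error is penalized. The paper uses such a loss (together with a gradient matching loss, not shown here) on its labeled data. The sketch below is one simple variant, assuming least-squares alignment followed by a mean absolute error on flat lists; the paper's exact formulation may differ, and the function names are hypothetical.

```python
from typing import List, Tuple


def align_scale_shift(pred: List[float], target: List[float]) -> Tuple[float, float]:
    """Closed-form least squares for s, t minimizing sum((s*pred + t - target)^2)."""
    n = len(pred)
    mean_p = sum(pred) / n
    mean_t = sum(target) / n
    var_p = sum((p - mean_p) ** 2 for p in pred)
    cov = sum((p - mean_p) * (q - mean_t) for p, q in zip(pred, target))
    # A constant prediction carries no scale information; fall back to s = 0.
    s = cov / var_p if var_p > 0 else 0.0
    t = mean_t - s * mean_p
    return s, t


def ssi_loss(pred: List[float], target: List[float]) -> float:
    """Mean absolute error after optimal scale-and-shift alignment."""
    s, t = align_scale_shift(pred, target)
    return sum(abs(s * p + t - q) for p, q in zip(pred, target)) / len(pred)
```

With this design, any prediction that is an affine transform of the label (for example `target = [2 * p + 5 for p in pred]`) gets zero loss, so the network is free to output depth at whatever scale and offset is convenient.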

Scaling Up the Teacher: The researchers found that synthetic-to-real transfer depends heavily on model capacity, so the teacher uses the largest DINOv2 encoder (ViT-Giant); they report that only this largest encoder transferred well from synthetic training data to real images.

Student Models of Several Sizes: Student models ranging from about 25M to 1.3B parameters are trained on the pseudo-labeled real images, and the regions of each pseudo-labeled image with the largest loss are ignored as likely label noise.
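Pseudo-labels inevitably contain errors, and the paper handles this by ignoring the regions of each pseudo-labeled image with the largest loss. The sketch below shows that idea on a flat list of per-pixel losses; the function name `trimmed_mean_loss` and the default `drop_fraction=0.1` are assumptions for illustration, not the paper's code.

```python
from typing import List


def trimmed_mean_loss(per_pixel_losses: List[float], drop_fraction: float = 0.1) -> float:
    """Average per-pixel losses after discarding the largest `drop_fraction` of them."""
    if not per_pixel_losses:
        raise ValueError("need at least one loss value")
    if not 0.0 <= drop_fraction < 1.0:
        raise ValueError("drop_fraction must be in [0, 1)")
    n = len(per_pixel_losses)
    # Number of highest-loss pixels treated as noisy pseudo-labels.
    n_drop = int(n * drop_fraction)
    kept = sorted(per_pixel_losses)[: n - n_drop]
    return sum(kept) / len(kept)
```

A single badly mislabeled pixel then no longer dominates the gradient: for nine losses of 0.1 and one of 5.0, the plain mean is 0.59, while dropping the top 10% gives 0.1.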

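Through extensive experiments on standard monocular depth estimation benchmarks, together with a new benchmark the authors built with precise annotations and diverse scenes, the paper reports that Depth Anything V2 produces finer and more robust depth predictions than V1, and that it is more accurate and much faster than recent depth models built on Stable Diffusion.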
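Monocular depth benchmarks are commonly scored with metrics such as the absolute relative error (AbsRel) and the δ1 accuracy, the fraction of pixels whose predicted-to-true depth ratio is within 1.25. The sketch below computes both on flat lists. It assumes predictions have already been aligned to the ground truth (relative-depth models are usually aligned by scale and shift first) and is not the paper's evaluation code.

```python
from typing import List


def abs_rel(pred: List[float], gt: List[float]) -> float:
    """Mean of |pred - gt| / gt over pixels with valid (positive) ground truth."""
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    if not pairs:
        raise ValueError("no valid pixels")
    return sum(abs(p - g) / g for p, g in pairs) / len(pairs)


def delta1(pred: List[float], gt: List[float], threshold: float = 1.25) -> float:
    """Fraction of valid pixels with max(pred/gt, gt/pred) < threshold."""
    # Both values must be positive so the ratio is defined in both directions.
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0 and p > 0]
    if not pairs:
        raise ValueError("no valid pixels")
    hits = sum(1 for p, g in pairs if max(p / g, g / p) < threshold)
    return hits / len(pairs)
```

Lower AbsRel and higher δ1 are better; for `pred = [1, 2, 3, 4]` and `gt = [1, 2, 2, 8]`, AbsRel is 0.25 and δ1 is 0.5.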

Critical Analysis

The paper provides a comprehensive and well-designed approach to addressing the limitations of the original Depth Anything model. The researchers have thoughtfully incorporated insights from related work to create a more robust and effective depth estimation system.

One potential limitation is the continued reliance on synthetic data for the teacher's labels, even with pseudo-labeled real images bridging the gap. Synthetic scenes still differ from real-world ones, which could limit the model's performance on certain types of images or environments.

Additionally, the paper does not delve deeply into the potential biases or failure modes of the Depth Anything V2 model. It would be valuable to understand how the model performs on a diverse set of real-world scenes, including challenging cases like occluded objects, unusual lighting conditions, or unconventional camera angles.

Further research could also explore ways to make the model more interpretable and explainable, providing insights into how it is making depth predictions and where it may be prone to errors. This could help developers and users better understand the model's strengths and limitations.

Conclusion

The Depth Anything V2 paper presents a significant advance in monocular depth estimation. By training a high-capacity teacher on precisely labeled synthetic images and transferring its knowledge to student models through large-scale pseudo-labeled real images, it produces finer and more robust depth maps than its predecessor while remaining much faster than diffusion-based depth estimators.

This work has important implications for a wide range of applications, from augmented reality and robotics to computational photography and autonomous vehicles. By enabling accurate depth estimation from single images, Depth Anything V2 could unlock new capabilities and enhance existing technologies in these domains.

As the field of computer vision continues to evolve, the insights and approaches introduced in this paper will likely influence and inspire future research, pushing the boundaries of what's possible in monocular depth estimation and beyond.

