This is a Plain English Papers summary of a research paper called Evaluating the World Model Implicit in a Generative Model. If you like these kinds of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

- This paper proposes a method to evaluate the world model implicit in a generative model.

- The authors argue that generative models often encode an implicit world model, an internal representation of the domain they were trained on, that can be examined and understood.

- By analyzing this world model, researchers can identify the model's biases and limitations, which can guide efforts to improve its performance and safety.

Plain English Explanation

Generative models are a type of machine learning algorithm that can generate new data, like images or text, that looks similar to the data they were trained on. These models often develop an internal representation of the "world" they were trained on, which influences the data they generate.

The researchers in this paper suggest that we can examine this internal world model to better understand the model's biases and limitations. This can help us improve the model's performance and ensure it behaves safely and ethically.

For example, a generative model trained on images of faces might develop an implicit world model that assumes all faces have certain features, like two eyes and a nose. By analyzing this world model, we can identify these assumptions and adjust the model to be more inclusive of diverse facial features.

Similarly, a world model for autonomous driving might make certain assumptions about the behavior of other vehicles or the layout of roads. Understanding these assumptions can help us improve the model's safety and reliability.

Technical Explanation

The paper proposes a framework for evaluating the world model implicit in a generative model. The key steps are:

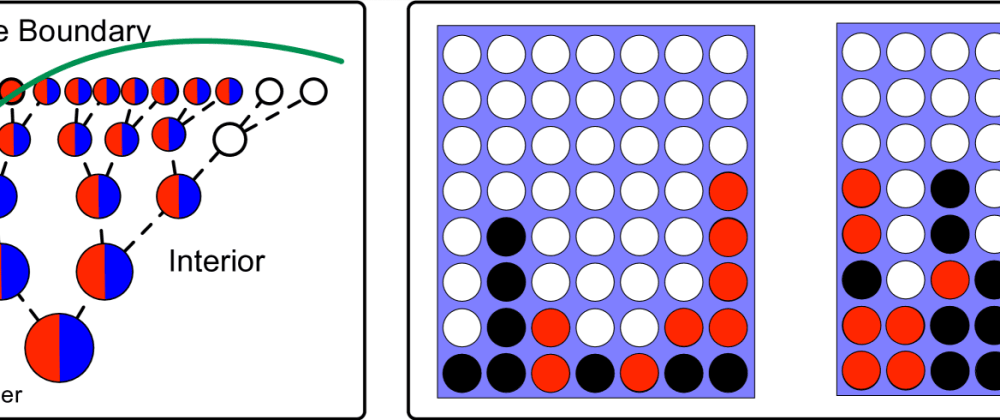

Extracting the world model: The authors show how to extract the world model from a generative model, using techniques like latent space analysis and hierarchical temporal abstractions.
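Latent space analysis can take many forms. One common version is a probe that checks whether a world-state property can be read directly out of a model's internal vectors. The sketch below is a hypothetical illustration, not the authors' procedure: the function names, the nearest-centroid classifier, and the toy "road" labels are all assumptions. In practice the latent vectors would come from a trained model's hidden states.

```python
"""Hypothetical latent-space probe: can a simple classifier recover a
world-state label from a model's latent vectors? (Illustrative sketch.)"""
from collections import defaultdict
from math import dist


def fit_centroids(latents, labels):
    """Average the latent vectors that share each world-state label."""
    sums = {}
    counts = defaultdict(int)
    for vec, label in zip(latents, labels):
        if label not in sums:
            sums[label] = [0.0] * len(vec)
        sums[label] = [s + v for s, v in zip(sums[label], vec)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def probe_accuracy(centroids, latents, labels):
    """Fraction of held-out latents whose nearest centroid has the correct label."""
    correct = 0
    for vec, label in zip(latents, labels):
        predicted = min(centroids, key=lambda c: dist(vec, centroids[c]))
        correct += predicted == label
    return correct / len(labels)


if __name__ == "__main__":
    # Toy, made-up latents; a real probe would use hidden states from a model.
    train_latents = [[0.0, 0.1], [0.2, 0.0], [1.0, 0.9], [0.9, 1.1]]
    train_labels = ["road_clear", "road_clear", "car_ahead", "car_ahead"]
    centroids = fit_centroids(train_latents, train_labels)
    print(probe_accuracy(centroids, [[0.1, 0.1], [1.0, 1.0]], ["road_clear", "car_ahead"]))  # 1.0
```

High probe accuracy suggests the property is encoded in the latent space; it does not by itself show that the model uses that information when generating.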

Evaluating the world model: The extracted world model is then evaluated along various dimensions, such as its comprehensiveness, its alignment with reality, and its biases and limitations.
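To make "alignment with reality" and "comprehensiveness" concrete, here is a minimal sketch for a toy domain whose true rules are known. It assumes the world can be written as a table of allowed state transitions, for example which intersection can follow which on a small road map. The function name, the two scores, and the road map are hypothetical choices for illustration. The paper's own metrics may differ.

```python
"""Hypothetical scoring of a model's implied transitions against known rules."""


def score_world_model(true_transitions, generated):
    """Return (alignment, coverage) for a list of (state, next_state) pairs.

    alignment: share of generated transitions that the true rules allow.
    coverage:  share of allowed transitions the model produced at least once.
    """
    allowed = {(s, n) for s, nexts in true_transitions.items() for n in nexts}
    valid = [pair for pair in generated if pair in allowed]
    alignment = len(valid) / len(generated) if generated else 0.0
    coverage = len(set(valid)) / len(allowed) if allowed else 0.0
    return alignment, coverage


if __name__ == "__main__":
    # Toy road map: from each intersection, the set of legal next intersections.
    roads = {"A": {"B"}, "B": {"A", "C"}, "C": {"B"}}
    # Transitions sampled from a (hypothetical) generative model.
    samples = [("A", "B"), ("B", "C"), ("B", "C"), ("C", "A")]
    print(score_world_model(roads, samples))  # (0.75, 0.5)
```

Reporting the two scores separately is deliberate: a model that repeats a few safe transitions can score high on alignment while still covering only a small part of the world.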

Improving the world model: Based on the evaluation, the authors suggest ways to improve the world model, such as fine-tuning the generative model or incorporating additional training data.
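Targeted improvement depends on knowing where the world model is weakest. A minimal sketch follows. It assumes a hypothetical toy setup in which the world is described by a table of allowed state transitions. It ranks states by how often the model proposes an invalid next step, and the worst ones become candidates for additional training data or targeted fine-tuning. The helper name and the ranking rule are illustrative assumptions, not taken from the paper.

```python
"""Hypothetical helper: find states where more training data may help most."""
from collections import Counter


def weakest_states(true_transitions, generated, k=2):
    """Rank states by the rate of invalid next steps the model proposed.

    Returns up to k (state, error_rate) pairs, worst first. Ties keep the
    order in which states first appear in `generated`. States missing from
    `true_transitions` have no allowed moves, so every proposal counts as an error.
    """
    totals = Counter(s for s, _ in generated)
    errors = Counter(s for s, n in generated if n not in true_transitions.get(s, set()))
    rates = {s: errors[s] / totals[s] for s in totals}
    # sorted() is stable even with reverse=True, so ties keep first-seen order.
    ranked = sorted(rates.items(), key=lambda item: item[1], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    roads = {"A": {"B"}, "B": {"A", "C"}, "C": {"B"}}
    samples = [("A", "B"), ("B", "C"), ("C", "A"), ("C", "A"), ("A", "C")]
    print(weakest_states(roads, samples))  # [('C', 1.0), ('A', 0.5)]
```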

The paper demonstrates the proposed framework on several examples, including language models and image generation models, showing how the analysis of the implicit world model can provide valuable insights.

Critical Analysis

The paper presents a novel and promising approach for evaluating and improving generative models by examining their implicit world models. However, the authors acknowledge that the proposed framework has some limitations:

- Extracting the world model accurately can be challenging, especially for complex models, and may require significant computational resources.

- The evaluation of the world model is largely subjective and may depend on the specific application and desired properties of the model.

- The paper does not provide a comprehensive list of evaluation metrics or a clear way to prioritize different aspects of the world model.

Additionally, the paper does not address the potential ethical concerns around the biases and limitations of the world model, such as the perpetuation of harmful stereotypes or the exclusion of underrepresented groups. Further research is needed to ensure that the analysis of world models leads to the development of more responsible and equitable generative models.

Conclusion

This paper presents a novel framework for evaluating the world model implicit in a generative model. By examining the internal representations developed by these models, researchers can gain valuable insights into their biases, limitations, and potential safety issues.

The proposed approach has the potential to significantly improve the performance and reliability of generative models, especially in critical applications like autonomous driving, medical diagnosis, and content moderation. However, further research is needed to address the technical and ethical challenges of this method.

Overall, this paper represents an important step towards a deeper understanding of the inner workings of generative models and their potential impact on the world.

