This is a Plain English Papers summary of a research paper called Smart AI Routing Method Slashes Processing Time by 6X with Minimal Quality Loss. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

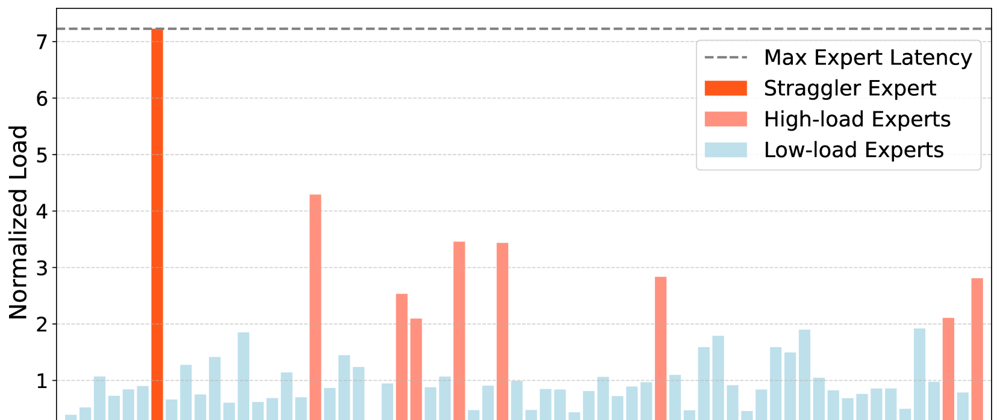

- Mixture of Experts (MoE) models face a "straggler effect" where overused experts create bottlenecks

- Capacity-Aware Inference (CAI) introduces dynamic token routing based on expert availability

- CAI improves both throughput (up to 6.2×) and latency (up to 2.3×) with minimal quality loss

- Implementation requires minimal changes to existing MoE inference systems

- CAI outperforms traditional load balancing methods across different MoE architectures
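The straggler effect and capacity-aware routing can be illustrated with a small sketch. It assumes top-1 routing, a hypothetical per-expert cap (`expert_capacity`, computed from a capacity factor), and a greedy rule that sends overflow tokens to the next-best expert with spare room, dropping them only if every expert is full. The router scores are made-up numbers, and this is an illustration under those assumptions, not the paper's exact algorithm.

```python
import math
from typing import List, Optional

# Hypothetical router scores: 8 tokens x 4 experts. Most tokens prefer expert 0.
SCORES = [
    [0.7, 0.2, 0.1, 0.0],
    [0.6, 0.1, 0.2, 0.1],
    [0.5, 0.3, 0.1, 0.1],
    [0.6, 0.1, 0.1, 0.2],
    [0.4, 0.3, 0.2, 0.1],
    [0.5, 0.1, 0.3, 0.1],
    [0.1, 0.6, 0.2, 0.1],
    [0.05, 0.2, 0.6, 0.15],
]


def expert_capacity(num_tokens: int, num_experts: int, capacity_factor: float = 1.0) -> int:
    """Max tokens any single expert may take (hypothetical capacity rule)."""
    return math.ceil(capacity_factor * num_tokens / num_experts)


def top1_route(scores: List[List[float]]) -> List[int]:
    """Baseline: each token goes to its highest-scoring expert, ignoring load."""
    return [max(range(len(row)), key=row.__getitem__) for row in scores]


def capacity_aware_route(scores: List[List[float]], capacity: int) -> List[Optional[int]]:
    """Try experts in descending score order, skipping any that are full.
    Tokens that find no expert with spare capacity are dropped (None)."""
    num_experts = len(scores[0])
    load = [0] * num_experts
    assignment: List[Optional[int]] = []
    for row in scores:
        chosen: Optional[int] = None
        for e in sorted(range(num_experts), key=lambda i: row[i], reverse=True):
            if load[e] < capacity:
                chosen = e
                load[e] += 1
                break
        assignment.append(chosen)
    return assignment


def max_load(assignment: List[Optional[int]], num_experts: int) -> int:
    """Experts run in parallel, so the busiest one (the straggler) sets layer time."""
    load = [0] * num_experts
    for e in assignment:
        if e is not None:
            load[e] += 1
    return max(load)


cap = expert_capacity(len(SCORES), 4)                  # 2 tokens per expert
print(max_load(top1_route(SCORES), 4))                 # → 6
print(max_load(capacity_aware_route(SCORES, cap), 4))  # → 2
```

In this toy run the busiest expert's load falls from 6 tokens to 2, but the last token lands on its third-choice expert. That tradeoff hints at where a small quality cost could come from when tokens are moved away from their preferred expert.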

Plain English Explanation

Imagine a team of specialists where each person handles different types of questions. This is similar to how Mixture of Experts (MoE) models work: they route different parts of a problem to specialized neural netw...
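The specialist analogy maps onto a gated MoE layer. A router scores every expert for a token, but only the top-k experts actually run, and their outputs are blended by gate weight. The sketch below assumes top-2 gating with softmax weights renormalized over the chosen experts, toy lambda "experts" (hypothetical stand-ins for feed-forward networks), and gate logits supplied directly rather than produced by a learned layer. It shows the general idea, not any specific model from the paper.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over the gate logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    gate_logits: Sequence[float],
    experts: Sequence[Callable[[Vector], Vector]],
    k: int = 2,
) -> Vector:
    """Run only the top-k experts and blend their outputs by renormalized gate weight."""
    probs = softmax(gate_logits)
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    out = [0.0] * len(x)
    for i in top:  # experts outside `top` are never evaluated
        y = experts[i](x)
        w = probs[i] / norm
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


# Hypothetical toy "experts"; real ones are feed-forward networks.
EXPERTS = [
    lambda v: [2 * a for a in v],  # expert 0: double
    lambda v: [-a for a in v],     # expert 1: negate
    lambda v: [a + 1 for a in v],  # expert 2: add one
    lambda v: [0.0 for _ in v],    # expert 3: zero
]

print(moe_forward([1.0, 2.0], [2.0, 0.0, 2.0, -1.0], EXPERTS))  # → [2.0, 3.5]
```

Because each token touches only k experts, compute per token stays small, but it also means a popular expert can receive far more tokens than the others, which is the imbalance behind the straggler effect.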
