This is a Plain English Papers summary of a research paper called "The Remarkable Robustness of LLMs: Stages of Inference?". If you like this kind of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

- Explores the remarkable robustness of large language models (LLMs) and the potential stages involved in their inference process

- Investigates how different layers of LLMs contribute to the model's overall performance and capabilities

- Provides insights into the emergence of high-dimensional abstract representations in language transformers

Plain English Explanation

This paper examines the impressive robustness and capabilities of large language models (LLMs), which are AI systems that can generate human-like text. The researchers explore the different stages or layers that may be involved in the inference process of these models, meaning the steps they take to understand and generate language.

The paper investigates how the various layers or components of an LLM contribute to its overall performance. For example, some layers may be more important than others for certain tasks. The researchers also look at how LLMs can correct speech or language and become more efficient at inference.

Additionally, the paper examines the emergence of high-dimensional abstract representations in language transformers, the architecture that most LLMs are built on. This means the models develop sophisticated ways of representing language that go beyond simple patterns or rules.

Overall, the research aims to provide a deeper understanding of how these powerful AI language models work and the various stages or components involved in their ability to process and generate human-like text.

Technical Explanation

The paper studies the robustness of large language models (LLMs) and asks whether their inference process can be divided into distinct stages, with different layers of the model responsible for different steps between reading the input and producing the output.

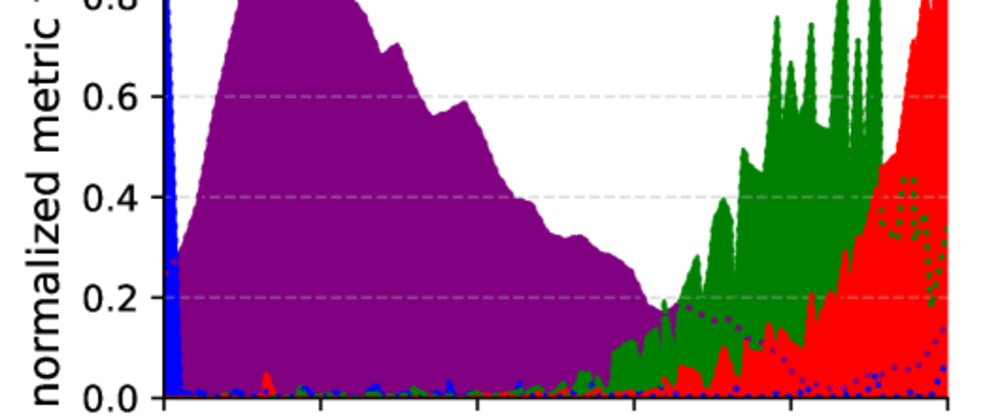

The study examines how the different layers or components of an LLM contribute to its overall performance. For example, some layers may be more important than others for certain tasks. The summary above also notes that the models can correct speech or language and become more efficient at inference.
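One generic way to ask how much each layer contributes is to ablate it: run the model with one layer skipped, or with two adjacent layers swapped, and compare the output with the unmodified run. The sketch below does this on a toy stack of random residual layers in plain Python. Everything in it (the `apply_layer` update rule, the layer count, the cosine-similarity metric, and all function names) is an illustrative assumption, not the paper's models, code, or experimental setup.

```python
import math
import random


def make_layer(dim, rng, scale=0.1):
    """Random dim x dim weight matrix for one toy layer (hypothetical stand-in)."""
    return [[rng.gauss(0.0, scale) for _ in range(dim)] for _ in range(dim)]


def apply_layer(weights, x):
    """Residual update x + tanh(W x): the layer adds to the state rather than replacing it."""
    return [xi + math.tanh(sum(w * xj for w, xj in zip(row, x)))
            for xi, row in zip(x, weights)]


def run(layers, x, order):
    """Apply the layers to x in the given order of layer indices."""
    for i in order:
        x = apply_layer(layers[i], x)
    return x


def skip_order(n, k):
    """Layer order with layer k removed."""
    return [i for i in range(n) if i != k]


def swap_order(n, k):
    """Layer order with layers k and k + 1 exchanged."""
    order = list(range(n))
    order[k], order[k + 1] = order[k + 1], order[k]
    return order


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(p * q for p, q in zip(a, b))
    norm_a = math.sqrt(sum(p * p for p in a))
    norm_b = math.sqrt(sum(q * q for q in b))
    return dot / (norm_a * norm_b)


# Toy setup: sizes and seed are arbitrary choices for illustration.
rng = random.Random(0)
DIM, N_LAYERS = 16, 8
layers = [make_layer(DIM, rng) for _ in range(N_LAYERS)]
x0 = [rng.gauss(0.0, 1.0) for _ in range(DIM)]

# Output of the unmodified stack, used as the reference point.
baseline = run(layers, x0, list(range(N_LAYERS)))

# Similarity of each ablated run to the baseline.
skip_scores = [cosine(baseline, run(layers, x0, skip_order(N_LAYERS, k)))
               for k in range(N_LAYERS)]
swap_scores = [cosine(baseline, run(layers, x0, swap_order(N_LAYERS, k)))
               for k in range(N_LAYERS - 1)]

for k, score in enumerate(skip_scores):
    print(f"skip layer {k}: cosine to baseline = {score:.3f}")
for k, score in enumerate(swap_scores):
    print(f"swap layers {k} and {k + 1}: cosine to baseline = {score:.3f}")
```

A score near 1 means the ablation barely changed the output. On a trained model the comparison would more likely use task accuracy or the change in the predicted token distribution; the random weights here say nothing about how a real LLM behaves.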

Additionally, the paper investigates the emergence of high-dimensional abstract representations in language transformers, the architecture that most LLMs are built on. This means the models develop sophisticated ways of representing language that go beyond simple patterns or rules.

Critical Analysis

The paper provides valuable insights into the inner workings and capabilities of large language models, but it also acknowledges certain caveats and areas for further research. For example, the researchers note that their analysis of model layers and inference stages is based on specific experimental setups and architectures, and the findings may not universally apply to all LLMs.

Additionally, while the paper explores the emergence of high-dimensional abstract representations in language transformers, it does not delve deeply into the specific mechanisms or implications of this phenomenon. Further research could investigate the nature and significance of these abstract representations and how they contribute to the overall capabilities of LLMs.

Overall, the paper presents a thoughtful and nuanced examination of LLM robustness and inference stages, but there is still much to be explored in this rapidly evolving field of AI.

Conclusion

This paper provides valuable insights into the remarkable robustness and capabilities of large language models (LLMs). By investigating the potential stages or layers involved in the inference process, the researchers offer a deeper understanding of how these powerful AI systems process and generate human-like text.

The findings suggest that the different components of an LLM play varying roles in its overall performance, and the models can demonstrate sophisticated abilities, such as correcting speech or language and becoming more efficient at inference. Additionally, the paper examines the emergence of high-dimensional abstract representations in language transformers, indicating the models' capacity for complex and nuanced language understanding.

These insights have implications for the continued development and application of LLMs in a wide range of domains, from natural language processing to creative writing and beyond. As the field of AI continues to advance, further research in this area could reveal more about the inner workings and potential of these language models.
