How do you bring an engineer and a GUI designer together? How do you make them work with the same entities?

How do you keep mockups in a version control system?

If you are interested in these questions, welcome aboard!

Before we start

This post is a sequel to my previous post "No more HTML coding, please. Let's make layout to HTML real" and the result of my research into a topic that interests me.

This is my second publication in English, so please be lenient. Suggestions for fixes are welcome.

In the previous post, I briefly described the current problems of automating HTML code generation and shared my research results. I stopped at the control problem.

In this post, I will explore this concept in more detail and share the results of my research.

Want to touch something? Here is the proof of concept.

The Control Problem

I defined two directions of control:

- The ability to affect the process of HTML code generation.

- Direct editing of the result, which will be reflected in the next generation.

As part of my research, I looked at the first direction and came to the conclusion that HTML generation should be built on a declarative language of rules. This approach gives a high level of control over the resulting code and lets developers create their own rule sets.
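To make the idea of declarative rules a bit more tangible, here is a minimal TypeScript sketch. It is only an illustration under my own assumptions: the `LayoutNode` shape, the `Rule` type, and the `matches` and `generate` functions are hypothetical names invented for this example and are not part of the proof of concept.

```typescript
// Hypothetical node exported by a design tool (assumed shape).
interface LayoutNode {
  kind: string; // e.g. "group" or "text"
  layer: string; // layer name from the design tool
  text: string;
  children: LayoutNode[];
}

// A rule is plain data: "when a node matches these fields, emit this tag".
interface Rule {
  match: { kind?: string; layerPrefix?: string };
  tag: string;
}

function matches(node: LayoutNode, rule: Rule): boolean {
  const m = rule.match;
  if (m.kind !== undefined && m.kind !== node.kind) return false;
  if (m.layerPrefix !== undefined && node.layer.indexOf(m.layerPrefix) !== 0) return false;
  return true;
}

// Rules are checked in order, so the first matching rule wins.
const defaultRules: Rule[] = [
  { match: { kind: "text", layerPrefix: "title" }, tag: "h1" },
  { match: { kind: "text" }, tag: "p" },
  { match: { kind: "group" }, tag: "div" },
];

// Sketch only: no escaping of text or attribute values.
function generate(node: LayoutNode, rules: Rule[]): string {
  let tag = "div"; // fallback when no rule matches
  for (const rule of rules) {
    if (matches(node, rule)) {
      tag = rule.tag;
      break;
    }
  }
  const inner = node.children.map((child) => generate(child, rules)).join("");
  return "<" + tag + ' class="' + node.layer + '">' + node.text + inner + "</" + tag + ">";
}

const card: LayoutNode = {
  kind: "group",
  layer: "card",
  text: "",
  children: [
    { kind: "text", layer: "title", text: "Hello", children: [] },
    { kind: "text", layer: "body", text: "World", children: [] },
  ],
};

// Developers can put their own rules in front of the defaults.
const customRules: Rule[] = [{ match: { layerPrefix: "card" }, tag: "section" }, ...defaultRules];

const cardHtml = generate(card, defaultRules);
const customCardHtml = generate(card, customRules);
```

Because the rules are data rather than code, a custom rule set is just another array, which is the kind of control over the output that this direction is about.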

But now I would like to look at the second direction in greater detail.

Many graphical web editors offer HTML code export but nothing more. This HTML code is derived, and any changes to it will be lost after the next generation.

Thus, building interfaces and applications with this approach is very difficult, not to mention the quality of the generated code. It is quite enough for the tasks these services solve, such as landing page generation or prototyping, but for applications, the best they can offer is a quick start for something more complex.

The solution

The main idea is that if layouts are made to create an HTML document, then the entire layout can be described in HTML and CSS.

Based on this, we can use the HTML document as a source of truth, without the need for a third format for storing layouts.

But if the layout is described in an HTML document, what prevents the developer from opening the code and changing it in any way they want? Nothing. There are reservations, but it is possible.

This way we have a 2-way binding between the design tool and the source code.

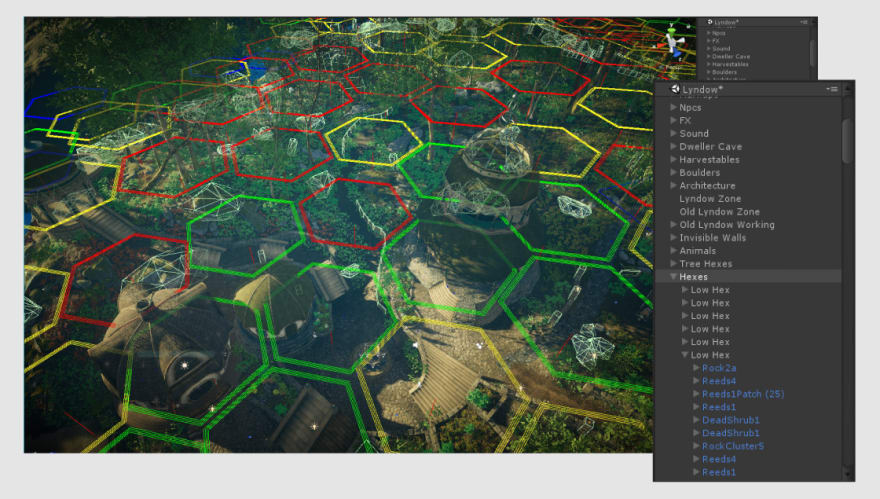

Perhaps someone will say that this sounds like a utopia. "Unity 3D", I will say back.

Unity, like other game engines, has implemented a similar concept in its tools for a long time.

In my opinion, the only obstacle to implementing such an approach in the web industry and its derivatives is the high requirements placed on HTML code. I looked at this issue in more detail in the previous article.

One more thing to add, continuing the comparison with game engines: in addition to the hierarchy tree of objects that the specialist works with, the engine itself builds many other trees that nobody needs to know about. That is not the case on the web.

OK, I want to have something like Unreal Engine, but for the web. Where should I start?

Right now I have:

- A simple drag-and-drop editor;

- A concept for an auto-coding module;

- Some HTML code that I want to edit.

The first question is how to put it all together?

The Engine

Any engine consists of modules, so it's worth starting with this.

It's hard not to start imagining a system with modules for API mocking, testing, storage management, animation creation, optimization, working with dependencies, documenting ...

But it's worth cooling down and returning to the current problems.

The Rendering Module

Perhaps the question is obvious, but in general, what are we drawing and where? We have HTML code that we need to display with something.

The logical choice here is the most popular rendering engine, Blink (a fork of WebKit); we will use it as the rendering module.

The Read-write Module

With the reader, things are not so obvious. As long as we are talking about a single entry point (all the code is in index.html), there are no problems. But a real application can have an arbitrarily complex architecture and can be stored in any format.

I have identified 3 options for how to deal with it:

- Working with the final code, with intermediate rendering;

- Maximum coverage of all popular technology stacks;

- Strict standardization.

There is no best option; each has its drawbacks.

The third seems the most reliable, and the second the most convenient to work with. I suppose that a mixed approach would be optimal: gradually add support for stacks, and where this is impossible, fall back to the third option.

The first option seems very promising, but it comes with a number of difficult issues, and solving them may take longer than implementing the second option.

Among the obvious problems:

- Comparing the intermediate code to the original.

- Converting the intermediate code to the original format.

The problems are interesting and require research.

The Auto HTML Code Module

Probably the easiest part.

The Visual Interaction Module

And what exactly can we interact with?

This is what the tree looks like on one of the landing pages, describing 2 blocks lying side by side. It may be justified there, but it is completely inappropriate in an interface editor.

Looking at it, I recall Adobe Illustrator, where you need to click on the same place 2 * N times to get to the desired element.

But if we look closely, the only editable elements there are the heading and the paragraph. The rest describe the arrangement of the elements.

This illustrates the following concept well: not all HTML code is the same.

From this we formulate a rule. HTML code is divided into:

- Significant;

- Insignificant.

A user interacts with significant items.

The significant code describes appearance, meta information, and structure: grouping, positioning, etc.
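The split can be sketched in a few lines of TypeScript. This is a simplified illustration, not the proof-of-concept code: the `HtmlNode` type and the `el`, `isSignificant`, `significantTree`, `label`, and `outline` helpers are hypothetical, and the "has a class or identifier" criterion is borrowed from the restrictions listed later in this post.

```typescript
// Simplified stand-in for a DOM element; empty strings mean "not set".
interface HtmlNode {
  tag: string;
  id: string;
  className: string;
  children: HtmlNode[];
}

function el(tag: string, id: string, className: string, children: HtmlNode[]): HtmlNode {
  return { tag: tag, id: id, className: className, children: children };
}

// Assumed criterion: an element is significant if it has a class or an identifier.
function isSignificant(node: HtmlNode): boolean {
  return node.id !== "" || node.className !== "";
}

// Keeps significant nodes and lifts their significant descendants
// through any insignificant wrappers in between.
function significantTree(node: HtmlNode): HtmlNode[] {
  let kept: HtmlNode[] = [];
  for (const child of node.children) {
    kept = kept.concat(significantTree(child));
  }
  if (isSignificant(node)) {
    return [el(node.tag, node.id, node.className, kept)];
  }
  return kept;
}

function label(node: HtmlNode): string {
  const idPart = node.id !== "" ? "#" + node.id : "";
  const classPart = node.className !== "" ? "." + node.className : "";
  return node.tag + idPart + classPart;
}

// Flattens a tree into indented lines, the way an editor's layer panel might show it.
function outline(nodes: HtmlNode[], indent: string): string[] {
  let lines: string[] = [];
  for (const node of nodes) {
    lines.push(indent + label(node));
    lines = lines.concat(outline(node.children, indent + "  "));
  }
  return lines;
}

// Seven nested elements, only three of which matter to the designer.
const page: HtmlNode = el("div", "", "", [
  el("div", "", "", [
    el("div", "", "block", [
      el("div", "", "", [el("h2", "", "title", [])]),
      el("div", "", "", [el("p", "", "text", [])]),
    ]),
  ]),
]);

const visible = outline(significantTree(page), "");
// visible is ["div.block", "  h2.title", "  p.text"]
```

The wrappers stay in the code, so the layout is not affected; they are simply not offered to the user as something to click on.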

The editor itself must meet all the expectations of the designer.

The Binding

So what do we have, and what are we binding?

- Some code as a source of truth;

- A DOM tree;

- A visualization.

When we change the code, the DOM changes. That leads to re-rendering.

When we change the visualization, there are two options:

- Modify the DOM and store the derived result in the code;

- Change the code, which in turn changes the DOM.

Either option will redraw the display.
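Here is a minimal TypeScript sketch of this flow, using the second option (a visual edit rewrites the code, and the DOM follows). Everything in it is a toy assumption: the "code" is a string in a very restricted format, the "DOM" is a plain map from element id to its left offset, and `parse`, `TwoWayBinding`, and `moveElement` are hypothetical names.

```typescript
// Toy stand-in for the DOM: element id -> left offset in pixels.
type Dom = Record<string, number>;

// Understands only elements written as: <div id="box" style="left:10px"></div>
function parse(code: string): Dom {
  const dom: Dom = {};
  const re = /id="([^"]+)" style="left:(-?\d+)px"/g;
  let m: RegExpExecArray | null = re.exec(code);
  while (m !== null) {
    dom[m[1]] = parseInt(m[2], 10);
    m = re.exec(code);
  }
  return dom;
}

class TwoWayBinding {
  private code = "";
  private dom: Dom = {};
  public renders = 0;

  // Direction 1: the code changes, the DOM is rebuilt, the display is redrawn.
  setCode(code: string): void {
    this.code = code;
    this.dom = parse(code);
    this.render();
  }

  // Direction 2: a visual edit rewrites the code and then takes the same path.
  // Assumes ids contain only letters, digits, and dashes (no regex escaping here).
  moveElement(id: string, left: number): void {
    const pattern = new RegExp('(id="' + id + '" style="left:)-?\\d+(px")');
    const updated = this.code.replace(
      pattern,
      (_all: string, head: string, tail: string) => head + left + tail
    );
    this.setCode(updated);
  }

  getCode(): string {
    return this.code;
  }

  getLeft(id: string): number | undefined {
    return this.dom[id];
  }

  // A real editor would repaint here; the sketch only counts the calls.
  private render(): void {
    this.renders += 1;
  }
}

const binding = new TwoWayBinding();
binding.setCode('<div id="box" style="left:10px"></div>'); // developer edits the code
binding.moveElement("box", 42); // designer drags the element
```

Routing the visual edit through `setCode` keeps a single path from code to DOM to display, so the code never drifts from what is shown.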

The state

But what is the lifecycle of the DOM? In addition to the editor, application logic can also change it.

This means that we need to determine the current state of the code, select it from the list of all states, and apply the changes exactly where they are needed.

The task is further complicated by the fact that a new state can completely change the interface, and by the almost endless combinations of state changes. It seems to be completely solvable only with semantic understanding comparable to a human's (and humans do not always cope).

All of the above sounds like we should wait for strong AI and give up on this for now... or introduce restrictions.

Let's look at the comparison with game engines again. There are always at least 2 modes:

- Edit;

- Preview.

In edit mode, it is only possible to interact with blocks (let's call them components) in their default state.

I would divide the remaining states into 2 types:

- Manual: those that the editor can understand and give control over;

- Automatic: programmed states that the editor cannot understand.

A great example of manual states is Pagedraw, with its state editor and transitions between states.
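This classification can be written down as a small TypeScript sketch. The type names and the `canEditVisually` function are hypothetical, and the rule it encodes (default and manual states are editable in edit mode, automatic ones are not) is my reading of the division above rather than settled behavior.

```typescript
type EditorMode = "edit" | "preview";
type StateKind = "default" | "manual" | "automatic";

interface ComponentState {
  label: string;
  kind: StateKind;
}

// Assumption: preview mode runs the application logic, so nothing is editable there.
// In edit mode, only states the editor understands are open for visual editing.
function canEditVisually(mode: EditorMode, state: ComponentState): boolean {
  if (mode !== "edit") {
    return false;
  }
  return state.kind === "default" || state.kind === "manual";
}

const hover: ComponentState = { label: "hover", kind: "manual" };
const loadedFromApi: ComponentState = { label: "loaded", kind: "automatic" };

const hoverEditable = canEditVisually("edit", hover);
const loadedEditable = canEditVisually("edit", loadedFromApi);
```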

Still, this topic is one of the most difficult and requires much deeper and more thorough research.

Summary

The above leads to the following concept:

In edit mode, we can interact with blocks that are significant. These blocks can form components, which in turn can have simple or complex states.

Editing states is a separate task and can be done both visually and through code. Components form a library. All this will impose certain rules and restrictions.

Proof of concept

To prove the concept, it is necessary to implement the edit mode, in which changes in the visual editor affect the code immediately, and changes in the code are also immediately visible in the visual editor.

The code acts as a source of truth and stores representation data, meta information, and structure (child-container).

Subsequent opening of the code restores the state of the visual editor.
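The round trip between editor state and code can be sketched like this in TypeScript. It is a deliberately restricted illustration and not the proof-of-concept implementation: the `EditorNode` type and the `toHtml` and `fromHtml` functions are hypothetical, only `div` elements with an id and inline `left`/`top` are supported, and the parser reads only markup produced by `toHtml` itself.

```typescript
// Editor-side model: position is the representation data, children are the structure.
interface EditorNode {
  id: string;
  left: number;
  top: number;
  children: EditorNode[];
}

// Editor state -> code. Nesting in the markup stores the child-container relation.
function toHtml(node: EditorNode): string {
  const inner = node.children.map((child) => toHtml(child)).join("");
  return (
    '<div id="' + node.id + '" style="left:' + node.left + "px;top:" + node.top + 'px">' +
    inner +
    "</div>"
  );
}

// Code -> editor state, using a stack to rebuild the nesting.
function fromHtml(html: string): EditorNode {
  const token = /<div id="([^"]+)" style="left:(-?\d+)px;top:(-?\d+)px">|<\/div>/g;
  const root: EditorNode = { id: "", left: 0, top: 0, children: [] }; // virtual root
  const stack: EditorNode[] = [root];
  let m: RegExpExecArray | null = token.exec(html);
  while (m !== null) {
    if (m[0] === "</div>") {
      if (stack.length > 1) {
        stack.pop();
      }
    } else {
      const node: EditorNode = {
        id: m[1],
        left: parseInt(m[2], 10),
        top: parseInt(m[3], 10),
        children: [],
      };
      stack[stack.length - 1].children.push(node);
      stack.push(node);
    }
    m = token.exec(html);
  }
  if (root.children.length !== 1) {
    throw new Error("expected exactly one top-level element");
  }
  return root.children[0];
}

const scene: EditorNode = {
  id: "container",
  left: 0,
  top: 0,
  children: [
    { id: "title", left: 16, top: 8, children: [] },
    { id: "text", left: 16, top: 48, children: [] },
  ],
};

const sceneHtml = toHtml(scene); // what gets saved and version-controlled
const restored = fromHtml(sceneHtml); // what the editor sees after reopening the file
```

Since the code is the only thing stored, it can be committed to version control like any other source file, and reopening it gives the editor everything it needs.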

Armed with this knowledge, let's implement it in code.

Restrictions

- Significant elements are those with a class or identifier;

- No side effects are applied to elements;

- An identifier must be unique; reusing one will lead to unexpected consequences;

- Most edge cases are not handled;

- Errors are expected;

- Components and states are not implemented;

- A container with children can be moved by "grabbing" its empty space.

Conclusion

While 2-way binding is challenging and requires extensive research, it is possible. But it also implies a number of restrictions.

Each tech stack will need its own interpreter, which is impossible without interest from that stack's community.

What's next?

I have another month of free time, so enough playing with "cubes"; it's time to implement a clean model of the editor. To begin with, I will use React as the target stack, or rather JSX + styled-components, as the most widely used and studied.

This process can take quite a long time. Realizing this, I brushed the dust off my Twitter account. I invite everyone interested to follow the process.

There I will post my thoughts about the problem and the current state of the work, and, if the stars align, about the result: a free editor for everyone who wants to "move this web".

Of course, I will be very glad to see constructive criticism and discussion.

Peace to everyone!

Top comments (1)

I think the biggest problem with web design is that there are 2 stacks.

So you are basically creating the product twice: once in the design stack and a second time in the web stack.

This is why I think Desech Studio can solve these issues. You create a minimal design in Figma, Sketch, or Adobe XD, and then you import it into Desech Studio. It then syncs with your code, and all the current or future design work you do happens in Desech Studio.

This will keep the designer in your web stack, instead of giving them a personal playground in the design stack that is completely divorced from the actual end product.