Introduction

When someone wants to master computer programming, the best way to start is to learn the complexity of the system. Before you start with coding common questions you should ask yourself are:

- Why should I write the program in this way only?

- What will be the benefit of this program?

- How many resources my program will consume?

- How fast it will execute?

These questions will help you write efficient code.

Computational complexity is all about the writing optimized, efficient and blazing fast programs/code.

In this blog, you will be going through a few amazing theories of the computer system with examples.

Before learning complexity

Let's learn a bit about an algorithm:

A sequence of logical computation is called as the algorithm of the program. These algorithms are analyzed by calculating their time or space complexity. It is proved that your algorithm will always execute in worst-case complexity or an average-case complexity.

- Worst-case complexity: Maximum resource utilized by your algorithm to execute with respect to the number of inputs.

- Average-case complexity: Average resource utilized by your algorithm to execute with respect to the number of inputs.

I hope now you are getting a bit of an idea about the complexity theory. Let's dive deep to clear all our doubts...

Topics:

- Resources.

- Asymptotic complexity.

- Computational models.

1. Resources

What are the resources as per you?

You might be thinking Human, keyboard, electricity, CPU, monitor & all the hardware stuff that you need while using your system then that is wrong❌.

The actual resource that I will be talking about is

- Time.

- Space.

Time complexity

The time complexity of an algorithm is measured irrespective of hardware and software associated with the system. The complexity considers the maximum time required to run an algorithm which is measured in asymptotic notation.

Space complexity

The amount of memory your algorithm requires to run is called space complexity.

Other complexities are arithmetic complexities which depend on the arithmetic computation that has been used in the algorithm and totally depends upon the size of input n.

2. Asymptotic Complexity

It is difficult to describe the compute of an algorithm precisely which falls between the worst-case and the average-case complexity. So in order to calculate the near to accurate complexity, it is always better to keep the input size n as infinity.

These asymptotic complexities are the boundary limits of an algorithm that includes upper bound(big-oh notation), lower bound(big-omega) and the tight bound(big-theta).

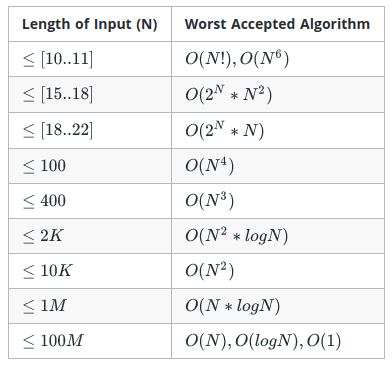

If you are pretty confident that your algorithm is good and won't hamper your system computational complexity below is the table of complexities you can assume your algorithm might take.

For analyzing any algorithm, it is recommended to consider the big-oh notation because it helps us to get the idea of the upper limit of the execution time of the algorithm.

I would recommend a 5 minutes read on hackerearth before proceeding further.

3. Computation Models

This is one of the vast topics which can be totally debatable. The model of computation describes how an output of the mathematical expression is computed when input is given. Similarly, the algorithms rely on these mathematical operations. So it is very important to choose the correct model for computation.

-

Deterministic models: These models are the exact opposite of random. It helps us to predict the exact event that will occur in the future without any involvement of randomness. So if you have some data you can predict the exact outcome with 100% certainty. for example :

- Rolling a fair die.

- Calculating Kelvin using Celsius.

Non-Deterministic models: These models are the opposite of deterministic where you will start with some sort of straightforward events later randomness will be getting added to it. Here you don't have the idea of what will be the future event. Since every time you run you will get a different output. In order to differentiate you can consider the below image.

- Quantum computing models: These models follow the rules of quantum mechanics(the fundamental theory of physics describing the properties of nature.) The very famous example of quantum computing is AI(Artificial Intelligent). you can find more examples here

Concluding...

Any algorithm without proper computational logic is just a waste of resources. you can save those resources or use them in a better way by optimizing, building concise code better.

I hope you understood the very basic fundamentals of Complexity. Do share & comment! 🤗

Top comments (0)