One of the fun things about working with data over the years is learning how to use the tools of the day—but also learning to fall back on the tools that are always there for you - and one of those is bash and its wonderful library of shell tools.

I’ve been playing around with a new data source recently, and needed to understand more about its structure. Within a single stream there were multiple message types.

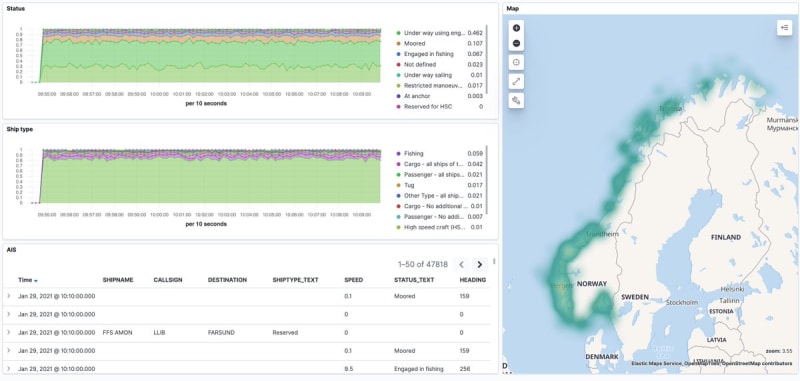

Poking around a fun source for streaming demo with @apachekafka, @ksqldb, and @elastic - AIS maritime data :)10:18 AM - 29 Jan 2021

Poking around a fun source for streaming demo with @apachekafka, @ksqldb, and @elastic - AIS maritime data :)10:18 AM - 29 Jan 2021

Each message has its own schema, and a common type field. I wanted to know what the most common message types were. Courtesy of StackOverflow this was pretty easy.

My data happened to be in a Kafka topic, but because of the beauty of unix pipelines the source could be anywhere that can emit to stdout. Here I used kafkacat to take a sample of the most recent ten thousand messages on the topic:

$ kafkacat -b localhost:9092 -t ais -C -c 10000 -o-10000 | \

jq '.type' | \

sort | \

uniq -c | \

awk '{ print $2, $1 }' | \

sort -n

1 6162

3 1565

4 393

5 1643

8 61

9 1

12 1

18 165

21 3

27 6

kafkacatspecifies the broker details (-b), source topic (-t), act as a consumer (-C) and then how many messages to consume (-c 10000) and from which offset (-o-10000).jqextracts just the value of thetypefieldsortorders all of thetypevalues into order (a pre-requisite foruniq)uniq -coutputs the number of occurrences of each line-

The remaining commands are optional

-

awkchanges round the columns from<count>,<item>to<item>,<count> - The final

sortarranges the list in numeric order

-

Top comments (0)