After over a year of development, SD2, my web server, is done.

When I initially set out to build a website, I wanted it to rank #1 when you searched my name on Google. I remember seeing the websites of other students like Kevin Frans (MIT/OpenAI) and Gautam Mittal (Berkeley/Stripe) ranked highly on Google, and I took inspiration from that to do the same. I also wanted to automate a lot of mundane tasks so that this web server is highly scalable in the event I get a lot of traffic, highly available so no user hits downtime while accessing the many parts of my server, and portable in case I need to move to a different cloud service provider.

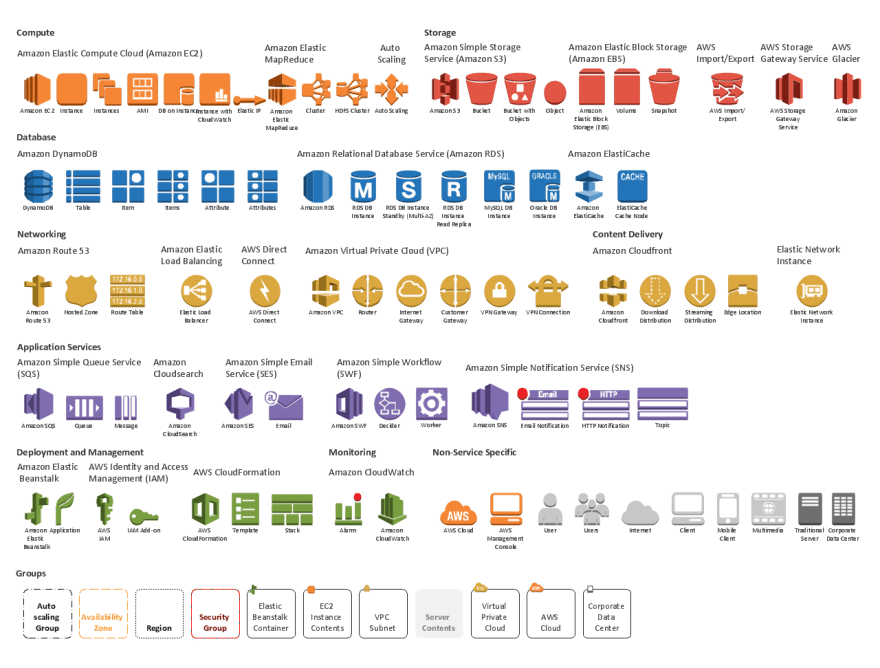

Switching between AWS, GCP, and DigitalOcean

SD2 is the new web server I've been building for nearly a year now. I started on DigitalOcean, moved to Google Cloud for a bit, then to AWS for a longer bit, and finally went back to GCP, so it's been a long ride. DigitalOcean was too expensive, and my Google Cloud discounts expired (I later renewed them, which is why I went back).

I initially started with DigitalOcean since they had relatively low prices and a hackathon I attended offered prizes for using it, so I decided to give it a try. After nearly a year, I decided DigitalOcean was a little bit like training wheels and that I needed more functionality, among other things, so I moved over to GCP.

On GCP, I was able to configure a nice setup for my site, but the problem was reverse proxying my Blog (which runs on Ghost) and other static sites. Because of this hassle, I temporarily weaved in and out of using simple GitHub Pages (although in hindsight, this was mostly my fault).

After running some RL models and subsequently burning through hundreds of dollars an hour, I quickly ran out of credits and was nearly forced to move over to AWS.

After finding out about AWS' free tier, I decided to give EC2 a chance. A couple of days of use got me comfortable with it, although configuring the launch wizards, as opposed to VPC on GCP, took some getting used to. Still, I was able to run basic NGINX instances on it.

However, AWS has a somewhat weird structure for CPU usage, described here: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/burstable-credits-baseline-concepts.html#cpu-credits. Essentially, after several days of running my entire stack, I was throttled all the way down to nearly 5% CPU utilization, which slowed all my sites to a standstill. It got so bad that every one of my sites was unusable.
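To make the credit mechanics concrete, here is a small back-of-the-envelope calculation. One CPU credit buys one vCPU at 100% for one minute. The figures below are the ones for a t2.micro, which is an assumption since the exact instance type isn't named here; check the linked AWS page for the numbers that apply to yours.

```shell
# Assumed figures for a t2.micro; other burstable types differ.
credits_per_hour=6   # credits earned every hour
max_balance=144      # most credits the instance can bank
vcpus=1

# One credit = one vCPU at 100% for one minute, so the CPU level the
# instance can sustain forever (its baseline) is:
baseline_pct=$(( credits_per_hour * 100 / (60 * vcpus) ))

# At a steady 100% CPU the instance spends 60 credits per vCPU per hour
# while still earning credits_per_hour back.
net_burn_per_hour=$(( 60 * vcpus - credits_per_hour ))
minutes_until_throttled=$(( max_balance * 60 / net_burn_per_hour ))

echo "baseline: ${baseline_pct}% of a vCPU"
echo "a full balance lasts about ${minutes_until_throttled} minutes at 100% CPU"
```

Once the balance hits zero, the instance is held at its baseline, which is why a stack that regularly needs more than that grinds to a halt.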

This prompted me to get some more credits from GCP and continue there for the foreseeable future.

Note: When developing this server, I used AWS.

What I needed from a web server

Before going further, I had to outline what I wanted to do:

- An NGINX server to reverse proxy websites I build

- High availability for my Blog (running on Ghost)

- Self-hosted analytics engine

- Self-hosted storage service (both uploading and downloading)

- Self-hosted email server (SMTP)

- Self-hosted URL shortener

And all of this had to be built using Docker, so it could be easily shipped to another server if the one I'm using goes down or something else happens (a lesson from moving from DO to GCP to AWS and back to GCP, and from spinning servers down and up from instance to instance in between).

I was still on AWS while developing this server. Here's what I did:

I initially created an AWS AMI with Docker baked in (because I couldn't find one readily available) and started playing with my newfound Docker knowledge (from using it in the workplace).

With this in place I started spinning up EC2 instances left and right, trying out Ghost images, setting up ECS, finding out what Fargate is, and trying to see how I could scale.

After several weeks I realized I didn't need ECS or Fargate or any of the "fancy" features AWS was offering, since I wasn't building a SaaS startup or anything, just a simple personal web server that fit my needs.

So I continued playing around with Docker and EC2.

But as I was setting up all these Docker containers, I realized there had to be some way of composing all of them.

That's when I found Docker Compose. It made it a lot easier to spin up new containers when I needed to. And scalability? It had it, without even disrupting existing containers.

Running a simple command like

docker-compose up --scale ghost=3 -d

seamlessly scales the Ghost service up to three containers.
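For `--scale` to work, the service can't pin a fixed host port or a `container_name`, since every replica would fight over them. Below is a minimal sketch of a service that scales cleanly. The `ghost:alpine` image tag and the file layout are assumptions for illustration, not the compose file from this setup.

```shell
# Write an illustrative compose file (assumed image tag and layout).
cat > docker-compose.yml <<'EOF'
version: "3"
services:
  ghost:
    image: ghost:alpine
    # Only the container port is listed, so Docker picks a free host port
    # for each replica. A fixed mapping such as "80:2368" would collide
    # as soon as a second replica starts.
    ports:
      - "2368"
EOF

# With Docker running, scale up and look up where replica 2 ended up:
#   docker-compose up --scale ghost=3 -d
#   docker-compose port --index=2 ghost 2368
```

Each replica lands on a different, randomly chosen host port, which is where the port-management question comes from.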

But how are we going to:

- Manage all these ports?

- Reverse proxy everything without much hassle?

Initially I heard that nginx-proxy by Jason Wilder (Azure) was a great option.

After a little digging, it definitely sounded like a nice option. But after using it for a little while, I realized it worked by generating NGINX configuration files, which made it hard to configure, especially with Docker Compose.

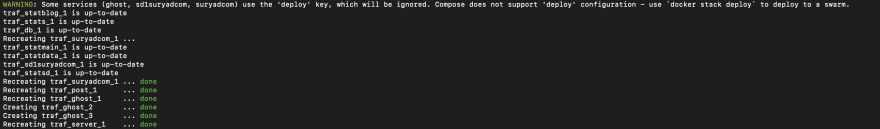

That's when I found Traefik, a cloud native edge router.

This is how they describe it in their README:

Traefik (pronounced traffic) is a modern HTTP reverse proxy and load balancer that makes deploying microservices easy. Traefik integrates with your existing infrastructure components (Docker, Swarm mode, Kubernetes, Marathon, Consul, Etcd, Rancher, Amazon ECS, ...) and configures itself automatically and dynamically. Pointing Traefik at your orchestrator should be the only configuration step you need.

So it was a batteries-included reverse proxy engine that fully supported Docker Compose, fitting my needs exactly.

Simply put, it takes whatever port the container exposes and proxies it to whatever domain or subdomain is needed.

This was crucial to me, since managing either a bunch of services running on the machine itself or a bunch of Docker containers spun up from a Docker Compose file was going to be a mess to handle.
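As a rough illustration of that routing, Traefik 1.x reads labels on each container to learn which hostname and port to forward to. The sketch below assumes a hypothetical `blog.example.com` hostname, the `ghost:alpine` image, and an external Docker network named `proxy` (the name used in the `docker run` command near the end of this post). It is not the compose file from this setup.

```shell
# Illustrative compose file; hostname, image tag, and layout are assumptions.
cat > docker-compose.yml <<'EOF'
version: "3"
services:
  ghost:
    image: ghost:alpine
    networks:
      - proxy
    labels:
      # Opt this container in and tell Traefik which network to reach it on.
      - "traefik.enable=true"
      - "traefik.docker.network=proxy"
      # Requests for this hostname are forwarded to the container...
      - "traefik.frontend.rule=Host:blog.example.com"
      # ...on the port Ghost listens on inside the container.
      - "traefik.port=2368"
networks:
  proxy:
    external: true
EOF

# With Docker running and the "proxy" network created:
#   docker-compose up -d
```

Note that no `ports:` entry is needed. Traefik talks to the container over the shared Docker network, so nothing but Traefik itself has to be published on the host.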

Even better, since I'm running some databases and other sensitive services, I could put them on an internal subnet so they are never public facing, and Traefik inadvertently helped with that.

With Traefik and the configuration of my Docker Compose file, I was able to use essentially every Docker image like an app, easily downloading, updating, and uninstalling it without disturbing any other services.

I could install Netdata (which gives statistics on how your site is doing) within minutes, and even set up my own Overleaf/ShareLaTeX directly on my website, with just some small configuration changes in Traefik.

Traditionally I'd have to spend hours downloading, installing, and maintaining Redis, Mongo, MySQL, and other databases on top of downloading, installing, and maintaining the services themselves. If anything went wrong, I'd have to completely restart. On top of that, the databases would be public facing (last time FB had a MongoDB instance public facing, millions of usernames and passwords were leaked), and that's the last thing I want.

On the other hand, with Traefik and Docker Compose, I can easily run anything and everything I want within minutes with Docker and route every service to its intended destination with Traefik.

As I said previously, given Docker's "portability" of shipping containers around, I could even set everything up on my old desktop and have it work seamlessly (using Cloudflare Argo).

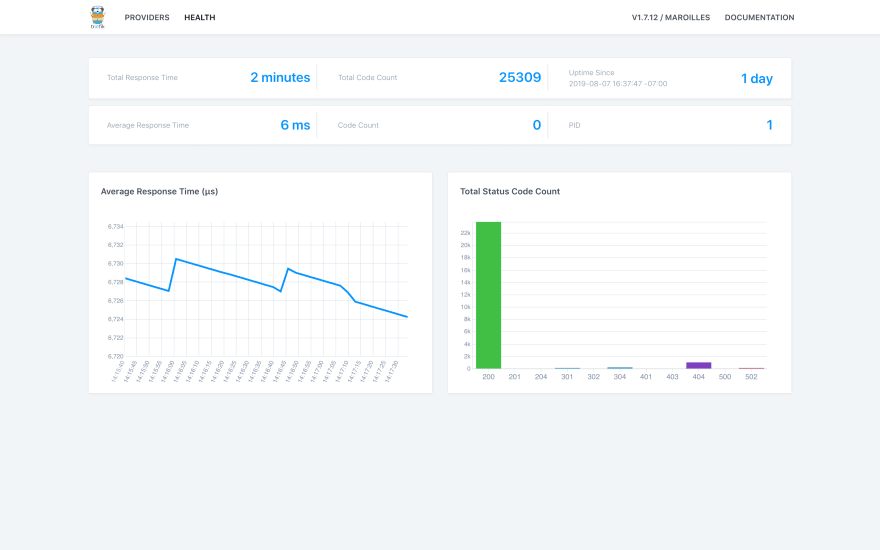

Traefik even has a GUI! Here's what monitor.suryad.com looks like:

So what am I running?

With the power of Traefik and Docker Compose I'm running the following:

- This Blog (subscribe if you haven't yet! sdan.xyz/subscribe)

- suryad.com, sd2.suryad.com (static sites)

- ShareLaTeX (I run my own LaTeX server for fun)

- Mongo, Redis, Postgres, MySQL (although I'm probably going to drop MySQL for SQLite soon)

- URL Shortener (sdan.xyz)

- A commenting server (for you to comment on my posts; I don't like Disqus making you log in every time)

- Netdata to check whether everything is running, whether swap space is being used, and whether the CPU is being over-utilized

So have I accomplished what I set out to do?

Somewhat.

I'm still working on an analytics engine. Both Fathom and Ackee (open source analytics engines) gave me mixed results, but they pointed me toward self-hosting (I don't trust Google Analytics to give me correct results since so many people have adblock on).

As for a "Google Drive" for myself, it's at sdan.cc (where all the images you're seeing are from), which I host from my Fastmail account (it lets me upload, download, and serve files with ease).

As for email: I need a reliable email service. Although I trust myself, I trust Fastmail to do it for me with better features. I've come to love the features they provide, and so far I've just stuck with them.

At the end of the day I'm more than excited and happy with what I've built with the knowledge I've accrued from my internship at Renovo and from various other sources.

So what can I as a reader use from your "plethora" of services?

Not much, unfortunately. This whole setup was more of a feat to show how powerful, simple, and easy self-hosting can be. Although one day I'd like people to use my URL shortener or LaTeX server, at this point my low-spec GCP instance probably isn't good enough for multiple people to use.

However,

You can build everything listed here on your own.

Simply install https://github.com/dantuluri/setup (which coats your server with some butter to start cooking up the main part of the operation).

Then download https://github.com/dantuluri/sd2, run docker-compose on its compose file, and start Traefik with this line:

sudo docker run -d -v /var/run/docker.sock:/var/run/docker.sock -v $PWD/traefik.toml:/traefik.toml -p 80:80 -l traefik.frontend.rule=Host:subdomain.yourdomain.com -l traefik.port=8080 --network proxy --name traefik traefik:1.7.12-alpine --docker
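That command mounts a `traefik.toml` from the current directory and attaches Traefik to a Docker network named `proxy`, so both need to exist before it runs. The sketch below shows what a minimal Traefik 1.7 configuration might look like. It is an assumption for illustration, and the `traefik.toml` in the sd2 repository may well differ.

```shell
# Illustrative traefik.toml for Traefik 1.7 (assumed contents).
cat > traefik.toml <<'EOF'
defaultEntryPoints = ["http"]

[entryPoints]
  [entryPoints.http]
  address = ":80"

# Enables the dashboard and API, served on port 8080 inside the container.
# The traefik.port=8080 label in the run command routes a subdomain to it.
[api]

# Watch the Docker socket and create routes from container labels.
[docker]
  endpoint = "unix:///var/run/docker.sock"
  watch = true
EOF

# The user-defined network has to be created once, before starting Traefik:
#   docker network create proxy
```

With this in place, any container on the `proxy` network that carries a `traefik.frontend.rule` label gets picked up automatically.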

Conclusion

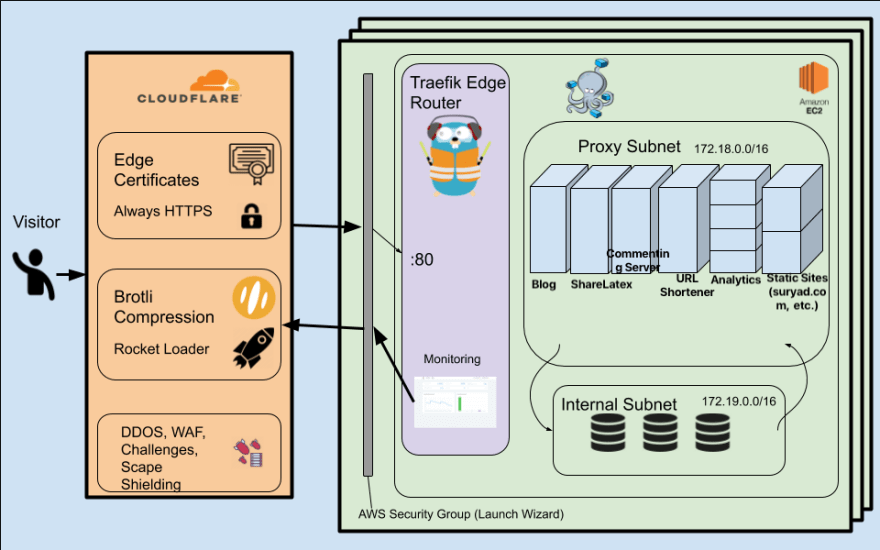

So suppose you have some free time and hop onto any one of my websites/services. How would you get there?

Here's a somewhat simple and packed diagram explaining that:

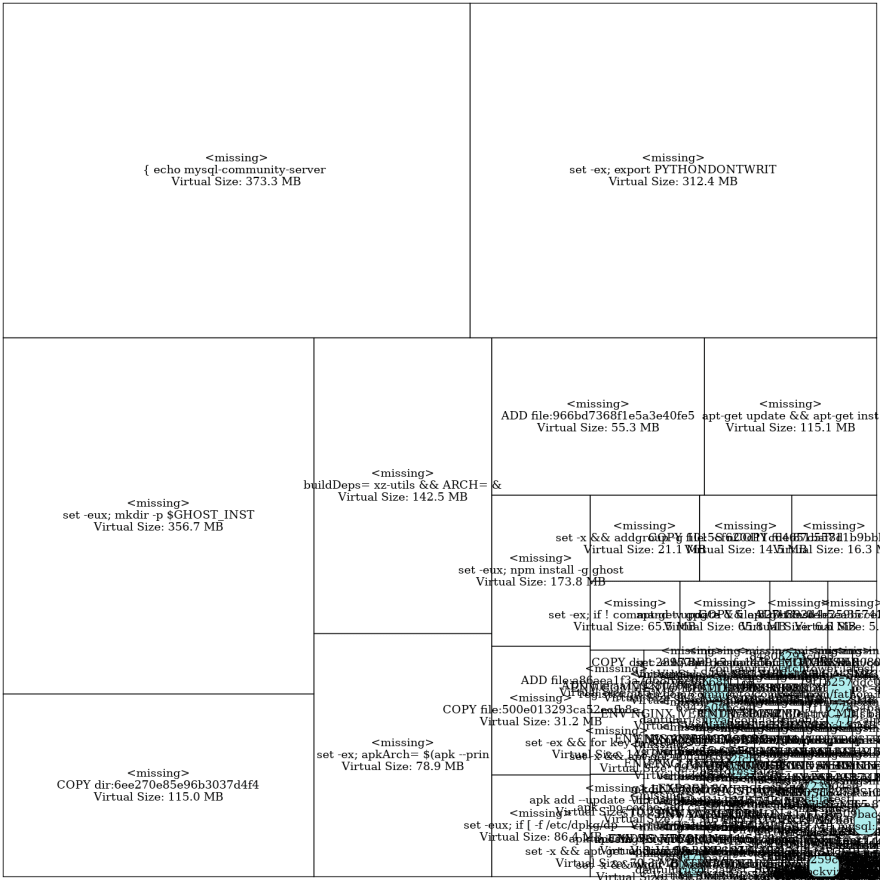

Here are also some fun diagrams generated by various scripts:
