This is the second of a four-part series introducing serverless computing and Azure Functions. In this part, I'll walk you through deploying function code to the cloud using Azure Functions. In the previous part, I introduced serverless computing and Azure Functions, and covered the advantages of running code on a serverless platform. In this part, I'll also cover the downsides of the serverless platform. Before going forward, I'd like to tell you about...

The Downsides of Serverless

As discussed in the first part of this series, there are a number of downsides to working with Azure Functions. These downsides do have workarounds, which I'll also discuss. Before then, here's the first downside of working with serverless:

• Rate of Execution: Azure Functions might not be your best bet if your function will be called very frequently by many clients, because pay-per-execution billing can become costly at sustained high volume. In that case, you may want to consider hosting your entire project on a provisioned Virtual Machine (VM), after estimating your usage and calculating your total cost; you can do that here.

During auto-scaling, only one new function app instance can be created every 10 seconds, up to a maximum of 200 instances in total. It is worth noting that each instance can service multiple executions concurrently, and there is no set limit on how much traffic a single instance can handle.

In layman's terms, scaling out to the full 200 instances takes about 2,000 seconds (200 × 10 seconds, roughly 33 minutes). Note that a single Function App can host many functions (performing different tasks) running within it.
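The scale-out arithmetic above can be sketched in a few lines of JavaScript. This is a minimal model, assuming the figures quoted in this section (one new instance every 10 seconds, a cap of 200 instances) and that scaling starts from zero instances; real scaling behaviour depends on your plan and trigger type.

```javascript
// Figures quoted above (assumed; check the current Azure Functions docs for your plan).
const SECONDS_PER_NEW_INSTANCE = 10;
const MAX_INSTANCES = 200;

// Minimum time, in seconds, to scale out from zero to `targetInstances`
// when the platform adds at most one instance every SECONDS_PER_NEW_INSTANCE.
function secondsToScaleOut(targetInstances) {
  // Targets below zero are treated as zero; targets above the cap are clamped to it.
  const reachable = Math.min(Math.max(targetInstances, 0), MAX_INSTANCES);
  return reachable * SECONDS_PER_NEW_INSTANCE;
}

console.log(secondsToScaleOut(200)); // 2000 (about 33 minutes)
console.log(secondsToScaleOut(500)); // 2000, because the target is capped at 200 instances
```

Because a single instance can serve many executions, this is the time to reach the instance ceiling, not a limit on how many requests you can handle.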

• Time of Execution: Again, Azure Functions might not be your best bet if a 5-minute timeout doesn't suit your project, since on the Consumption plan the default timeout for functions is 5 minutes. The timeout can be raised to a maximum of 10 minutes though, so if your function needs more than 10 minutes to run, you might want to consider provisioning a VM.

NOTE: If your function is triggered by an HTTP request and you expect the return value as an HTTP response, the timeout is restricted to 2.5 minutes.
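On the Consumption plan, the timeout is set with the functionTimeout property in the Function App's host.json file, written as an hh:mm:ss timespan. The JavaScript sketch below builds such a file; buildHostJson is a hypothetical helper, and the "version": "2.0" value assumes the 2.x runtime or later.

```javascript
// Consumption-plan ceiling for functionTimeout, as described above.
const MAX_TIMEOUT_MINUTES = 10;

// Hypothetical helper: returns a host.json object whose functionTimeout
// uses the "hh:mm:ss" timespan format.
function buildHostJson(timeoutMinutes) {
  if (!Number.isInteger(timeoutMinutes) || timeoutMinutes < 1 || timeoutMinutes > MAX_TIMEOUT_MINUTES) {
    throw new RangeError(`timeout must be a whole number of minutes between 1 and ${MAX_TIMEOUT_MINUTES}`);
  }
  const hours = String(Math.floor(timeoutMinutes / 60)).padStart(2, "0");
  const minutes = String(timeoutMinutes % 60).padStart(2, "0");
  return { version: "2.0", functionTimeout: `${hours}:${minutes}:00` };
}

// Prints the contents you would save as host.json at the root of the Function App.
console.log(JSON.stringify(buildHostJson(10), null, 2));
```

Asking for more than 10 minutes throws here, mirroring the Consumption-plan limit; beyond that, the article's advice is to consider a VM.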

Moreover, there's an option called Durable Functions that lets you orchestrate the execution of multiple functions in long-running workflows without being bound by this timeout. Tadaa! This isn't covered in this article though. Sorry!

Getting Started with Deployment

To get started with deploying a function app, you'll need a few basic requirements to get your function into the cloud, which are listed below.

Requirements

• Basic knowledge of Azure Functions, which is covered in the first part of this series

• Basic knowledge of JavaScript

• An Azure subscription, which you can get here

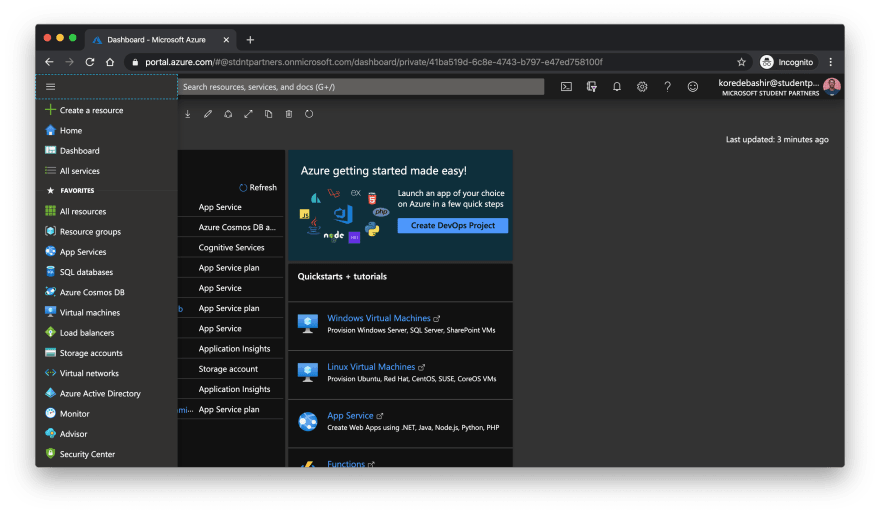

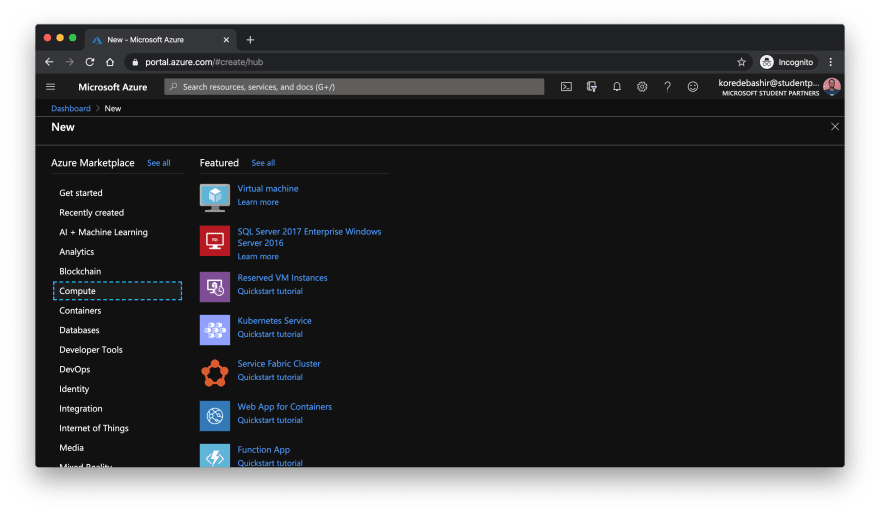

Once you have the requirements stated above, sign in to the Azure Portal and create a new resource, as shown below.

Click on the + icon to create a resource

In the following blade, click the Compute option to reveal the Function App resource we'll use to deploy our function.

In the next blade, you'll specify how you want your Function App to be provisioned, as shown below:

Enter a unique app name; it also forms the Function App's URL (for example, myapp becomes myapp.azurewebsites.net)

Click Create new to create a new resource group. I didn't create one because I already have a resource group that houses all the functions I've created on my Azure subscription.

Select Node.js as the runtime stack, since we'll be working with JavaScript. Then click Hosting to move to the next blade.

On this next blade, a storage account is created for you automatically, along with the Consumption plan I mentioned in the first part; every Function App needs a storage account, even though we're not really working with storage in this tutorial. Leave everything as is here, then click Review + create. But do make sure the Windows OS option is selected. Very important!

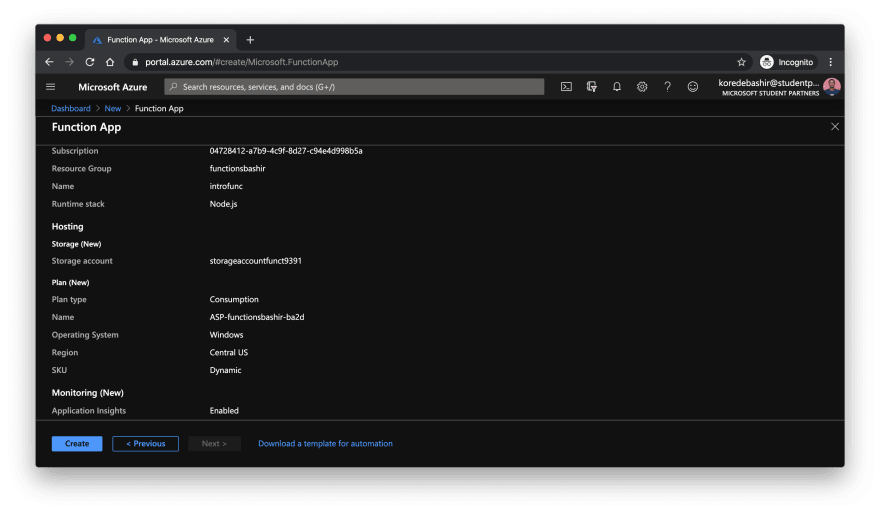

The next blade lets you review all the information you've provided Azure to provision your Function App instance. Review it thoroughly before clicking Create. Mine looks like this:

Review of my Function App instance

Once you've reviewed and created the Function App instance, you can monitor the progress of the deployment from Notifications (the "bell" icon). When the deployment is complete, click Go to resource to reveal the newly created Function App instance.

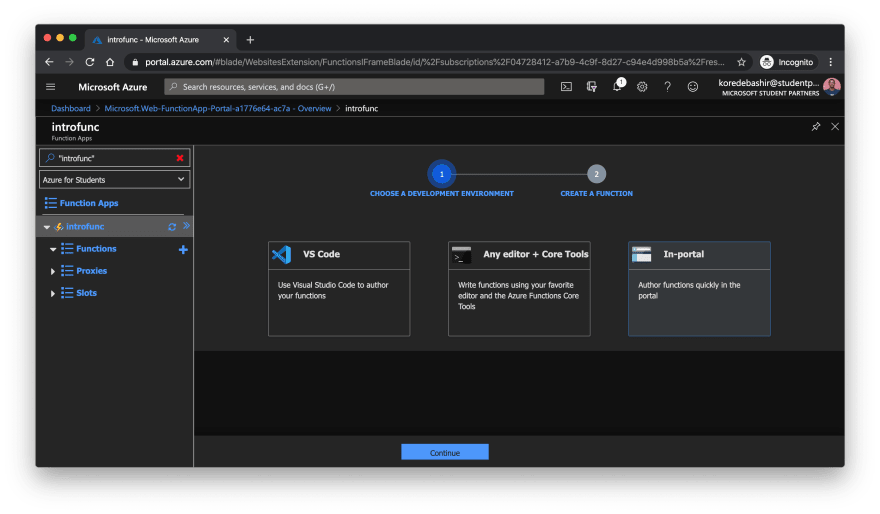

While on the overview page of your Function App (remember that this instance can hold multiple functions, all of them JavaScript functions because of the runtime we chose), click the + icon on the left pane to add a new function to your Function App.

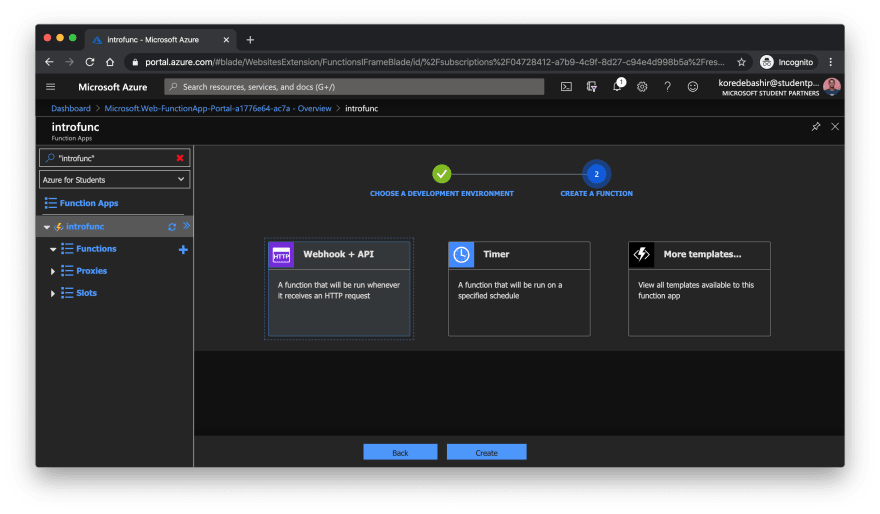

On the following screen, click the In-portal development environment option before hitting Continue.

Select In-portal, then continue

NOTE: There are various Azure Functions templates, each serving a different purpose. In this serverless guide, only the Webhook + API template (which uses the HTTP trigger) is covered.

• Going forward, select the Webhook + API option, then click Create.

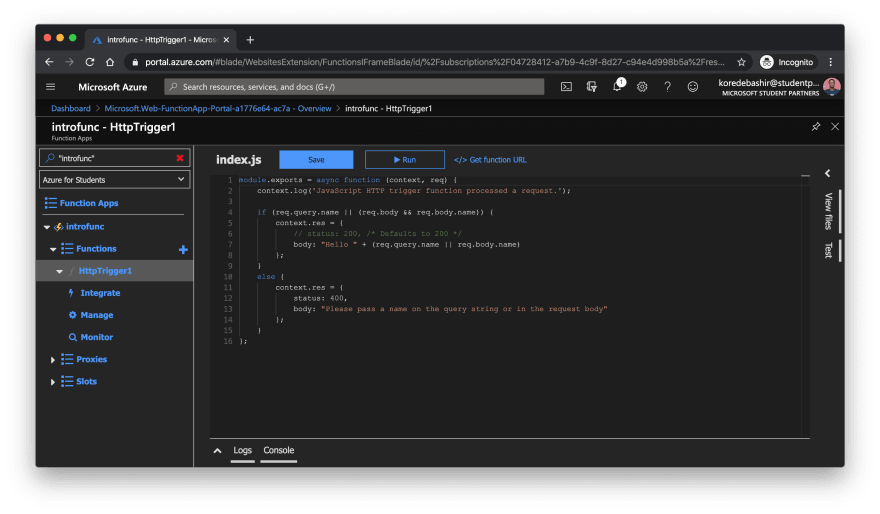

The next screen that appears after clicking Create is an overview of your newly created function, with an in-portal development environment for editing and running your code.

To start, we'll test this code as-is, before reworking the function code to suit the project we'll be building in this series.

An overview of the newly created function
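For reference, the JavaScript HTTP-trigger template looked roughly like the sketch below. This is a reconstruction, assuming the v2-era `module.exports = async function (context, req)` programming model; the code your portal generates may differ. `context` and `req` are supplied by the Functions runtime, `req` matches the input binding's name in function.json, and setting `context.res` fills the output binding. The fake context at the end is a hypothetical harness so the function can be run locally with Node.js.

```javascript
// Sketch of the HTTP-trigger template (assumed v2-era JavaScript model).
async function httpTrigger(context, req) {
  context.log("JavaScript HTTP trigger function processed a request.");

  // The name may arrive in the query string (?name=...) or in a JSON request body.
  const name = req.query.name || (req.body && req.body.name);

  if (name) {
    context.res = { body: "Hello " + name }; // status defaults to 200
  } else {
    context.res = {
      status: 400,
      body: "Please pass a name on the query string or in the request body",
    };
  }
}

module.exports = httpTrigger;

// Hypothetical local harness: a fake context object instead of the Azure runtime.
const fakeContext = { log: console.log };
httpTrigger(fakeContext, { query: { name: "Azure" } }).then(() => {
  console.log(fakeContext.res); // { body: 'Hello Azure' }
});
```

The 400 branch is why the page shows an error until a name parameter is supplied.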

To begin, click the Get function URL button to reveal the URL for your HTTP-triggered function (yeah, we just deployed a code snippet to the cloud). The copied URL already includes an authentication key, passed as the code parameter, which grants access to the deployed function. Paste this URL into a new browser tab to call your function.

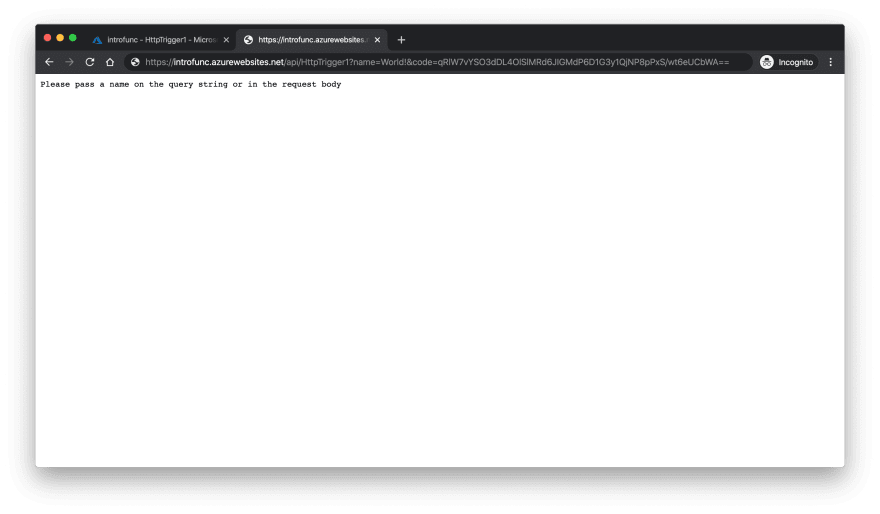

When the page loads, the first thing you're greeted with is an error message, as shown below. More detail on the error can be found in the logs of your in-portal environment. To clear the error message, pass in a name parameter, as shown below.

Add a name=(any value)& parameter before the code parameter, as shown, to clear the error message

NOTE: The & character separates query-string parameters, so placing it before the code parameter lets you pass both name and code in the same URL.
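To see how the parts of the function URL fit together, here is a small JavaScript sketch (runnable with Node.js) that assembles one. The app name, function name, and key are hypothetical placeholders, and the `https://{app}.azurewebsites.net/api/{function}` shape assumes the default host and `api` route prefix; the URL that Get function URL gives you remains the authoritative one.

```javascript
// Hypothetical placeholders: replace with your own app name, function name and key.
const appName = "my-function-app";
const functionName = "HttpTrigger1";
const functionKey = "YOUR_FUNCTION_KEY";

function buildFunctionUrl(app, fn, key, params) {
  const url = new URL(`https://${app}.azurewebsites.net/api/${fn}`);
  // URLSearchParams joins each key=value pair with "&" and percent-encodes values.
  for (const [name, value] of Object.entries(params)) {
    url.searchParams.set(name, value);
  }
  url.searchParams.set("code", key); // the authentication key
  return url.toString();
}

console.log(buildFunctionUrl(appName, functionName, functionKey, { name: "Azure" }));
// https://my-function-app.azurewebsites.net/api/HttpTrigger1?name=Azure&code=YOUR_FUNCTION_KEY
```

Letting URLSearchParams build the query string avoids hand-placing the & separators and takes care of encoding values such as names with spaces.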

After passing in the name parameter, you should be greeted with a page similar to the one below!

Hello World! You just successfully deployed a function

To reveal the bindings for your function, click the View files panel on the right side of the portal. Then click the function.json file to reveal the input (trigger) and output bindings, which are marked with in and out respectively.

There's also a Test option on the right pane, where you can send test requests (for example, with different query parameters or request bodies) to check edge cases in your function. BTW,

What Do These Function Bindings Do?

The code snippet below should match the contents of your function.json file. A description of what this code does follows the snippet.

```json
{
  ...
  "bindings": [
    // binding for input/trigger starts here
    {
      "authLevel": "function",
      "type": "httpTrigger",
      "direction": "in",
      "name": "req"
    },
    // binding for output starts here
    {
      "type": "http",
      "direction": "out",
      "name": "res"
    }
  ]
  ...
}
```

As you can see, the file is written in JSON, as key/value ("key": "value") pairs, so the description below walks through the snippet key by key.

The first key in the input (trigger) binding is authLevel, which describes the security (authentication) level for your function. Only three values (or levels, in this context) can be passed to this key: function, anonymous, and admin.

• The anonymous level means that a function does not require a security (authentication) code to execute or provide responses to requests.

• The function level, used in our trigger, means that a function requires a security (authentication) code to execute or respond to requests. This is the default value for the trigger binding.

• The admin level means that a function requires the master security (authentication) key to execute or respond to requests, so only a user with administrative access to the Function App that hosts the function (that is, someone holding the master key) can execute it.
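The sketch below shows which key a caller would attach for each authLevel value. It sends the key in the x-functions-key HTTP header, which Azure Functions accepts as an alternative to the code query parameter used earlier; buildAuthHeaders and the placeholder keys are hypothetical.

```javascript
// Hypothetical helper: the headers a caller needs for each authLevel value.
function buildAuthHeaders(authLevel, keys) {
  switch (authLevel) {
    case "anonymous":
      return {}; // no key required
    case "function":
      return { "x-functions-key": keys.functionKey }; // a function key (host keys are also accepted)
    case "admin":
      return { "x-functions-key": keys.masterKey }; // only the master key is accepted
    default:
      throw new Error(`Unknown authLevel: ${authLevel}`);
  }
}

// Placeholder keys: copy the real ones from the Azure Portal.
const keys = { functionKey: "YOUR_FUNCTION_KEY", masterKey: "YOUR_MASTER_KEY" };
console.log(buildAuthHeaders("function", keys)); // { 'x-functions-key': 'YOUR_FUNCTION_KEY' }
```

With Node.js 18 or later, the returned object could be passed straight to the built-in fetch, e.g. `fetch(url, { headers: buildAuthHeaders("function", keys) })`.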

Moving on, the second key in the input binding is type, which indicates the kind of event the function handles: in our case, HTTP-triggered events, hence the httpTrigger value.

The third key in the same input binding is direction, which denotes whether the binding is an input or an output; in this case, in for input.

The last key in the input binding is name, whose value is the variable name the function code uses for the request (input) or response (output); here, req refers to the incoming request.

Now, the output binding has three default keys, type, direction, and name, which define the response your function provides. More key/value pairs can be added to suit your function definition.

The first key in the output binding is type, which indicates the kind of value the function returns; in this case http, since we'll be sending responses back to a webhook or the web.

The second key in the same output binding is direction, which denotes the kind of binding in the second bindings block; in this case, out for output.

The last key in the output binding is also name, which again holds the variable name used in the function code; here, res refers to the HTTP response.
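To tie the keys described above together, the sketch below loads the same bindings as a JavaScript object and checks them against the rules in this section. checkBindings is a hypothetical helper, not part of Azure Functions; treating a missing authLevel as "function" follows the default mentioned above, and rejecting duplicate names is this sketch's own rule.

```javascript
// The bindings from the function.json snippet above as a JavaScript object
// (the elided "..." properties are omitted).
const functionJson = {
  bindings: [
    { authLevel: "function", type: "httpTrigger", direction: "in", name: "req" },
    { type: "http", direction: "out", name: "res" },
  ],
};

const AUTH_LEVELS = ["anonymous", "function", "admin"];

// Hypothetical checker: returns a list of problems (empty means consistent).
function checkBindings(config) {
  const problems = [];
  const seenNames = new Set();
  for (const binding of config.bindings) {
    if (binding.direction !== "in" && binding.direction !== "out") {
      problems.push(`${binding.name}: direction must be "in" or "out"`);
    }
    // authLevel applies to the HTTP trigger; "function" is assumed when it is omitted.
    const level = binding.authLevel ?? "function";
    if (binding.type === "httpTrigger" && !AUTH_LEVELS.includes(level)) {
      problems.push(`${binding.name}: unknown authLevel "${level}"`);
    }
    // Each name becomes a variable in the code (req, res), so this checker rejects duplicates.
    if (seenNames.has(binding.name)) {
      problems.push(`duplicate binding name "${binding.name}"`);
    }
    seenNames.add(binding.name);
  }
  return problems;
}

// Map each binding name to its role, mirroring the description above.
for (const b of functionJson.bindings) {
  console.log(`${b.name}: ${b.direction === "in" ? "input" : "output"} (${b.type})`);
}
console.log(checkBindings(functionJson)); // []
```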

What's Next?

In this part, I walked you through deploying function code to the cloud using Azure Functions. In the next part, I'll walk you through how to create and set up a webhook on GitHub, and connect it to our newly created API (we'll be making changes to the function code) so that it listens for wiki update events (Gollum events, in this case).

See you on the other side. 😺 Cheers!
