In the previous article I introduced my plan to migrate away from my dedicated server to a fully serverless infrastructure. That first example was quite a doable task - after all, the website in question was mostly static, and migrating the API part was rather painless thanks to the sound base of C# / .NET.

The current task at hand will be a bit more difficult. In this post I'm going to convert my multiplayer spaceshoot game. If you want to know more about this game, check out my "classic" articles:

Similar to the previous article, the bulk of the work concerns static web assets. These will not pose any problem. However, this time we need something more than just a bit of API. We need a full server - or at least full server capabilities.

Quick Recap - What do we want?

Before we go into the technical details let's see what I expect from this migration:

- A cleaner code base (finally I can clean up my stuff, maybe remove something and modernize some other parts)

- No more FTP or messy / unclear deployments - everything should be handled by CI/CD pipelines

- Cost reduction; sounds weird, but the last thing I want to have is a cost increase (today it's about 30 € per month for the hosting and my goal is to bring this below or close to 10 € - note: I pay much more for domains and these costs are not included here as they will remain the same).

Right now I am on track. Up to this point the new monthly total will be 5 €, which is for a DNS service with full e-mail capabilities incl. 30 GB storage and simple static websites.

Let's see what we can do about this thing and how much it will cost me monthly.

Legacy Structure

Right now the project is just a Visual Studio solution. It consists of three projects:

- The client, these are mostly static assets

- The server, this is a command-line application spawning a WebSocket server on a certain port

- Unit tests for the server

Running this results in the game being loaded - but without the server the multiplayer part is just grayed out.

There are several things that make this structure hard to work with for my desired goal:

- We don't want to create a custom server / command line application

- The web assets should be processed by a web pipeline / bundler, which also includes more optimizations etc.

- The whole build process should run on a dedicated pipeline within Azure DevOps

A bit of an update would also be nice - right now I still need to enter the "Server IP" in the multiplayer options. I'd like to use a DNS name here instead (and it should default to the current domain).
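Defaulting to the current domain can be sketched in a few lines of client-side JavaScript. The helper name resolveServerUrl, its parameters and the settings.serverHost value in the usage comment are made up for illustration and are not part of the game's code; the /ws path is the endpoint that the new server exposes later in this article.

```javascript
// Hypothetical helper, not taken from the game's source.
// Builds the WebSocket URL from an optional user-provided host,
// falling back to the host that served the page.
function resolveServerUrl(pageLocation, customHost) {
  // Use wss:// when the page itself was loaded via https://
  const scheme = pageLocation.protocol === 'https:' ? 'wss:' : 'ws:';
  const trimmed = customHost ? customHost.trim() : '';
  const host = trimmed || pageLocation.host;
  return `${scheme}//${host}/ws`;
}

// In the browser this could be called as (settings.serverHost is an assumption):
// const socket = new WebSocket(resolveServerUrl(window.location, settings.serverHost));
```

Passing the location object in as a parameter keeps the helper testable outside of a browser.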

Before we can settle on a basic structure for our new repository we need to find an appropriate serverless replacement.

Choosing the Right Service

While I've chosen an Azure service in the last article we also might want to consider something else. However, let's first see what Azure offers us:

- Azure Web PubSub (only free for up to 20 connections with a max. of 20k messages; standard paid tier would work - but costs around $45 per month)

- Azure Functions (durable functions to be specific; the free tier goes up to 400,000 GB-s monthly, which at an assumed memory consumption of 100 MB amounts to about 46 days of execution time per month - or a party of 46 users concurrently playing for a whole day in a single month)

- Azure App Service (the free tier would be sufficient, but it gives us a limit of 60 CPU minutes / day, which - for a larger game - would be insufficient; furthermore it does not allow custom domains)

- Azure SignalR (only free for up to 20 connections with a max. of 20k messages; otherwise - also from standard plan - comparable to Azure Web PubSub)
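The 46-day figure for Azure Functions follows from simple arithmetic, sketched here. The 100 MB memory consumption is the assumption stated in the list above (rounded to 0.1 GB); the function name is purely illustrative.

```javascript
// Monthly free grant as quoted above: 400,000 GB-s
const FREE_GB_SECONDS = 400000;

// Converts a GB-s budget into days of continuous execution
// for a given memory footprint (in GB).
function freeExecutionDays(gbSeconds, memoryGb) {
  const seconds = gbSeconds / memoryGb;
  return seconds / (60 * 60 * 24);
}

// 400,000 GB-s / 0.1 GB = 4,000,000 s, i.e. roughly 46.3 days
console.log(freeExecutionDays(FREE_GB_SECONDS, 0.1).toFixed(1));
```

A larger memory footprint shrinks the budget proportionally, e.g. 200 MB would leave about 23 days.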

So far Azure Functions seems the most interesting - especially since we could use Azure Static Web Apps again for our static assets.

Alternatives are:

- Linode (Nanode with 1 GB could be as cheap as $5 per month)

- DigitalOcean (a Droplet with 512 MB could be as cheap as $4 per month)

- Render (a free instance running for 750 instance hours with shutdown if not used)

- Ably (a free tier contains 6 million monthly messages with 200 concurrent connections)

Ably could be used together with Netlify or other functions as a service.

The free tier of Ably looks interesting, and choosing this stack seems to strike the right balance between learning something new (Ably + Azure Entity Functions) and an established pattern (Azure Static Web Apps). Also, there is a great blog post by Ably, which discusses a very similar scenario.

In the end we would aim for the architecture as outlined in the article.

Personally, I'd love to go with Render; however, it does not have "native" support for C#/.NET. This would mean a complete rewrite into Node.js. Even if I wanted to do that, I lack the time. So, unfortunately, I need to drop that option. Or do I?!

It turns out that Render also supports deployment of Docker images. This way, I could just do the most minimalistic changes to the server code - making it a new and shiny .NET 8 AoT Docker container.

Minimum memory footprint, best performance - least development effort. Sounds too good to be true? Well, let's give it a try!

Code Changes for the Client

The app itself was quite a mess. It was created in a time before bundlers, so it featured an HTML file that in the end had something like:

<script src="scripts/base.js"></script>

<script src="scripts/color.js"></script>

<script src="scripts/dialog.js"></script>

<script src="scripts/chat.js"></script>

<script src="scripts/game.js"></script>

<script src="scripts/menu.js"></script>

<script src="scripts/settings.js"></script>

<script src="scripts/gauges.js"></script>

<script src="scripts/collision.js"></script>

<script src="scripts/objects.js"></script>

<script src="scripts/canvas.js"></script>

<script src="scripts/logic.js"></script>

<script src="scripts/sockets.js"></script>

<script src="scripts/misc.js"></script>

<script src="scripts/managers.js"></script>

<script src="scripts/statistic.js"></script>

<script src="scripts/events.js"></script>

Then in order to optimize this I ran (from a BAT-file) a little utility called jsmin.exe (!), which received as input all found <script> sources. In the end, this little utility produced a script.js file, which was then used (via search and replace) as the only script in the index.html file.

Let's improve this. We create a new Node.js project and install vite:

npm init -y

npm i vite --save-dev

Let's change these scripts and run vite build in our app directory where the index.html is placed:

$ npx vite build app

vite v5.0.4 building for production...

app/scripts/color.js (43:6) Use of eval in "app/scripts/color.js" is strongly discouraged as it poses security risks and may cause issues with minification.

app/scripts/color.js (758:5) Use of eval in "app/scripts/color.js" is strongly discouraged as it poses security risks and may cause issues with minification.

✓ 21 modules transformed.

dist/index.html 6.13 kB │ gzip: 2.29 kB

dist/assets/Orbitron-R9u8LGC7.woff 11.05 kB

dist/assets/logo-Tskk-yc3.png 14.70 kB

dist/assets/ajax-GrDhAkYX.gif 15.59 kB

dist/assets/Orbitron-PNgY5g3B.ttf 19.63 kB

dist/assets/Orbitron-M_WnupyW.eot 19.80 kB

dist/assets/Orbitron-UMfAHA_M.svg 23.54 kB │ gzip: 5.49 kB

dist/assets/tile-Ptjf1ZB0.jpg 24.34 kB

dist/assets/music-oaBBUWJ3.ogg 2,488.88 kB

dist/assets/music-91in0ONT.mp3 2,612.04 kB

dist/assets/index-kof9P_RP.css 4.13 kB │ gzip: 1.46 kB

dist/assets/index-unNsPeoP.js 31.79 kB │ gzip: 8.47 kB

✓ built in 333ms

That does not look so bad! Vite takes care of all the references in the HTML file and processes the JavaScript and CSS. Now it's time to alter the JavaScript - while CSS already works, with url() being considered for references, the asset references in the JS code require special treatment. Additionally, instead of making everything global we want to leverage exports and such.

Beforehand, the JS code contained references such as

this.background.src = 'stars.jpg';

Now we transform it into two parts:

// get the URL - inform Vite that we use this asset

import starsUrl from '../assets/stars.jpg';

// use the relative URL stored in starsUrl to assign the src

this.background.src = starsUrl;

After the transformation the build output changes to:

$ npx vite build app

vite v5.0.4 building for production...

✓ 50 modules transformed.

dist/assets/networklogo-BKpiE3Ml.png 4.13 kB

dist/index.html 5.64 kB │ gzip: 2.11 kB

dist/assets/speedlogo-CeTI_ixV.png 5.68 kB

dist/assets/bomblogo-bb9v27nW.png 6.21 kB

dist/assets/shieldlogo-a4efXfXK.png 8.95 kB

dist/assets/asteroid-Kwpmpd7o.png 10.46 kB

dist/assets/Orbitron-R9u8LGC7.woff 11.05 kB

dist/assets/hit-RD0vSICG.wav 13.38 kB

dist/assets/logo-Tskk-yc3.png 14.70 kB

dist/assets/ajax-GrDhAkYX.gif 15.59 kB

dist/assets/powerup-Im3npHN8.wav 18.06 kB

dist/assets/Orbitron-PNgY5g3B.ttf 19.63 kB

dist/assets/Orbitron-M_WnupyW.eot 19.80 kB

dist/assets/explosion5-0K9zPZss.png 23.15 kB

dist/assets/Orbitron-UMfAHA_M.svg 23.54 kB │ gzip: 5.49 kB

dist/assets/tile-Ptjf1ZB0.jpg 24.34 kB

dist/assets/explosion4-w_fwSyiw.png 34.61 kB

dist/assets/explosion--9c5hm9o.wav 51.57 kB

dist/assets/laser-X0m8flOM.wav 55.18 kB

dist/assets/explosion1-3PSQfbx8.png 70.62 kB

dist/assets/explosion2-MkJxUAwQ.png 82.56 kB

dist/assets/explosion3-aJ0YgvV4.png 110.42 kB

dist/assets/stars-9B6WonPT.jpg 160.04 kB

dist/assets/bomb-ev2Nc1go.wav 170.30 kB

dist/assets/music-oaBBUWJ3.ogg 2,488.88 kB

dist/assets/music-91in0ONT.mp3 2,612.04 kB

dist/assets/index-kof9P_RP.css 4.13 kB │ gzip: 1.46 kB

dist/assets/index-Rtd1L9jk.js 90.00 kB │ gzip: 47.10 kB

✓ built in 398ms

Testing it out - it seems to work; at least in single-player.

One thing that I realized is that smaller resources did not load properly. The reason is that by default Vite inlines resources smaller than 4 kB. While this is a good default, it breaks the original logic. Of course, I could start a rewrite at this point - but I wanted to keep this short.

So let's change the Vite config by adding a file vite.config.mjs with the following content:

import { defineConfig } from "vite";

export default defineConfig({
  build: {
    assetsInlineLimit: 0,
  },
});
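To check whether an asset ended up inlined, it helps to look at the URL that an asset import resolves to: an inlined asset becomes a data: URL, whereas an emitted file keeps a regular path. The following helper is a hypothetical debugging aid and not part of the game; the data URL sample is made up, the file path is modeled on the build output.

```javascript
// Hypothetical debugging helper: tells whether a URL produced by an
// asset import was inlined by the bundler (data URL) or emitted as a file.
function isInlinedAsset(url) {
  return url.startsWith('data:');
}

// Sample values for illustration only
console.log(isInlinedAsset('data:image/gif;base64,R0lGODlhAQABAAAAACw=')); // true
console.log(isInlinedAsset('/assets/arrow-YmF7o4vs.gif')); // false
```

With assetsInlineLimit set to 0 every asset import should fall into the second category.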

Using this we now get a few more resources - and a few less kB in the script:

$ npx vite build app

vite v5.0.4 building for production...

✓ 52 modules transformed.

dist/assets/arrow-YmF7o4vs.gif 0.07 kB

dist/assets/cross-OqODeppU.gif 0.08 kB

dist/assets/flame-6z2-Re3e.png 0.38 kB

dist/assets/connect-87h05rgg.gif 0.72 kB

dist/assets/aim-vdnAOdDt.png 1.76 kB

dist/assets/multiple-SzT_5iD2.png 2.16 kB

dist/assets/health-CHpAVgsz.png 2.30 kB

dist/assets/shield-HJ2OrUFW.png 2.49 kB

dist/assets/detonator-IUQfTagT.png 2.65 kB

dist/assets/hs-BkskWOnC.png 2.68 kB

dist/assets/agile-z8XeVHnL.png 2.75 kB

dist/assets/plasma-FS0udKO3.png 2.77 kB

dist/assets/bomb-fnUsMZqf.png 2.79 kB

dist/assets/userslogo-yfpb4KhT.png 2.86 kB

dist/assets/ammo-3sMfQBD0.png 2.96 kB

dist/assets/healthlogo-dtGfd-G3.png 3.93 kB

dist/assets/networklogo-BKpiE3Ml.png 4.13 kB

dist/index.html 4.68 kB │ gzip: 1.40 kB

dist/assets/speedlogo-CeTI_ixV.png 5.68 kB

dist/assets/bomblogo-bb9v27nW.png 6.21 kB

dist/assets/shieldlogo-a4efXfXK.png 8.95 kB

dist/assets/asteroid-Kwpmpd7o.png 10.46 kB

dist/assets/Orbitron-R9u8LGC7.woff 11.05 kB

dist/assets/hit-RD0vSICG.wav 13.38 kB

dist/assets/logo-Tskk-yc3.png 14.70 kB

dist/assets/ajax-GrDhAkYX.gif 15.59 kB

dist/assets/powerup-Im3npHN8.wav 18.06 kB

dist/assets/Orbitron-PNgY5g3B.ttf 19.63 kB

dist/assets/Orbitron-M_WnupyW.eot 19.80 kB

dist/assets/explosion5-0K9zPZss.png 23.15 kB

dist/assets/Orbitron-UMfAHA_M.svg 23.54 kB │ gzip: 5.49 kB

dist/assets/tile-Ptjf1ZB0.jpg 24.34 kB

dist/assets/explosion4-w_fwSyiw.png 34.61 kB

dist/assets/explosion--9c5hm9o.wav 51.57 kB

dist/assets/laser-X0m8flOM.wav 55.18 kB

dist/assets/explosion1-3PSQfbx8.png 70.62 kB

dist/assets/explosion2-MkJxUAwQ.png 82.56 kB

dist/assets/explosion3-aJ0YgvV4.png 110.42 kB

dist/assets/stars-9B6WonPT.jpg 160.04 kB

dist/assets/bomb-ev2Nc1go.wav 170.30 kB

dist/assets/music-oaBBUWJ3.ogg 2,488.88 kB

dist/assets/music-91in0ONT.mp3 2,612.04 kB

dist/assets/index-kof9P_RP.css 4.13 kB │ gzip: 1.46 kB

dist/assets/index-eZuLrvvR.js 62.32 kB │ gzip: 17.01 kB

✓ built in 445ms

So now let's transform / improve the server, too.

Code Changes for the Server

Most notably the server will be published as a Docker image. So let's create one right away:

FROM mcr.microsoft.com/dotnet/sdk:8.0 AS build-env
WORKDIR /App

# Copy everything
COPY . ./
# Restore as distinct layers
RUN dotnet restore
# Build and publish a release
RUN dotnet publish -c Release -o out

# Build runtime image
FROM mcr.microsoft.com/dotnet/aspnet:8.0
WORKDIR /App
COPY --from=build-env /App/out .
# Use a relative path - /App is not part of the PATH
ENTRYPOINT ["./SpaceShoot.Server"]

Next thing on our list is to migrate from the "old" csproj to a new MSBuild / .NET SDK project. The file now looks like:

<Project Sdk="Microsoft.NET.Sdk.Web">

<PropertyGroup>

<TargetFramework>net8.0</TargetFramework>

<Nullable>enable</Nullable>

<ImplicitUsings>enable</ImplicitUsings>

<!-- 👇 Disables server GC to reduce memory consumption -->

<ServerGarbageCollection>false</ServerGarbageCollection>

<!-- 👇 Using invariant globalization reduces app sizes -->

<InvariantGlobalization>true</InvariantGlobalization>

<!-- 👇 Enables always publishing as AOT -->

<PublishAot>true</PublishAot>

</PropertyGroup>

</Project>

Quite clean, right? Well, what's missing is how the Program.cs changes. Previously, it looked like this:

namespace SpaceShooterServer
{
    class Program
    {
        static SpaceShootServer server;

        public static SpaceShootServer Server
        {
            get { return server; }
        }

        static void Main(string[] args)
        {
            server = new SpaceShootServer();
            server.Start();

            Console.WriteLine("SpaceShoot v 1.0.0. silver Server");
            Console.WriteLine("-------------------------");
            Console.WriteLine("Server up and running. Type help for more information.");
            Console.WriteLine("You can get various lists with the list command.");
            Console.WriteLine("-------------------------");

            do
            {
                Console.Write(">> ");
                var input = Console.ReadLine();

                if (Commands.Instance.Invoke(input))
                {
                    Console.ForegroundColor = ConsoleColor.Green;
                    Console.Write(Commands.Instance.Last.FlushOutput());
                }
                else
                {
                    Console.ForegroundColor = ConsoleColor.Red;
                    Console.WriteLine("Command not found or wrong arguments. Try help for available commands.");
                }

                Console.ResetColor();
            }
            while (server.Running);
        }
    }
}

As mentioned none of this is relevant any more. We don't want to create some custom server, but use the new capabilities incl. native WebSocket support.

In principle, the server's main code will look like this:

// 👇 Note Slim builder - new in .NET 8
var builder = WebApplication.CreateSlimBuilder(args);
var app = builder.Build();

var webSocketOptions = new WebSocketOptions
{
    KeepAliveInterval = TimeSpan.FromMinutes(1)
};

app.UseWebSockets(webSocketOptions);

app.Use(async (context, next) =>
{
    if (context.Request.Path == "/ws")
    {
        if (context.WebSockets.IsWebSocketRequest)
        {
            using var webSocket = await context.WebSockets.AcceptWebSocketAsync();
            await SpaceShoot(webSocket);
        }
        else
        {
            context.Response.StatusCode = StatusCodes.Status400BadRequest;
        }
    }
    else
    {
        await next(context);
    }
});

app.Run();

Notably, since we want to use AoT for optimal memory usage we need to be careful with respect to using reflection. For instance, the following old code is not desired any more:

var types = Assembly.GetExecutingAssembly().GetTypes().Where(m => !m.IsInterface && m.GetInterfaces().Contains(typeof(IUpgrade))).ToList();

var idx = ran.Next(0, types.Count);

var o = types[idx].GetConstructor(System.Type.EmptyTypes).Invoke(null) as IUpgrade;

This code randomly selects one of the available upgrades to show up in an upgrade pack. These packs are randomly created in the game.

Instead we need to pick one of the "static" approaches. This could be the service provider with an up-front registration or just a direct mapping:

var idx = ran.Next(0, 4);

IUpgrade o = idx switch
{
    0 => new AgileShipUpgrade(),
    1 => new MultipleParticleUpgrade(),
    2 => new AimParticleUpgrade(),
    _ => new FatParticleUpgrade()
};

After the full migration the whole program logic looks as follows:

using SpaceShooterServer;
using SpaceShooterServer.Game;
using SpaceShooterServer.Server;

var builder = WebApplication.CreateSlimBuilder(args);

builder.Services
    .AddSingleton<Commands>()
    .AddSingleton<MatchCollection>()
    .AddSingleton<SpaceShootServer>()
    .AddSingleton<ICommand, BanCommand>()
    .AddSingleton<ICommand, EmptyCommand>()
    .AddSingleton<ICommand, ExitCommand>()
    .AddSingleton<ICommand, HelpCommand>()
    .AddSingleton<ICommand, LogCommand>()
    .AddSingleton<ICommand, ListCommand>()
    .AddSingleton<ICommand, RestartCommand>()
    .AddSingleton<ICommand, SaveCommand>()
    .AddSingleton<ICommand, TimeCommand>()
    .AddSingleton<ICommand, UnbanCommand>()
    .AddSingleton<ICommand, UndoCommand>()
    .AddSingleton<ICommand, UpCommand>();

var app = builder.Build();

var webSocketOptions = new WebSocketOptions
{
    KeepAliveInterval = TimeSpan.FromMinutes(2)
};

app.UseWebSockets(webSocketOptions);
app.UseMiddleware<SpaceShootMiddleware>();

app.Run();

This registers all available commands, as well as the match collection and the space shoot server. By utilizing a middleware we can use dependency injection (for the space shoot server instance) and handle the incoming WebSocket requests.

The requests themselves are stored in a new record type, which contains the connection info (for the IP and other details) as well as the WebSocket used for the transfer. We also use this record to dispatch the message (see later).

using System.Net.WebSockets;

namespace SpaceShooterServer.Server;

public record WebSocketInfo
{
    public event Action<string>? OnMessage;

    public required ConnectionInfo Connection { get; set; }

    public required WebSocket Socket { get; set; }

    public void Send(string text)
    {
        OnMessage?.Invoke(text);
    }
}

The middleware looks like this:

namespace SpaceShooterServer.Server;

public class SpaceShootMiddleware(RequestDelegate next, SpaceShootServer server)
{
    private readonly RequestDelegate _next = next;
    private readonly SpaceShootServer _server = server;

    public async Task InvokeAsync(HttpContext context)
    {
        if (context.Request.Path == "/ws")
        {
            if (context.WebSockets.IsWebSocketRequest)
            {
                using var webSocket = await context.WebSockets.AcceptWebSocketAsync();

                await _server.Handle(new WebSocketInfo
                {
                    Connection = context.Connection,
                    Socket = webSocket,
                });
            }
            else
            {
                context.Response.StatusCode = StatusCodes.Status400BadRequest;
            }
        }
        else
        {
            await _next(context);
        }
    }
}

The middleware uses the new Handle function which is just a WebSocket dispatcher:

public async Task Handle(WebSocketInfo ws)
{
    _matches.AddPlayer(ws);
    await Task.WhenAny(HandleReceive(ws), HandleSend(ws));
    _matches.RemovePlayer(ws);
    await ws.Close();
}

The two loops use the injected IHostApplicationLifetime service to identify if the server should be stopped. In case this happens we want to cancel any processing of the WebSocket parts and close them gracefully. Let's see these methods in action:

// Requires: using System.Collections.Concurrent; using System.Net.WebSockets; using System.Text;

private async Task HandleReceive(WebSocketInfo ws)
{
    var end = _appLifetime.ApplicationStopping;
    var (closed, message) = await ws.Receive(end);

    // Keep reading until the socket is closed or the server shuts down
    while (!closed && !end.IsCancellationRequested)
    {
        _matches.UpdatePlayer(ws, message);
        (closed, message) = await ws.Receive(end);
    }
}

private Task HandleSend(WebSocketInfo ws)
{
    var end = _appLifetime.ApplicationStopping;
    var messages = new ConcurrentQueue<string>();
    ws.OnMessage += messages.Enqueue;

    return Task.Run(async () =>
    {
        while (!end.IsCancellationRequested)
        {
            if (!messages.TryDequeue(out var message))
            {
                // Queue is empty: wait for the next message or for shutdown
                var tcs = new TaskCompletionSource();

                void handler(string str)
                {
                    // TrySetResult tolerates being called more than once
                    tcs.TrySetResult();
                }

                ws.OnMessage += handler;

                // A message may have arrived before the handler was attached
                if (!messages.IsEmpty)
                {
                    tcs.TrySetResult();
                }

                using (end.Register(() => tcs.TrySetResult()))
                {
                    await tcs.Task;
                }

                ws.OnMessage -= handler;
                continue;
            }

            if (ws.Socket.State == WebSocketState.Open)
            {
                var raw = Encoding.UTF8.GetBytes(message);
                var content = new ReadOnlyMemory<byte>(raw);

                try
                {
                    await ws.Socket.SendAsync(content, WebSocketMessageType.Text, true, end);
                }
                catch
                {
                    // Sending failed (e.g., connection dropped) - stop the loop
                    break;
                }
            }
        }
    });
}

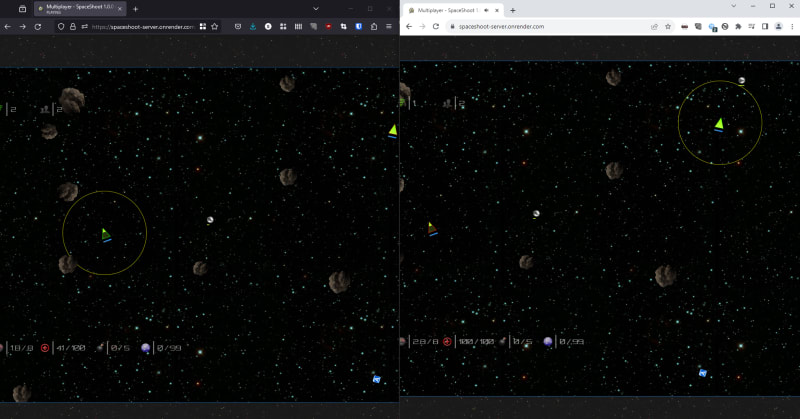

With all these changes in place we can run our game again:

However, once I built and ran this as an AoT standalone application through

dotnet publish -c Release -o out

./out/SpaceShoot.Server

the collisions stopped working. Something with reflection must still be in the code... Indeed, after looking for usage of GetType I found the following method:

public bool PerformCollision(GameObject source, GameObject target)
{
    var t_source = source.GetType();
    var t_target = target.GetType();
    var coll_source = t_source.GetMethod("OnCollision", [t_target]);

    if (coll_source == null)
    {
        return false;
    }

    coll_source.Invoke(source, [target]);
    return true;
}

Since this little method performs the actual collision check we need to find a way that works without GetMethod. How about an abstract method on GameObject? The respective code would become:

public bool PerformCollision(GameObject source, GameObject target)
{
    return source.CollideWith(target);
}

For instance, for the ship the whole code looks as follows:

public override bool CollideWith(GameObject target)
{
    switch (target)
    {
        case Particle particle:
            OnCollision(particle);
            break;
        case Bomb bomb:
            OnCollision(bomb);
            break;
        case Asteroid asteroid:
            OnCollision(asteroid);
            break;
        case Pack pack:
            OnCollision(pack);
            break;
        default:
            return false;
    }

    return true;
}

With this final change the AoT artifact is also fully operational. Time to build the image and put everything together to finish the migration.

CI/CD and More

Let's first get our Dockerfile fixed. The previous iteration still used a full .NET image and did not really make use of AoT. A more appropriate image looks like this:

FROM mcr.microsoft.com/dotnet/sdk:8.0 AS build-env
WORKDIR /App

# Copy everything
COPY . ./
# Update the package index and install the native toolchain needed for AoT
RUN apt-get update && apt-get install -y clang zlib1g-dev
# Restore as distinct layers
RUN dotnet restore
# Build and publish a release
RUN dotnet publish -c Release -o out

# Build runtime image
FROM alpine:3.14
WORKDIR /App
COPY --from=build-env /App/out .
# Alpine uses musl; gcompat provides glibc compatibility for the binary
RUN apk add --no-cache gcompat
ENTRYPOINT ["./SpaceShoot.Server"]

As you can see, we use an Alpine image - pretty much as lightweight as it gets. We also install some more tooling in the build stage to make the build work - without clang there is no native compiler available. Also, since Alpine ships with musl instead of glibc, we need to provide a compatibility layer via the gcompat package. Besides those changes - that's it. Let's build an Azure Pipeline with it.

Deploying to Render will involve the GitHub Container Registry. So our goal is to publish a Docker image from Azure Pipelines to the GitHub Container Registry.

What we can do here is to create a new azure-pipelines.yml with the following code:

trigger:
- master

resources:
- repo: self

stages:
- stage: Build
  displayName: Build image
  jobs:
  - job: Build
    displayName: Build
    pool:
      vmImage: ubuntu-latest
    steps:
    - task: Docker@2
      displayName: Login to GitHub
      inputs:
        command: login
        containerRegistry: GitHubServiceConnection
    - task: Docker@2
      displayName: Build an image
      inputs:
        command: build
        repository: FlorianRappl/SpaceShootServer
        dockerfile: '$(Build.SourcesDirectory)/api/Dockerfile'
        tags: |
          latest
    - task: Docker@2
      displayName: Push an image
      inputs:
        command: push
        dockerfile: '$(Build.SourcesDirectory)/api/Dockerfile'
        repository: FlorianRappl/SpaceShootServer
        containerRegistry: GitHubServiceConnection
        tags: |
          latest

There are two additional steps to make this work:

- We need to create a GitHub personal access token (PAT), which is used by the service connection in the next step

- We need to create a "Service Connection" in Azure DevOps (name: GitHubServiceConnection) that logs in to https://ghcr.io, i.e., the GitHub Container Registry

More information on the GitHub Container Registry can be found in the GitHub docs.

Once everything is working and the pipeline is pushing we can go to Render.com and create a new web service based on an existing Docker image. To have this working properly I set two environment variables as seen below.

The PORT variable is used by Render to speed up the auto discovery of the exposed HTTP Port. I set this to 5000 (ASP.NET Core default). To bind to the right host (0.0.0.0) instead of localhost I've also added the ASPNETCORE_URLS variable with the value http://0.0.0.0:5000. Using those settings the service is up and running!

Now it's time for a big decision: Either we place the files in a static web app (this could be hosted on Render or on Azure - in both cases it would be free), or we change the code to also expose some static files, which should then be copied into the Docker image. To keep things simple we'll go for the latter.

First, we need to change the Program.cs to actually serve static files, too:

var app = builder.Build();

var webSocketOptions = new WebSocketOptions
{
    KeepAliveInterval = TimeSpan.FromMinutes(2)
};

var staticFilesOption = new StaticFileOptions
{
};

app.UseWebSockets(webSocketOptions);
app.UseMiddleware<SpaceShootMiddleware>();

// 👇 Serve "index.html" for "/"
app.UseDefaultFiles();

// 👇 Serve the files from "wwwroot"
app.UseStaticFiles(staticFilesOption);

app.Run();

Second, we adjust the build pipeline to also process and copy the static files:

steps:
- task: NodeTool@0
  displayName: Use Node $(nodeVersion)
  inputs:
    versionSpec: $(nodeVersion)
- script: |
    npm i
    npm run build
    cp -r app/dist api/wwwroot
  displayName: Provide Static Files
# now as beforehand

Finally, we can redeploy and see the whole application working.

To have the migration finished we also change the custom domain.

Conclusion

It runs - faster and more cost efficient (for the given subdomain no additional costs will occur). The crucial part was to identify a cloud provider or way of deployment that aligns pretty well with the application and anticipated usage. In this case the application is mostly static with a huge dependency (for full operation) on a .NET service exposing a WebSocket server.

Choosing Render and deploying a free service with a Docker image using .NET 8 AoT makes sense as I doubt that this game will be used by too many people. After all, it was mostly exposed as a demo for the article - and for a funny game session with some friends.

In the next post I'll look into the overall architecture of the migration incl. an outline of the upcoming rewrite of my personal homepage.

Currently, the dedicated server is still operational - but I need to finish the migration by the end of the year.
