So far we can create spheres and planes. We want to extend this to triangles so we can start using actual meshes, the same sort that we could render with WebGL.

Mesh Class

We'll create a class to hold the mesh data. It will not be dissimilar from the mesh class from the WebGL version, but I'm not adding all the functionality yet. It's just a data holding class. Also note that I'm getting normals for each triangle programmatically which I'll explain below:

//math-helpers.js

//order matters! CCW from bottom to top

export function getTriangleNormal(pointA, pointB, pointC) {

const vector1 = subtractVector(pointC, pointA);

const vector2 = subtractVector(pointB, pointA);

return normalizeVector(crossVector(vector1, vector2));

}

//mesh.js

import { getTriangleNormal } from "../lib/math-helpers.js";

export class Mesh {

#positions = [];

#colors = [];

#triangles = [];

#triangleNormals = [];

constructor(mesh){

this.#positions = mesh.positions;

this.#colors = mesh.colors;

this.#triangles = mesh.triangles;

this.#triangleNormals = this.#triangles.map(t => getTriangleNormal(t));

}

get positions(){

return this.#positions;

}

get triangles(){

return this.#triangles;

}

get colors(){

return this.#colors;

}

get triangleNormals(){

return this.#triangleNormals;

}

get type(){

return "mesh";

}

}

We can now create mesh object:

mesh: new Mesh({

positions: [

[-0.5, -0.5, 0],

[0.5, -0.5, 0],

[0, 0.5, 0]

],

triangles: [

[0,1,2]

],

colors: [

[1,0,0,1],

[1,0,0,1],

[1,0,0,1]

]

})

Keep in mind the vertices must be defined in counter-clockwise order to get the correct normal!

Intersecting a triangle

Intersecting triangles aren't too hard. We can already do planes and since all triangles reside in a plane we can find an intersection with that plane. Then we just need to decide if the hit point was inside the triangle or not.

Barycentric Coordinates

To do this we use the barycentric coordinates of the point. This is a set of 3 coordinates that is essentially the point's weigh toward each vertex in the triangle with the constraint that all weights must sum to 1. This is actually what the GPU does for us between the vertex shader and the pixel shader in WebGL. It calculates the barycentric coordinates which helps interpolate over the pixels in the triangle. For this we need to do it manually.

The way it works it that we break the triangle up into 3 smaller triangles using the point of interest. Then we calculate the area of each opposing triangle. So if triangle[0] is the point at the top, then a0 will be the triangle at the bottom.

The coordinate value is the area of that triangle divided by the total area. Note that most descriptions will call it "area" but it's very important that you get the "signed area." This is because if the point is outside the triangle, the area needs to subtracted from the total in order for all coordinates to sum to 1. This turns out to be strangely hard to find online here's what I came up with:

//math helpers

//order matters! CCW from bottom to top

export function getTriangleCrossProduct(pointA, pointB, pointC) {

const vector1 = subtractVector(pointC, pointA);

const vector2 = subtractVector(pointB, pointA);

return crossVector(vector1, vector2);

}

//point needs to be coplanar

export function getBarycentricCoordinates(triangle, point) {

const cross = getTriangleCrossProduct(...triangle);

const n = normalizeVector(cross);

const a0 = dotVector(n, getTriangleCrossProduct(triangle[1], triangle[2], point)) / 2;

const a1 = dotVector(n, getTriangleCrossProduct(triangle[0], point, triangle[2])) / 2;

const a2 = dotVector(n, getTriangleCrossProduct(triangle[0], triangle[1], point)) / 2;

const a = getVectorMagnitude(cross) / 2;

return [

a0 / a,

a1 / a,

a2 / a

];

}

When you cross two vectors, the resulting vector is always perpendicular to the other 2, but the magnitude of that vector is also equal to the parallelogram formed by the two lines. To get the area of a triangle we would divide that area by 2. Normally, you'd take the magnitude of the vector sqrt(a ** 2 + b ** 2 + c ** 2) but this does not give you the signed area. Instead you need to dot the vector against the triangle's normal. What this does is basically multiply the magnitude by one or negative one because the normal and cross-product are going to be either in the same direction or in different directions depending on the winding order. This gives us the signed area. The final area a can be calculated normally as it's always unsigned.

What this does is allow us to check if the point falls inside or outside the triangle. If any of the barycentric coordinates are negative that means the point fell outside the triangle.

The first part is to check if the ray intersects the plane the triangle is on. This is why we calculated all those normals for each triangle. When we define the normals per vertex, as we did with the WebGL engine, the problem is that we're no longer defining a plane, we're defining a sort of curved surface and I don't know how to find that. So we need one normal for each triangle.

To find the collision with the mesh we iterate through all triangles.

intersectMesh(ray, object){

for(let i = 0; i < object.triangles.length; i++){

const triangle = object.triangles[i];

const positions = [object.positions[triangle[0]], object.positions[triangle[1]], object.positions[triangle[2]]];

const normal = object.triangleNormals[i];

const distance = this.intersectPlane(ray, { offset: dotVector(normal, positions[0]), normal });

if(distance === Infinity || distance === -Infinity) continue; //parallel

const [alpha, beta, gamma] = getBarycentricCoordinates(positions, addVector(ray.origin, scaleVector(ray.direction, distance)));

if(alpha < 0 || beta < 0 || gamma < 0 || alpha > 1 || beta > 1 || gamma > 1) continue; //not on triangle

return { distance, barycentricCoords: [alpha, beta, gamma], componentIndex: i };

}

return { distance: Infinity, barycentricCoords: null, componentIndex: null };

}

First we see if the ray intersects the plane. We have the normal, we just need the offset which we can find by taking any point and dotting it with the normal to see how far in the normal direction it is. If there's no intersection with the plane (ray is parallel) we continue to the next triangle. Next we calculate the barycentric coordinates. If any are negative or greater than 1 we're outside the triangle and we try the next triangle. If not then we have a hit, we return the distance, the barycentric coordinates and the index of the triangle. I called this componentIndex in case it's used for other multi-component model types. If all triangles fail to produce a hit we return Infinity for the distance as well as null for the coordinates and componentIndex.

intersectObjects will get an update so that it can return the optional data for the surface when it's a mesh:

intersectObjects(ray) {

let closest = { distance: Infinity, object: null, barycentricCoords: null };

for (let object of Object.values(this.objects)) {

let distance;

let barycentricCoords = null;

let componentIndex = null;

switch(object.type){

case "sphere": {

distance = this.intersectSphere(ray, object);

barycentricCoords = null;

componentIndex = null;

break;

}

case "plane": {

distance = this.intersectPlane(ray, object);

barycentricCoords = null;

componentIndex = null;

break;

}

case "mesh": {

const hit = this.intersectMesh(ray, object);

distance = hit.distance;

barycentricCoords = hit.barycentricCoords;

componentIndex = hit.componentIndex;

break;

}

}

if (distance != undefined && distance < closest.distance && distance > 0.001) {

closest = { distance, object, barycentricCoords, componentIndex };

}

}

return closest;

}

Back in getSurfaceInfo we can use that new information:

getSurfaceInfo(collisionPosition, intersection, ray, bounceCounter){

let color;

switch(intersection.object.type){

case "mesh": {

const colorA = scaleVector(intersection.object.colors[intersection.triangle[0]], intersection.barycentricCoords[0]);

const colorB = scaleVector(intersection.object.colors[intersection.triangle[1]], intersection.barycentricCoords[1]);

const colorC = scaleVector(intersection.object.colors[intersection.triangle[2]], intersection.barycentricCoords[2]);

const c = addVector(colorA, addVector(colorB, colorC));

color = componentwiseMultiplyVector(BACKGROUND_LIGHT, c);

break;

}

default: {

color = componentwiseMultiplyVector(BACKGROUND_LIGHT, intersection.object.color);

}

}

for(const light of Object.values(this.lights)){

if(this.isVisible(collisionPosition, light.position)){

const normal = this.getNormal(collisionPosition, intersection.object, intersection.componentIndex);

const toLight = normalizeVector(subtractVector(light.position, collisionPosition));

const lightAmount = scaleVector(light.color, dotVector(toLight, normal));

color = addVector(color, componentwiseMultiplyVector(intersection.object.color, lightAmount));

if(intersection.object.specularity){

if(trueReflections){

const reflectionDirection = reflectVector(ray.direction, normal);

const reflectionColor = this.raytrace({ origin: collisionPosition, direction: reflectionDirection }, bounceCounter - 1);

const specularLight = clamp(scaleVector(reflectionColor, intersection.object.specularity), 0.0, 1.0);

color = addVector(color, specularLight);

} else {

const toCamera = normalizeVector(subtractVector(ray.origin, collisionPosition));

const halfVector = normalizeVector(addVector(toLight, toCamera));

const baseSpecular = clamp(dotVector(halfVector, normal), 0.0, 1.0);

const specularMagnitude = baseSpecular ** intersection.object.gloss;

const specularLight = componentwiseMultiplyVector(light.color, [specularMagnitude, specularMagnitude, specularMagnitude, 1.0]);

color = addVector(color, specularLight);

}

}

}

}

return [...color, 1];

}

To get the color of the object at that point we can use the triangle indices to map into the color array and we can scale each color by the corresponding coordinate. Remember that all barycentric coordinates add to one so if we add up these scaled colors we get the interpolated color.

getNormal also gets an update to get the normal of the intersected triangle.

getNormal(collisionPosition, object, componentIndex){

switch(object.type){

case "sphere": {

return normalizeVector(subtractVector(collisionPosition, object.position));

}

case "plane": {

return normalizeVector(object.normal);

}

case "mesh": {

return object.triangleNormals[componentIndex];

}

}

}

With this we get a nice triangle:

And checking color interpolation:

Debugging

I thought it might be a little useful to talk about how I went about debugging. Largely it consisted of manipulating the image so that a problem area was in an easy to find location like the edges or the center of the screen. This way I could stop the program and debug just that problem ray using the row and column to see what was going on. Chrome has a built-in feature for conditional breakpoints that's usually pretty handy but I found when doing long-running tasks like rendering images this tended to hang the browser. Instead I recommend putting the if statements into the code itself to prevent that and then setting a breakpoint on that line where you can continue to walk it through.

Here's an example. I ported over the data to make a cube:

//data.js

export const cube = {

positions: [

//Front

[-0.5, -0.5, -0.5],

[0.5, -0.5, -0.5],

[0.5, 0.5, -0.5],

[-0.5, 0.5, -0.5],

//Right

[0.5, -0.5, -0.5],

[0.5, -0.5, 0.5],

[0.5, 0.5, 0.5],

[0.5, 0.5, -0.5],

//Back

[0.5, -0.5, 0.5],

[-0.5, -0.5, 0.5],

[-0.5, 0.5, 0.5],

[0.5, 0.5, 0.5],

//Left

[-0.5, -0.5, 0.5],

[-0.5, -0.5, -0.5],

[-0.5, 0.5, -0.5],

[-0.5, 0.5, 0.5],

//Top

[-0.5, 0.5, -0.5],

[0.5, 0.5, -0.5],

[0.5, 0.5, 0.5],

[-0.5, 0.5, 0.5],

//Bottom

[-0.5, -0.5, 0.5],

[0.5, -0.5, 0.5],

[0.5, -0.5, -0.5],

[-0.5, -0.5, -0.5]

],

colors: [

//Front

[1.0, 0.0, 0.0],

[1.0, 0.0, 0.0],

[1.0, 0.0, 0.0],

[1.0, 0.0, 0.0],

//Right

[0.0, 1.0, 0.0],

[0.0, 1.0, 0.0],

[0.0, 1.0, 0.0],

[0.0, 1.0, 0.0],

//Back

[0.0, 0.0, 1.0],

[0.0, 0.0, 1.0],

[0.0, 0.0, 1.0],

[0.0, 0.0, 1.0],

//Left

[1.0, 1.0, 0.0],

[1.0, 1.0, 0.0],

[1.0, 1.0, 0.0],

[1.0, 1.0, 0.0],

//Top

[1.0, 0.0, 1.0],

[1.0, 0.0, 1.0],

[1.0, 0.0, 1.0],

[1.0, 0.0, 1.0],

//Bottom

[0.0, 1.0, 1.0],

[0.0, 1.0, 1.0],

[0.0, 1.0, 1.0],

[0.0, 1.0, 1.0]

],

triangles: [

[0, 1, 2], //front

[0, 2, 3],

[4, 5, 6], //right

[4, 6, 7],

[8, 9, 10], //back

[8, 10, 11],

[12, 13, 14], //left

[12, 14, 15],

[16, 17, 18], //top

[16, 18, 19],

[20, 21, 22], //bottom

[20, 22, 23]

]

};

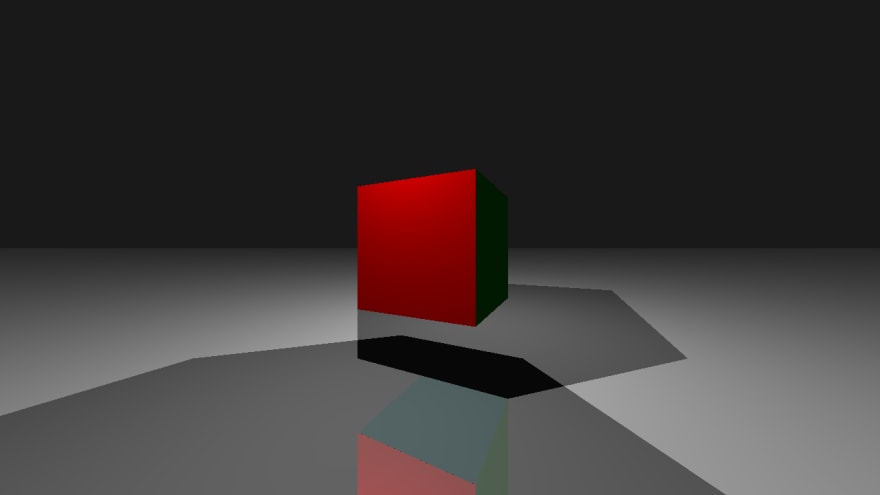

When I go to render this is what I get: (the camera is slightly to the right and there is a light source on each side of the cube a bit high on the Y-axis):

A few things are going on here:

1) We can see inside the cube using the reflection

2) The inside of the cube is colored even though it should not be facing any light source.

3) The face of the cube should have color because the light is shining on it.

Let's tackle #2 first. We can start by manipulating the camera so that the bottom side of the cube falls in the last row and center column of pixels. Then we can set a break point to check for that row and column (remember the last row is this.#height - 1). We can follow it through intersection with the plane (good) down into getSurfaceInfo which because it's a reflection and shading issue we were pretty sure this would be the case. As it turns out once we reflect, we intersect the cube but on the back side and so we just took this. This is because we just took the first triangle collision in a mesh, not the closest!

We can fix this:

intersectMesh(ray, object){

let closest = { distance: Infinity, barycentricCoords: null, componentIndex: null };

for(let i = 0; i < object.triangles.length; i++){

const triangle = object.triangles[i];

const positions = [object.positions[triangle[0]], object.positions[triangle[1]], object.positions[triangle[2]]];

const normal = object.triangleNormals[i];

const distance = this.intersectPlane(ray, { offset: dotVector(normal, positions[2]), normal });

if (distance === Infinity || distance === -Infinity || distance < INTERSECTION_DELTA) continue; //parallel or too close

if (closest.distance > distance) {

const [alpha, beta, gamma] = getBarycentricCoordinates(positions, addVector(ray.origin, scaleVector(ray.direction, distance)));

if (alpha < 0 || beta < 0 || gamma < 0 || alpha > 1 || beta > 1 || gamma > 1) continue; //not on triangle

closest = { distance, barycentricCoords: [alpha, beta, gamma], componentIndex: i };

}

}

return closest

}

It has to follow the same rules as intersectObjects. Perhaps we could fold these into a single loop, but we're just fixing, not optimizing.

This produces a better image:

This seems to fix almost all our issues, but I still have one more: "should we be able to see the bottom color of the cube in the reflection?" It's not in the light, so I feel we shouldn't.

Same thing as last time, let's set a breakpoint and check the bounced ray. When we check it what we find is that this is cause by the background light value which lightens everything a little bit to account for global illumination. So actually this makes total sense, but always good to check!

You can find the source code here: https://github.com/ndesmic/geort/tree/v4

Top comments (0)